a streaming interface for archive generation

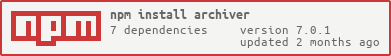

npm install archiver --save

var archiver = require('archiver');

var archive = archiver.create('zip', {}); // or archiver('zip', {});

Inherits Transform Stream methods.

Creates an Archiver instance based on the format (zip, tar, etc.) passed. Parameters can be passed directly to the Archiver constructor for convenience.

Aborts the archiving process, taking a best-effort approach, by:

- removing any pending queue tasks

- allowing any active queue workers to finish

- detaching internal module pipes

- ending both sides of the Transform stream

It will NOT drain any remaining sources.

Appends an input source (text string, buffer, or stream) to the instance. When the instance has received, processed, and emitted the input, the entry event is fired.

Replaced #addFile in v0.5.

archive.append('string', { name:'string.txt' });

archive.append(Buffer.from('string'), { name:'buffer.txt' });

archive.append(fs.createReadStream('mydir/file.txt'), { name:'stream.txt' });

archive.append(null, { name:'dir/' });

Appends multiple entries from a passed array of src-dest mappings. A lazystream wrapper is used to prevent issues with open file limits.

Globbing patterns are supported through use of the bundled file-utils module.

The data property can be set (per src-dest mapping) to define data for matched entries.

archive.bulk([

{ src: ['mydir/**'], data: { date: new Date() } },

{ src: ['mydir/**'], data: function(data) {

data.date = new Date();

return data;

}},

{ expand: true, cwd: 'mydir', src: ['**'], dest: 'newdir' }

]);

As of v0.15, the data property can also be a function that receives data for each matched entry and is expected to return it after making any desired adjustments.

For more detail on this feature, please see BULK.md.
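As an illustration of the function form of the data property, here is a minimal sketch of a reusable data function. The name `stampEntry` and the shape of the sample object are assumptions made for the example; the only behavior taken from the text above is that the function receives the entry data and returns it after adjusting it.

```javascript
// Hypothetical data function: receives the data for one matched entry,
// adjusts it, and returns it.
function stampEntry(data) {
  // A fixed timestamp, so repeated runs produce entries with the same date.
  data.date = new Date(0);
  return data;
}

// Applying it by hand to a sample object (shape assumed for illustration).
var sample = { name: 'mydir/file.txt' };
var result = stampEntry(sample);

console.log(result.name);               // mydir/file.txt
console.log(result.date.toISOString()); // 1970-01-01T00:00:00.000Z
```

A function like this could then be passed in place of the inline function shown earlier, for example `{ src: ['mydir/**'], data: stampEntry }`.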

Appends a directory and its files, recursively, given its dirpath. This is meant to be a simpler approach to something previously only possible with bulk. The use of destpath allows one to define a custom destination path within the resulting archive, and data allows for setting data on each entry appended.

// mydir/ -> archive.ext/mydir/

archive.directory('mydir');

// mydir/ -> archive.ext/abc/

archive.directory('mydir', 'abc');

// mydir/ -> archive.ext/

archive.directory('mydir', false, { date: new Date() });

archive.directory('mydir', false, function(data) {

data.date = new Date();

return data;

});

As of v0.15, the data property can also be a function that receives data for each entry and is expected to return it after making any desired adjustments.

Appends a file given its filepath using a lazystream wrapper to prevent issues with open file limits. When the instance has received, processed, and emitted the file, the entry event is fired.
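To show the idea behind a lazy wrapper, here is a minimal sketch built only on Node's own modules. It is not lazystream's actual implementation, and the name `lazyReadStream` is hypothetical. The point it illustrates is that the underlying file is not opened until a consumer first asks for data, so queuing many files does not hold many file descriptors open at once.

```javascript
var fs = require('fs');
var Readable = require('stream').Readable;

// Hypothetical helper, not part of archiver or lazystream.
function lazyReadStream(filepath) {
  var source = null;

  var lazy = new Readable({
    read: function() {
      if (source) {
        // The consumer wants more data: let the underlying stream flow again.
        source.resume();
        return;
      }

      // First read request: only now is the file actually opened.
      source = fs.createReadStream(filepath);

      source.on('data', function(chunk) {
        // Respect backpressure: pause the file when the buffer is full.
        if (!lazy.push(chunk)) {
          source.pause();
        }
      });

      source.on('end', function() {
        lazy.push(null); // signal end of data
      });

      source.on('error', function(err) {
        lazy.destroy(err);
      });
    }
  });

  return lazy;
}
```

Creating the stream does not touch the filesystem. The file is opened on the first read, for example once the stream is piped somewhere.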

archive.file('mydir/file.txt', { name:'file.txt' });

Finalizes the instance and prevents further appending to the archive structure (the queue will continue until drained). The end, close or finish events on the destination stream may fire right after calling this method, so you should set listeners beforehand to properly detect stream completion.

You must call this method to get a valid archive and end the instance stream.

Returns the current byte length emitted by archiver. Use this in your end callback to log generated size.
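The ordering described above (listeners first, then finalize) can be sketched as follows. This is one possible arrangement, not the only one. The function name `writeArchive` and its parameters `outPath` and `done` are hypothetical, and the archiver module is passed in as an argument so the sketch does not assume how it is loaded. The methods used on the instance (`pipe`, `append`, `finalize`, `pointer`) are the ones described in this document.

```javascript
var fs = require('fs');

// Hypothetical helper: `archiver` is the module (e.g. require('archiver')),
// `outPath` is where the archive is written, `done` is a Node-style callback.
function writeArchive(archiver, outPath, done) {
  var output = fs.createWriteStream(outPath);
  var archive = archiver('zip', {});

  // Attach listeners BEFORE calling finalize(), because completion events
  // on the destination stream may fire right after it.
  output.on('close', function() {
    // pointer() reports the byte length emitted by the archive.
    done(null, archive.pointer());
  });
  archive.on('error', done);

  archive.pipe(output);
  archive.append('string', { name: 'string.txt' });

  // Without this call the archive is not valid and the stream never ends.
  archive.finalize();

  return archive;
}
```

A call might look like `writeArchive(require('archiver'), 'out.zip', function(err, bytes) { ... })`, where `bytes` is the value returned by `pointer()` once the destination has closed.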

Adds a plugin to the middleware stack. Currently, this is designed for passing the module to use (replaces registerFormat/setFormat/setModule).

Inherits Transform Stream events.

Fired when the entry's input has been processed and appended to the archive. Passes entry data as first argument.

Sets the zip comment.

Sets the number of workers used to process the internal fs stat queue. Defaults to 4.

If true, all entries will be archived without compression. Defaults to false.

Passed to node's zlib module to control compression. Options may vary by node version.
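To give a feel for what such options do, the sketch below calls node's zlib module directly rather than going through archiver. The variable names are illustrative, and which option object archiver hands to zlib is not shown here. The `level` option and the `zlib.constants` values are part of node's zlib API.

```javascript
var zlib = require('zlib');

// Sample input: highly repetitive, so it compresses well.
var input = Buffer.from('archiver '.repeat(1000));

// Option objects of the kind node's zlib accepts.
var stored = { level: zlib.constants.Z_NO_COMPRESSION };   // level 0
var fastest = { level: zlib.constants.Z_BEST_SPEED };      // level 1
var best = { level: zlib.constants.Z_BEST_COMPRESSION };   // level 9

var sizes = {
  input: input.length,
  stored: zlib.deflateRawSync(input, stored).length,
  fastest: zlib.deflateRawSync(input, fastest).length,
  best: zlib.deflateRawSync(input, best).length
};

// Level 0 only wraps the data, so it comes out slightly larger than the
// input; the higher levels shrink this repetitive input considerably.
console.log(sizes);
```

Exact sizes depend on the zlib build shipped with your node version, which is one reason the available options may vary.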

Sets the entry name including internal path.

Sets the entry date. This can be any valid date string or instance. Defaults to current time in locale.

When using the bulk or file methods, fs stat data is used as the default value.
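As a rough illustration of "any valid date string or instance", the sketch below normalizes a value to a Date. The helper `toEntryDate` is hypothetical and is not archiver's own code. It only mirrors the behavior described above: strings and Date instances are accepted, and a missing value falls back to the current time.

```javascript
// Hypothetical helper showing the accepted inputs; not archiver's own code.
function toEntryDate(value) {
  if (value === undefined || value === null) {
    return new Date(); // default: current time
  }

  var date = value instanceof Date ? value : new Date(value);

  // new Date('nonsense') yields an invalid Date whose time is NaN.
  if (isNaN(date.getTime())) {
    throw new TypeError('Invalid entry date: ' + value);
  }

  return date;
}

console.log(toEntryDate('2014-01-01T00:00:00Z').toISOString());
// 2014-01-01T00:00:00.000Z
```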

If true, this entry will be archived without compression. Defaults to global store option.

Sets the entry comment.

Sets the entry permissions. Defaults to octal 0755 (directory) or 0644 (file).

When using the bulk or file methods, fs stat data is used as the default value.
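The octal defaults above can be written in JavaScript with the `0o` prefix, since the legacy `0755` form is rejected in strict mode. The sketch below uses two hypothetical helpers, `defaultMode` and `permissionBits`. The second shows how permission bits can be separated from the file-type bits that fs stat data also carries in `mode`. Whether archiver applies such a mask internally is not stated here.

```javascript
var fs = require('fs');
var os = require('os');

// Hypothetical helper mirroring the documented defaults.
function defaultMode(isDirectory) {
  return isDirectory ? 0o755 : 0o644;
}

// fs stat data reports file-type bits alongside permissions in `mode`;
// masking with 0o777 keeps only the permission bits.
function permissionBits(stats) {
  return stats.mode & 0o777;
}

console.log(defaultMode(true).toString(8));  // 755
console.log(defaultMode(false).toString(8)); // 644

// Permission bits of a real directory, taken from fs stat data.
var tmpStats = fs.statSync(os.tmpdir());
console.log(permissionBits(tmpStats).toString(8));
```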

Sets the fs stat data for this entry. This allows for reduction of fs stat calls when stat data is already known.

Compresses the tar archive using gzip, default is false.

Passed to node's zlib module to control compression. Options may vary by node version.

Sets the number of workers used to process the internal fs stat queue. Defaults to 4.

Sets the entry name including internal path.

Sets the entry date. This can be any valid date string or instance. Defaults to current time in locale.

When using the bulk or file methods, fs stat data is used as the default value.

Sets the entry permissions. Defaults to octal 0755 (directory) or 0644 (file).

When using the bulk or file methods, fs stat data is used as the default value.

Sets the fs stat data for this entry. This allows for reduction of fs stat calls when stat data is already known.

Archiver ships with out-of-the-box support for TAR and ZIP archives.

You can register additional formats with registerFormat.

Formats will be changing in the next few releases to implement a middleware approach.

Archiver makes use of several libraries/modules to avoid duplication of efforts.