D3RoMa: Disparity Diffusion-based Depth Sensing for Material-Agnostic Robotic Manipulation

CoRL 2024, Munich, Germany.

This is the official repository of D3RoMa: Disparity Diffusion-based Depth Sensing for Material-Agnostic Robotic Manipulation.

For more information, please visit our project page.

Songlin Wei, Haoran Geng, Jiayi Chen, Congyue Deng, Wenbo Cui, Chengyang Zhao, Xiaomeng Fang, Leonidas Guibas, He Wang

- We have just released a new model variant (Cond. on RGB+Raw). Please check out the updated inference.py.

- Training protocols and dataset

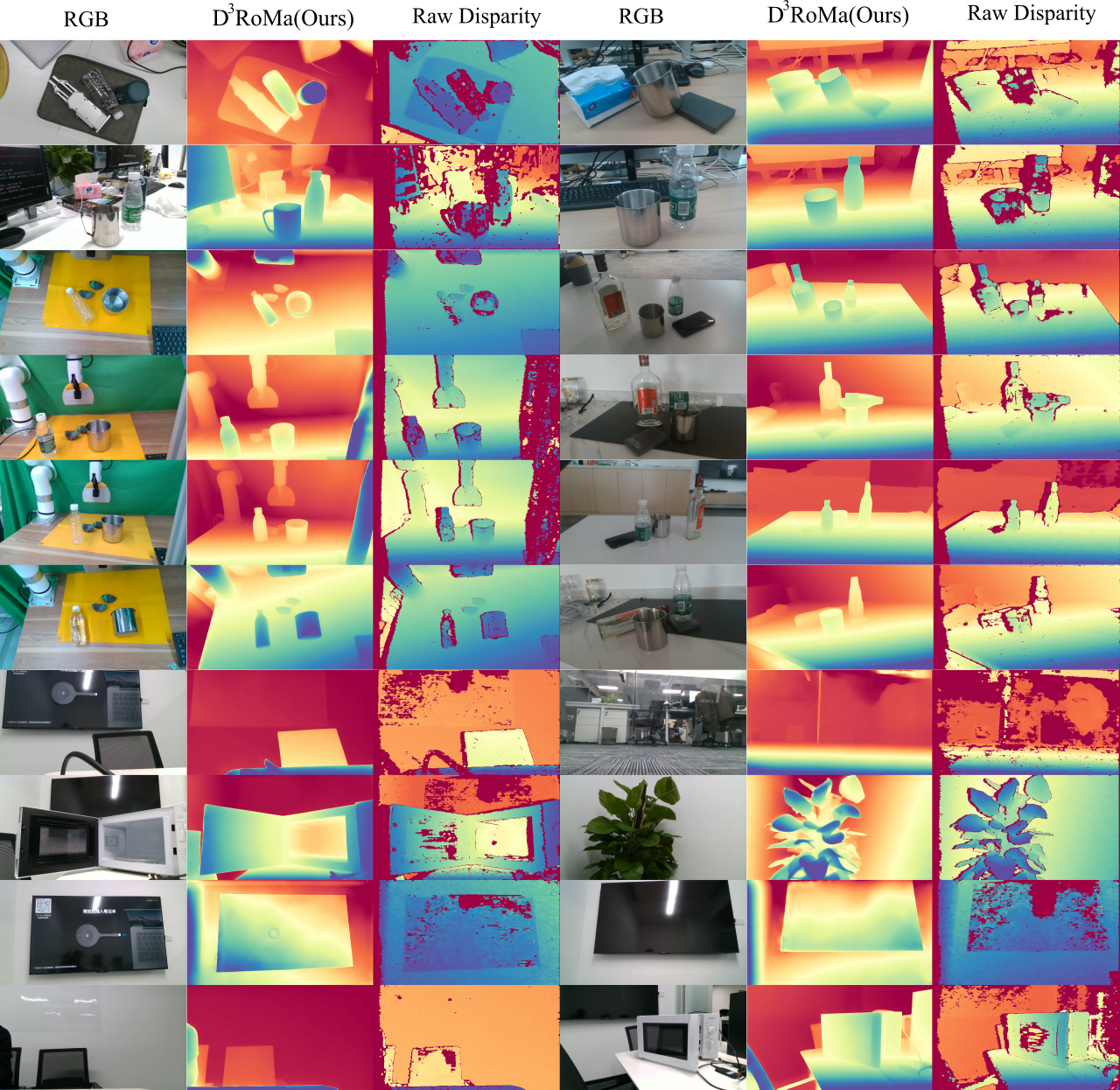

Our method robustly predicts transparent (bottles) and specular (basin and cups) object depths in tabletop environments and beyond.

conda create --name d3roma python=3.8

conda activate d3roma

# install dependencies with pip

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 torchaudio==0.12.1 --extra-index-url https://download.pytorch.org/whl/cu113

pip install huggingface_hub==0.24.5

pip install diffusers opencv-python scikit-image matplotlib transformers datasets accelerate tensorboard imageio open3d kornia

pip install hydra-core --upgrade

- For model variant Cond. Left+Right+Raw: Google Drive, Baidu Cloud

- For model variant Cond. RGB+Raw: Google Drive, Baidu Cloud

# Download pretrained weights from Google Drive

# Extract it under the project folder

You can run the following script to test our model:

python inference.py

This will generate three files under the _outputs folder:

_outputs/pred.png: the pseudo colored depth map

_outputs/pred.ply: the point cloud obtained by back-projecting the predicted depth

_outputs/raw.ply: the point cloud obtained by back-projecting the camera raw depth
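For readers unfamiliar with back-projection, the following is a minimal sketch of how a depth map can be lifted to a point cloud under a pinhole camera model. It is not taken from inference.py: the function name `backproject_depth`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the toy depth values are all assumptions chosen for illustration, and the repository's own implementation may differ.

```python
import numpy as np


def backproject_depth(depth, fx, fy, cx, cy):
    """Lift an (H, W) depth map to an (N, 3) point cloud in the camera frame.

    Assumes a pinhole camera with focal lengths (fx, fy) and principal
    point (cx, cy), all in pixels. Pixels with non-positive or non-finite
    depth are treated as invalid and dropped.
    """
    h, w = depth.shape
    # u indexes columns (x direction), v indexes rows (y direction).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = np.isfinite(depth) & (depth > 0)
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=-1)


# Toy input (hypothetical values): a flat surface 2 m away with one invalid pixel.
depth = np.full((4, 6), 2.0)
depth[0, 0] = 0.0
points = backproject_depth(depth, fx=500.0, fy=500.0, cx=3.0, cy=2.0)
```

The resulting array could then be written to a .ply file with a point cloud library such as open3d, which is already among the listed dependencies.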

If you have any questions please contact us:

Songlin Wei: [email protected], Haoran Geng: [email protected], He Wang: [email protected]

@inproceedings{

wei2024droma,

title={D3RoMa: Disparity Diffusion-based Depth Sensing for Material-Agnostic Robotic Manipulation},

author={Songlin Wei and Haoran Geng and Jiayi Chen and Congyue Deng and Cui Wenbo and Chengyang Zhao and Xiaomeng Fang and Leonidas Guibas and He Wang},

booktitle={8th Annual Conference on Robot Learning},

year={2024},

url={https://openreview.net/forum?id=7E3JAys1xO}

}

This work and the dataset are licensed under CC BY-NC 4.0.