Barbershop: GAN-based Image Compositing using Segmentation Masks

Peihao Zhu, Rameen Abdal, John Femiani, Peter Wonka

arXiv | BibTeX | Project Page | Video

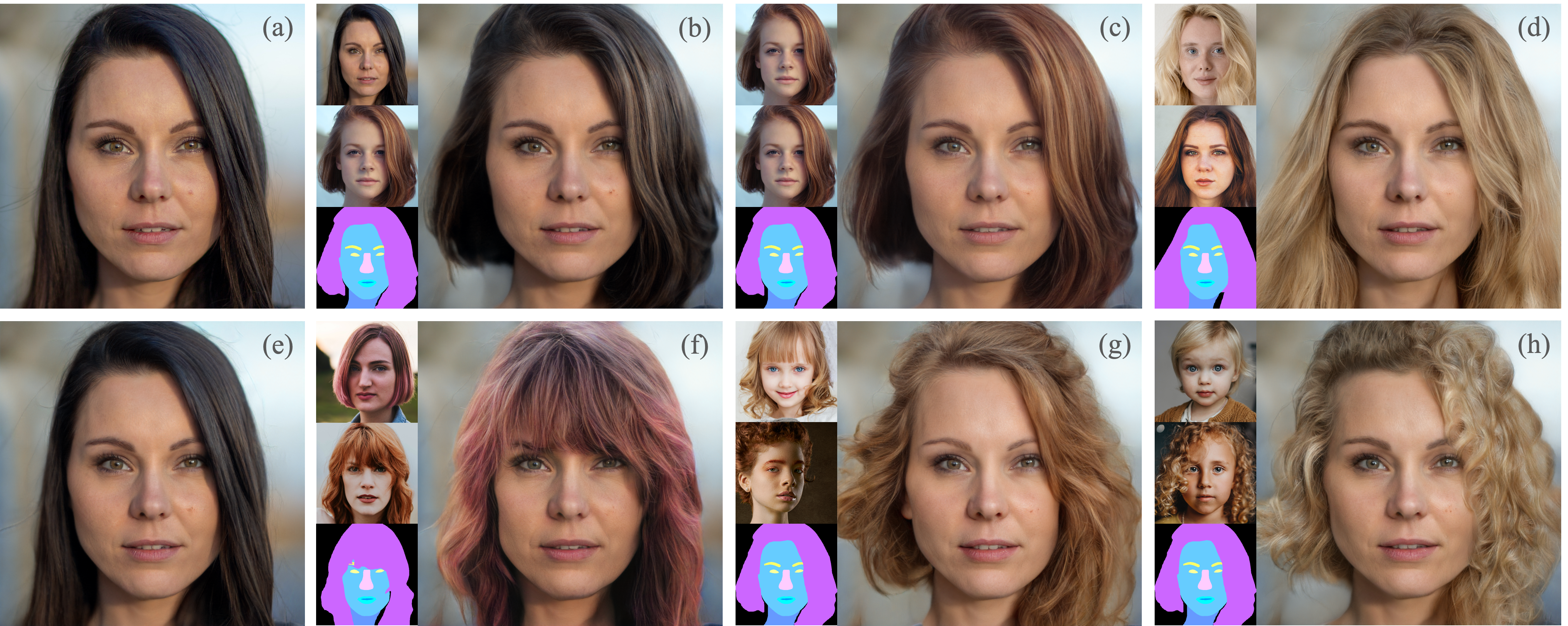

Abstract Seamlessly blending features from multiple images is extremely challenging because of complex relationships in lighting, geometry, and partial occlusion which cause coupling between different parts of the image. Even though recent work on GANs enables synthesis of realistic hair or faces, it remains difficult to combine them into a single, coherent, and plausible image rather than a disjointed set of image patches. We present a novel solution to image blending, particularly for the problem of hairstyle transfer, based on GAN-inversion. We propose a novel latent space for image blending which is better at preserving detail and encoding spatial information, and propose a new GAN-embedding algorithm which is able to slightly modify images to conform to a common segmentation mask. Our novel representation enables the transfer of the visual properties from multiple reference images including specific details such as moles and wrinkles, and because we do image blending in a latent-space we are able to synthesize images that are coherent. Our approach avoids blending artifacts present in other approaches and finds a globally consistent image. Our results demonstrate a significant improvement over the current state of the art in a user study, with users preferring our blending solution over 95 percent of the time.

This repository is a fork of the official implementation of Barbershop. It builds on the official repository to add the following features:

- Combine `main.py` and `align_face.py` into a single command line interface as part of the updated `main.py`.
- Provide a notebook `inference.ipynb` for performing step-by-step inference and visualization of the result.
- Add an integration with Weights & Biases, which enables the predictions to be visualized as a W&B Table. The integration works with both the script and the notebook.

- Clone the repository:

git clone https://github.com/ZPdesu/Barbershop.git

cd Barbershop

- Dependencies:

We recommend running this repository using Anaconda. All dependencies for defining the environment are provided in `environment/environment.yaml`.

Produce realistic results:

python main.py --identity_image 90.png --structure_image 15.png --appearance_image 117.png --sign realistic --smooth 5

Produce results faithful to the masks:

python main.py --identity_image 90.png --structure_image 15.png --appearance_image 117.png --sign fidelity --smooth 5
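To compare the two modes on the same inputs, the commands above can be generated from a small script. The following is a minimal sketch, not part of the repository: the helper names `build_command` and `run_both_modes` are hypothetical, and it assumes that `realistic` and `fidelity` (the two values shown above) are the only values of `--sign` you want to run. By default it only prints the commands; pass `dry_run=False` from the repository root to actually execute them.

```python
import subprocess
import sys


def build_command(identity, structure, appearance, sign="realistic", smooth=5):
    """Return the argument list for one main.py run (hypothetical helper)."""
    # Assumption: only the two --sign values shown in this README are used.
    if sign not in ("realistic", "fidelity"):
        raise ValueError(f"unknown sign: {sign!r}")
    return [
        sys.executable, "main.py",
        "--identity_image", identity,
        "--structure_image", structure,
        "--appearance_image", appearance,
        "--sign", sign,
        "--smooth", str(smooth),
    ]


def run_both_modes(identity, structure, appearance, smooth=5, dry_run=True):
    """Build (and optionally run) the realistic and fidelity commands."""
    commands = [
        build_command(identity, structure, appearance, sign, smooth)
        for sign in ("realistic", "fidelity")
    ]
    for cmd in commands:
        if dry_run:
            # Only show what would be executed.
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError if main.py exits non-zero.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    run_both_modes("90.png", "15.png", "117.png")
```

Passing the arguments as a list rather than a single shell string avoids quoting problems if an image name contains spaces.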

You can also use the Jupyter notebook `inference.ipynb` to produce the results. The results are logged automatically as a Weights & Biases Table.
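For readers unfamiliar with W&B Tables, the sketch below shows roughly how input and result images can be logged as table rows. It is an illustration only and not the code this repository uses: the column names, the project name `barbershop-demo`, the table key `predictions`, and the helpers `make_row` and `log_table` are all assumptions. It requires the `wandb` package and a logged-in W&B account to actually log anything.

```python
# Hypothetical column layout; the repository's actual table may differ.
COLUMNS = ["identity", "structure", "appearance", "sign", "result"]


def make_row(identity, structure, appearance, sign, result):
    """Collect the image paths and mode for one prediction as a plain list."""
    return [identity, structure, appearance, sign, result]


def log_table(rows, project="barbershop-demo"):
    """Log rows built by make_row as a W&B Table (assumed project name)."""
    import wandb  # imported here so the helpers above work without wandb

    run = wandb.init(project=project)
    table = wandb.Table(columns=COLUMNS)
    for identity, structure, appearance, sign, result in rows:
        # wandb.Image accepts a path to an image file on disk.
        table.add_data(
            wandb.Image(identity),
            wandb.Image(structure),
            wandb.Image(appearance),
            sign,
            wandb.Image(result),
        )
    run.log({"predictions": table})
    run.finish()
```

A call might look like `log_table([make_row("90.png", "15.png", "117.png", "realistic", "result.png")])`, where `result.png` stands in for wherever the blended image was written.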

This code borrows heavily from II2S.

@misc{zhu2021barbershop,

title={Barbershop: GAN-based Image Compositing using Segmentation Masks},

author={Peihao Zhu and Rameen Abdal and John Femiani and Peter Wonka},

year={2021},

eprint={2106.01505},

archivePrefix={arXiv},

primaryClass={cs.CV}

}