This repository contains the implementation of "Supporting Self-supervised Graph Contrastive Learning via Actively Adaptive Augmentation". The code is based on GraphCL.

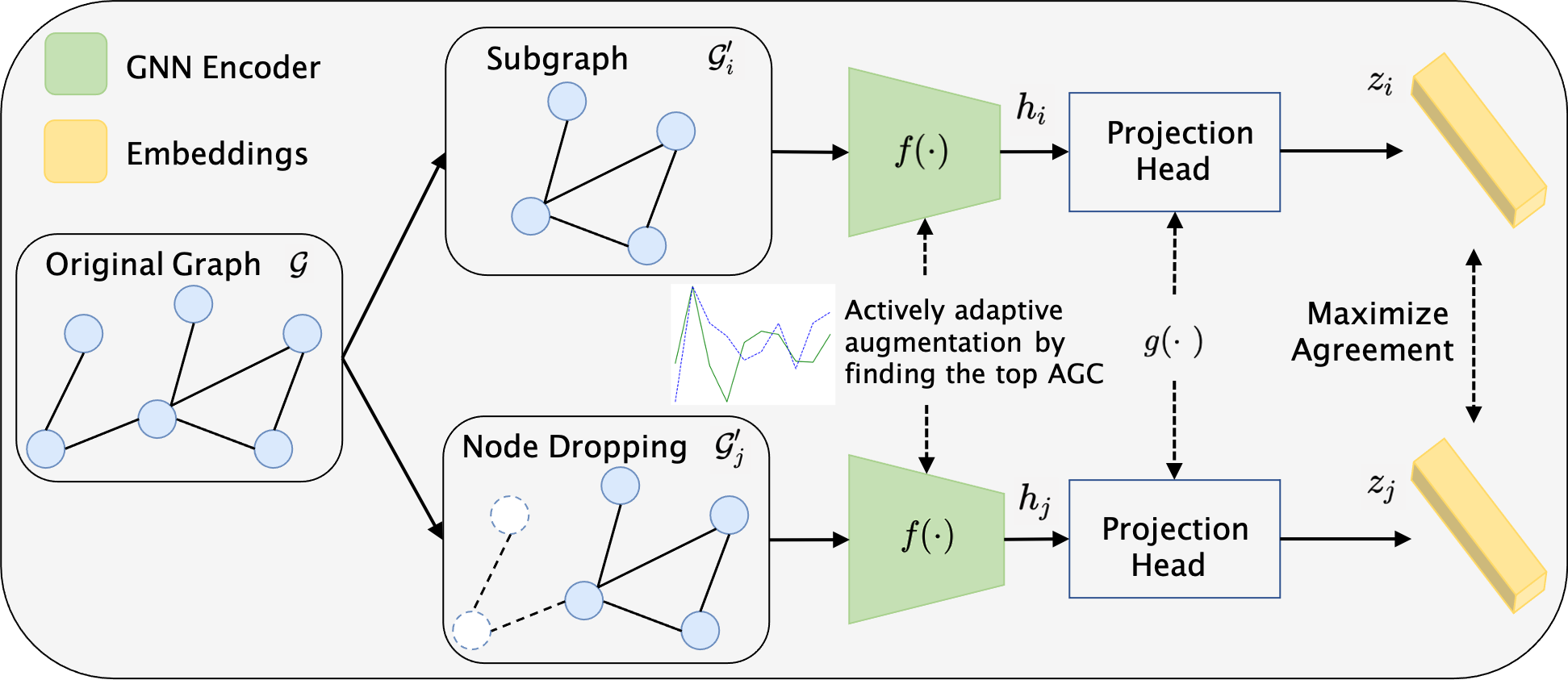

The figure above shows the framework of A3-GCL. We first generate different views of each graph via graph data augmentations. We then train a GNN encoder and a projection head with a contrastive loss that maximizes the agreement between positive pairs. Actively adaptive augmentation is applied during training. AGC is also calculated during training, which helps bridge the gap between pre-training and downstream tasks.
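To make the contrastive objective concrete, here is a minimal sketch of a common NT-Xent-style loss over two augmented views. It is an illustration only, not the code in this repository: the function names `cosine` and `contrastive_loss`, the default `temperature=0.5`, and the use of plain Python lists in place of encoder outputs are all assumptions, and the adaptive augmentation and AGC steps are not modeled.

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors (hypothetical helper)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Guard against zero-length vectors to avoid division by zero.
    return dot / max(norm_u * norm_v, 1e-12)


def contrastive_loss(view_a, view_b, temperature=0.5):
    """NT-Xent-style loss (assumed formulation, not the repository's).

    view_a[i] and view_b[i] are projected embeddings of two augmented
    views of the same graph (a positive pair). Every view_b[j] with
    j != i acts as a negative for view_a[i].
    """
    n = len(view_a)
    total = 0.0
    for i in range(n):
        logits = [cosine(view_a[i], view_b[j]) / temperature for j in range(n)]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
        # Negative log-probability of picking the positive pair.
        total += log_denominator - logits[i]
    return total / n


# Two graphs, two views each; matching indices are positive pairs.
aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
shuffled = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < shuffled)
```

The loss is lower when the two views of the same graph have similar embeddings, which is the sense in which training "maximizes agreement" between positive pairs.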

For unsupervised and semi-supervised learning on different datasets, refer to their respective READMEs.