aws-vpc-flow-log-appender is a sample project that enriches AWS VPC Flow Log data with additional information, primarily the Security Groups associated with the instances to which requests are flowing.

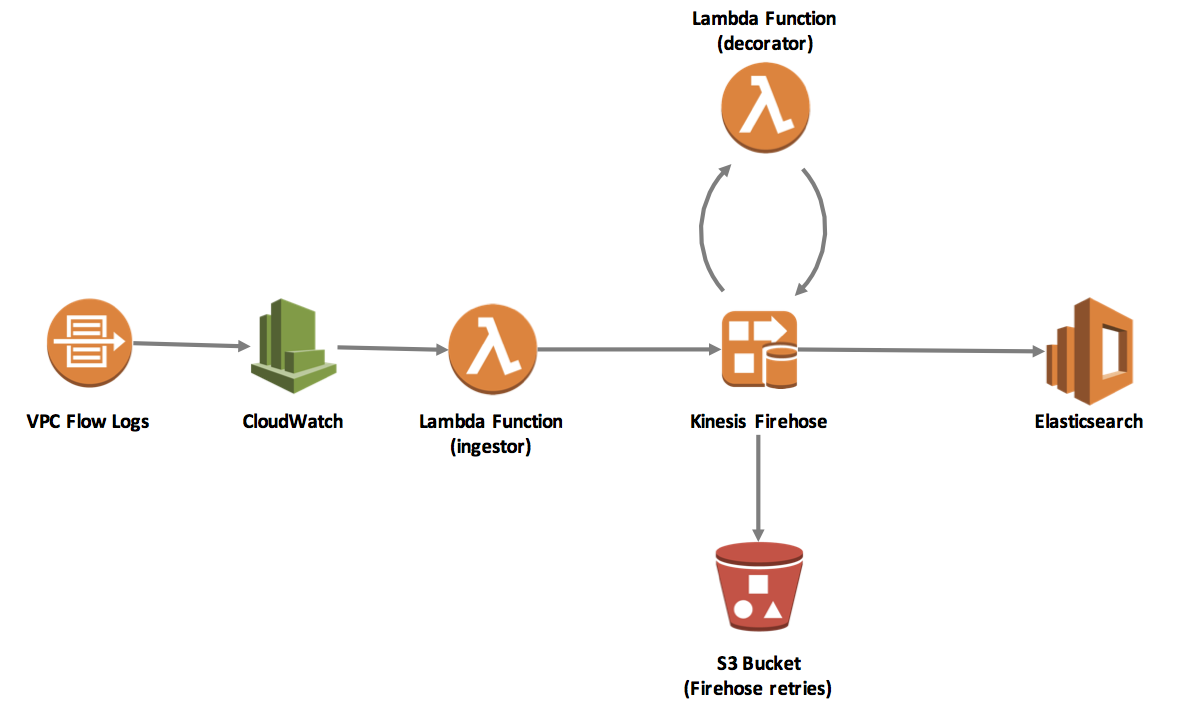

This project makes use of several AWS services, including Elasticsearch, Lambda, and Kinesis Firehose. These must be setup and configured in the proper sequence for the sample to work as expected. Here, we describe deployment of the Lambda components only. For details on deploying and configuring other services, please see the accompanying blog post.

The following diagram is a representation of the AWS services and components involved in this sample:

NOTE: This project makes use of a free geolocation service (http://freegeoip.net/) that enforces an hourly limit of 15,000 requests. It is not intended for use in a production environment. We recommend using a commercial source of IP geolocation data if you wish to run this code in such an environment.
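To make the enrichment and the rate-limit caveat concrete, here is a minimal sketch of a Firehose transformation function that appends Security Groups and geolocation data, and falls back to blank geo data when the lookup fails (for example, once the hourly limit is reached). This is not the project's actual decorator: the field names (`interfaceId`, `srcaddr`, `securityGroupIds`, `geo`), the `lookupGeo` function, and the `securityGroupsByEni` map are assumptions made for illustration. Only the `recordId` / `result` / `data` response shape comes from the Firehose data transformation contract.

```javascript
// Blank geo data used when the geolocation lookup is unavailable.
const BLANK_GEO = { country: '', latitude: null, longitude: null };

// Decorate one parsed flow log record (hypothetical field names).
async function decorate(flow, deps) {
  let geo;
  try {
    geo = await deps.lookupGeo(flow.srcaddr); // may reject when rate-limited
  } catch (err) {
    geo = BLANK_GEO; // degrade gracefully rather than lose the record
  }
  const securityGroupIds = deps.securityGroupsByEni[flow.interfaceId] || [];
  return { ...flow, securityGroupIds, geo };
}

// Transform a Firehose event; every incoming recordId is returned.
async function transform(event, deps) {
  const records = await Promise.all(event.records.map(async (record) => {
    const json = Buffer.from(record.data, 'base64').toString('utf8');
    const decorated = await decorate(JSON.parse(json), deps);
    return {
      recordId: record.recordId,
      result: 'Ok',
      data: Buffer.from(JSON.stringify(decorated) + '\n').toString('base64'),
    };
  }));
  return { records };
}
```

Passing the geolocation lookup in as a dependency keeps the sketch independent of any particular provider, so a commercial source could be swapped in without changing the transformation logic.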

To get started, clone this repository locally:

$ git clone https://github.com/awslabs/aws-vpc-flow-log-appender

The repository contains CloudFormation templates and source code to deploy and run the sample application.

To run the vpc-flow-log-appender sample, you will need to:

- Select an AWS Region into which you will deploy services. Be sure that all required services (AWS Lambda, Amazon Elasticsearch Service, Amazon CloudWatch, and Amazon Kinesis Firehose) are available in the Region you select.

- Confirm your installation of the latest AWS CLI (at least version 1.11.21).

- Confirm the AWS CLI is properly configured with credentials that have administrator access to your AWS account.

- Install Node.js and NPM.
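If you would like to script the AWS CLI version check, the following is a small sketch in Node.js. It assumes the banner printed by `aws --version` begins with `aws-cli/<version>`; the helper names `parseCliVersion` and `isAtLeast` are hypothetical and not part of this project.

```javascript
// Extract "X.Y.Z" from the banner printed by `aws --version`.
function parseCliVersion(banner) {
  const match = /aws-cli\/(\d+(?:\.\d+)*)/.exec(banner);
  return match ? match[1] : null;
}

// Compare dotted version strings numerically, part by part.
function isAtLeast(actual, minimum) {
  const a = actual.split('.').map(Number);
  const b = minimum.split('.').map(Number);
  for (let i = 0; i < Math.max(a.length, b.length); i += 1) {
    const diff = (a[i] || 0) - (b[i] || 0);
    if (diff !== 0) return diff > 0;
  }
  return true; // all parts equal
}

// Example banner; replace with the output from your own machine.
const banner = 'aws-cli/1.11.21 Python/2.7.10 Darwin/16.4.0 botocore/1.4.78';
console.log(isAtLeast(parseCliVersion(banner), '1.11.21'));
```

Comparing the parts as numbers matters here: a plain string comparison would rank "1.9.30" above "1.11.21".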

Before deploying the sample, install several dependencies using NPM:

$ cd aws-vpc-flow-log-appender/decorator

$ npm install

$ cd ../ingestor

$ npm install

$ cd ..

The deployment of our AWS resources is managed by a CloudFormation template using the AWS Serverless Application Model.

- Create a new S3 bucket from which to deploy our source code (ensure that the bucket is created in the same AWS Region in which your network and services will be deployed):

$ aws s3 mb s3://<MY_BUCKET_NAME>

- Using the Serverless Application Model, package your source code and serverless stack:

$ aws cloudformation package --template-file app-sam.yaml --s3-bucket <MY_BUCKET_NAME> --output-template-file app-sam-output.yaml

- Once packaging is complete, deploy the stack:

$ aws cloudformation deploy --template-file app-sam-output.yaml --stack-name vpc-flow-log-appender-dev --capabilities CAPABILITY_IAM

- Once we have deployed our Lambda functions, we need to return to CloudWatch and configure VPC Flow Logs to stream the data to the Lambda function. (TODO: add more detail)
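The streaming step is left as a TODO above. As background, when CloudWatch Logs streams a log group to a Lambda function through a subscription, the function receives the log events as a base64-encoded, gzip-compressed JSON document under `event.awslogs.data`. The sketch below shows how such a payload can be unpacked into raw flow log lines. It assumes the sample is wired up this way and is not taken from the project's ingestor source; `decodeLogEvents` is a hypothetical name.

```javascript
const zlib = require('zlib');

// Unpack the payload CloudWatch Logs delivers to a subscribed Lambda function.
function decodeLogEvents(event) {
  const compressed = Buffer.from(event.awslogs.data, 'base64');
  const payload = JSON.parse(zlib.gunzipSync(compressed).toString('utf8'));
  // Each log event's `message` holds one raw flow log line.
  return payload.logEvents.map((logEvent) => logEvent.message);
}
```

Each returned string is a single space-delimited flow log record, ready to be parsed or forwarded to Firehose.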

In addition to running aws-vpc-flow-log-appender using live VPC Flow Log data from your own environment, you can also use the Kinesis Data Generator (KDG) to send mock flow log data to the Kinesis Firehose delivery stream.

To get started, review the Kinesis Data Generator Help and use the included CloudFormation template to create necessary resources.

When ready:

- Navigate to your Kinesis Data Generator and log in.

- Select the Region to which you deployed aws-vpc-flow-log-appender and select the appropriate Stream (e.g. "VPCFlowLogsToElasticSearch"). Set Records per Second to 50.

- Next, use the AWS CLI to retrieve several values specific to your AWS account to generate realistic VPC Flow Log data:

# ACCOUNT_ID
$ aws sts get-caller-identity --query 'Account'

# ENI_ID (e.g. "eni-1a2b3c4d")
$ aws ec2 describe-instances --query 'Reservations[0].Instances[0].NetworkInterfaces[0].NetworkInterfaceId'

- Finally, build a template for KDG using the following. Be sure to replace <<ACCOUNT_ID>> and <<ENI_ID>> with the values you captured in the previous step (do not include quotes).

2 <<ACCOUNT_ID>> <<ENI_ID>> {{internet.ip}} 10.100.2.48 45928 6379 6 {{random.number(1)}} {{random.number(600)}} 1493070293 1493070332 ACCEPT OK

- Returning to KDG, copy and paste the mock VPC Flow Log data into Template 1. Then click the "Send data" button.

- Stop KDG after a few seconds by clicking "Stop" in the popup.

- After a few minutes, check CloudWatch Logs and your Elasticsearch cluster for data.
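To preview the kind of record the template produces before sending anything, the placeholders can be rendered locally. The following is a rough sketch only: it does not use KDG or its underlying library, the two helpers are hypothetical stand-ins that we assume behave like the template's `internet.ip` and `random.number` tokens, and the account ID and ENI ID are placeholder values.

```javascript
// Hypothetical stand-ins for the two placeholder helpers used in the template.
const helpers = {
  'internet.ip': () =>
    Array.from({ length: 4 }, () => Math.floor(Math.random() * 256)).join('.'),
  // Assumed to return an integer between 0 and max, inclusive.
  'random.number': (max) => Math.floor(Math.random() * (Number(max) + 1)),
};

// Replace each {{name}} or {{name(arg)}} token with a generated value.
function renderTemplate(template) {
  const token = /\{\{\s*([\w.]+)(?:\((\d+)\))?\s*\}\}/g;
  return template.replace(token, (match, name, arg) => {
    const helper = helpers[name];
    return helper ? String(helper(arg)) : match; // leave unknown tokens as-is
  });
}

// Placeholder account ID and ENI ID; substitute your own values.
const template =
  '2 123456789012 eni-1a2b3c4d {{internet.ip}} 10.100.2.48 45928 6379 6 ' +
  '{{random.number(1)}} {{random.number(600)}} 1493070293 1493070332 ACCEPT OK';

console.log(renderTemplate(template));
```

Each run prints one space-delimited line with a random source address, packet count, and byte count, which is roughly what each record sent by KDG should look like.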

A few notes on the above test procedure:

- While our example utilizes the ENI ID of an EC2 instance, you may use any ENI available in the AWS Region in which you deployed the sample code.

- Feel free to tweak the mock data template if needed; it is only intended to be an example.

- Do not modify values in double curly braces; these are part of the KDG template and will be filled in automatically.
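When tweaking the template, it helps to know which position holds which value. The mock record follows the default (version 2) VPC Flow Log format, which has 14 space-separated fields. Here is a minimal sketch that maps a line to named fields; the camelCase property names and the `parseFlowLogLine` function are our own choices for illustration and are not necessarily those used by the project.

```javascript
// Field order of the default (version 2) VPC Flow Log record format.
const FIELDS = [
  'version', 'accountId', 'interfaceId', 'srcaddr', 'dstaddr',
  'srcport', 'dstport', 'protocol', 'packets', 'bytes',
  'start', 'end', 'action', 'logStatus',
];

// Split one flow log line into an object keyed by field name.
function parseFlowLogLine(line) {
  const values = line.trim().split(/\s+/);
  if (values.length !== FIELDS.length) {
    throw new Error(`expected ${FIELDS.length} fields, got ${values.length}`);
  }
  const record = {};
  FIELDS.forEach((name, i) => { record[name] = values[i]; });
  return record;
}
```

In the mock template, for instance, the random IP lands in `srcaddr`, `10.100.2.48` in `dstaddr`, and the two random numbers in `packets` and `bytes`.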

To clean up the Lambda functions when you are finished with this sample:

$ aws cloudformation delete-stack --stack-name vpc-flow-log-appender-dev

- Jun 9 2017 - Fixed an issue in which the decorator did not return all records to Firehose when the geocoder was over its limit of 15,000 requests per hour; it now returns blank geo data instead. Added test methodology.

- Josh Kahn - Initial work