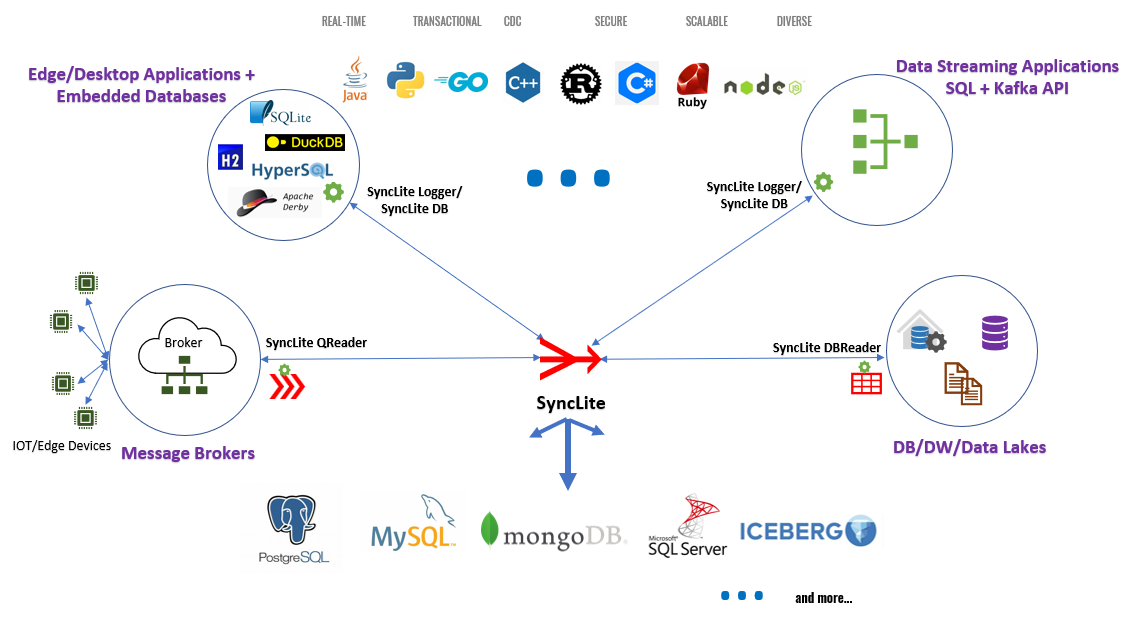

SyncLite is an open-source, low-code, comprehensive relational data consolidation platform that enables developers to rapidly build data-intensive applications for edge, desktop and mobile environments. SyncLite enables real-time, transactional data replication and consolidation from various sources, including edge/desktop applications using popular embedded databases (SQLite, DuckDB, Apache Derby, H2, HyperSQL), data streaming applications, IoT message brokers, traditional database systems (ETL) and more, into a diverse array of databases, data warehouses and data lakes, enabling AI and ML use cases at the edge and in the cloud.

SyncLite enables the following scenarios for industry-leading databases, data warehouses and data lakes.

SyncLite provides a novel CDC replication framework for embedded databases, helping developers quickly build data-intensive applications, including Gen AI Search and RAG applications, for edge, desktop, and mobile environments. It integrates seamlessly with popular embedded databases like SQLite, DuckDB, Apache Derby, H2, and HyperSQL (HSQLDB), enabling Change Data Capture (CDC), transactional, real-time data replication, and consolidation into industry-leading databases, data warehouses, and data lakes.

- SyncLite Logger: an embeddable Java library (JDBC driver) that Java and Python applications can use for efficient data syncing. It captures all SQL transactions in log files and ships these log files to the configured staging storage.

- SyncLite DB: a standalone, sync-enabled database that accepts SQL requests in JSON format over HTTP. This makes it usable from any programming language (Java, Python, C++, C#, Go, Rust, Ruby, Node.js, etc.) and ideal for flexible, real-time data integration and consolidation directly from edge/desktop applications into the final data destinations.

{Edge/Desktop Apps} + {SyncLite Logger + Embedded Databases} ---> {Staging Storage} ---> {SyncLite Consolidator} ---> {Destination DB/DW/DataLakes}

{Edge/Desktop Apps} ---> {SyncLite DB + Embedded Databases} ---> {Staging Storage} ---> {SyncLite Consolidator} ---> {Destination DB/DW/DataLakes}

Learn more:

https://www.synclite.io/synclite/sync-ready-apps

https://www.synclite.io/solutions/gen-ai-search-rag
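The two pipelines above share one idea: changes are captured as log files next to the embedded database, shipped to a staging storage, and replayed onto the destination. The following standard-library-only Python simulation of that idea is meant purely as a mental model. It is not SyncLite's implementation or log format: `capture`, `ship` and `consolidate` are hypothetical stand-ins for SyncLite Logger, the staging storage and SyncLite Consolidator, and the "log" is simply a JSON-lines file of SQL statements.

```python
import json
import shutil
import sqlite3
import tempfile
from pathlib import Path


def capture(app_db: sqlite3.Connection, log_path: Path, sql: str) -> None:
    """Run SQL on the embedded DB and append it to a local log (stand-in for SyncLite Logger)."""
    app_db.execute(sql)
    app_db.commit()
    with log_path.open("a", encoding="utf-8") as log:
        log.write(json.dumps({"sql": sql}) + "\n")


def ship(log_path: Path, stage_dir: Path) -> Path:
    """Copy the log file into a shared staging directory (stand-in for SFTP/S3/MinIO/...)."""
    stage_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy(log_path, stage_dir / log_path.name))


def consolidate(staged_log: Path, dest_db: sqlite3.Connection) -> int:
    """Replay staged SQL onto the destination DB (stand-in for SyncLite Consolidator)."""
    count = 0
    with staged_log.open(encoding="utf-8") as log:
        for line in log:
            dest_db.execute(json.loads(line)["sql"])
            count += 1
    dest_db.commit()
    return count


if __name__ == "__main__":
    work = Path(tempfile.mkdtemp())
    app_db = sqlite3.connect(work / "edge_app.db")      # embedded DB on the edge device
    dest_db = sqlite3.connect(work / "destination.db")  # centralized destination
    log_path = work / "edge_app.log"

    capture(app_db, log_path, "CREATE TABLE feedback(rating INT, comment TEXT)")
    capture(app_db, log_path, "INSERT INTO feedback VALUES (3, 'Good product')")

    replayed = consolidate(ship(log_path, work / "stage"), dest_db)
    print(replayed, dest_db.execute("SELECT * FROM feedback").fetchall())
```

Replaying plain SQL text is the simplest possible model; a real CDC pipeline also has to deal with ordering, partially shipped files and restarts, which this sketch ignores.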

SyncLite facilitates the development of large-scale data streaming applications through SyncLite Logger, which offers both a Kafka Producer API and a SQL API. This allows applications to ingest massive amounts of data and to query the ingested data using the SQL API. Together, SyncLite Logger and SyncLite Consolidator enable seamless last-mile data integration from thousands of streaming application instances into a diverse array of final data destinations.

{Data Streaming Apps} + {SyncLite Logger} ---> {Staging Storage} ---> {SyncLite Consolidator} ---> {Destination DB/DW/DataLakes}

{Data Streaming Apps} ---> {SyncLite DB} ---> {Staging Storage} ---> {SyncLite Consolidator} ---> {Destination DB/DW/DataLakes}

Learn more: https://www.synclite.io/synclite/last-mile-streaming

Set up many-to-many, scalable database replication/migration/incremental ETL pipelines from a diverse range of source databases and raw data files into a diverse range of destinations.

{Source Databases} ---> {SyncLite DBReader} ---> {Staging Storage} ---> {SyncLite Consolidator} ---> {Destination DB/DW/DataLakes}

Learn more: https://www.synclite.io/solutions/smart-database-etl
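A common way to implement incremental ETL is a high-watermark query: remember the largest key (or timestamp) already copied and fetch only newer rows on the next run. The sketch below shows that general technique with Python's `sqlite3` module on both ends. It illustrates incremental extraction in general, not SyncLite DBReader's internals, and `incremental_sync` is a hypothetical helper. Table and column names are interpolated into the SQL, so they must come from trusted configuration.

```python
import sqlite3


def incremental_sync(src: sqlite3.Connection, dst: sqlite3.Connection,
                     table: str, key_col: str, watermark):
    """Copy rows with key_col > watermark from src to dst and return the new watermark.

    table and key_col are interpolated into SQL, so they must be trusted identifiers.
    """
    cur = src.execute(
        f"SELECT * FROM {table} WHERE {key_col} > ? ORDER BY {key_col}", (watermark,)
    )
    columns = [d[0] for d in cur.description]
    rows = cur.fetchall()
    if not rows:
        return watermark  # nothing new since the last run
    placeholders = ", ".join("?" for _ in columns)
    dst.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
    dst.commit()
    # Rows are ordered by key_col, so the last row carries the new high watermark.
    return rows[-1][columns.index(key_col)]


if __name__ == "__main__":
    src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    for conn in (src, dst):
        conn.execute("CREATE TABLE orders(id INTEGER, amount REAL)")
    src.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)])
    wm = incremental_sync(src, dst, "orders", "id", 0)   # first run copies ids 1 and 2
    src.execute("INSERT INTO orders VALUES (3, 4.25)")
    wm = incremental_sync(src, dst, "orders", "id", wm)  # second run copies only id 3
    print(wm, dst.execute("SELECT COUNT(*) FROM orders").fetchone()[0])
```

A watermark only sees newly inserted rows; capturing updates and deletes is what log-based replication is for.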

Connect numerous MQTT brokers (IoT gateways) to one or more destination databases.

{IoT Message Brokers} ---> {SyncLite QReader} ---> {Staging Storage} ---> {SyncLite Consolidator} ---> {Destination DB/DW/DataLakes}

Learn more: https://www.synclite.io/solutions/iot-data-connector
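Conceptually, an IoT connector turns each message received on a broker topic into a row in a destination table. The standard-library sketch below shows only that mapping step: it takes already-received `(topic, payload)` pairs, so no MQTT client is involved. The JSON payload shape (`device_id`, `temperature`, `ts`) and the `readings` table are hypothetical examples, not a format that SyncLite QReader requires.

```python
import json
import sqlite3


def ingest_messages(conn: sqlite3.Connection, messages) -> int:
    """Insert (topic, JSON payload) pairs into a hypothetical readings table as one batch."""
    rows = []
    for topic, payload in messages:
        data = json.loads(payload)
        rows.append((topic, data["device_id"], data["temperature"], data["ts"]))
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE readings(topic TEXT, device_id TEXT, temperature REAL, ts TEXT)")
    batch = [
        ("plant1/sensors", '{"device_id": "s1", "temperature": 21.5, "ts": "2024-01-01T00:00:00Z"}'),
        ("plant1/sensors", '{"device_id": "s2", "temperature": 22.0, "ts": "2024-01-01T00:00:05Z"}'),
    ]
    print(ingest_messages(conn, batch))
```

Batching messages into one `executemany` call per poll, rather than one insert per message, is what keeps this kind of ingestion cheap.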

SyncLite Logger is a JDBC driver that enables developers to rapidly build:

- sync-enabled, robust, responsive, high-performance, low-latency, transactional, data-intensive applications for edge/mobile/desktop platforms using their favorite embedded databases (SQLite, DuckDB, Apache Derby, H2, HyperSQL)

- large-scale data streaming solutions for last-mile data integration into a wide range of industry-leading databases, while offering the ability to perform real-time analytics over the streaming data with the native embedded databases at the producer end of the pipeline.

SyncLite DB is a sync-enabled, single-node database server that wraps popular embedded databases like SQLite, DuckDB, Apache Derby, H2, and HyperSQL. Unlike the embeddable SyncLite Logger library for Java and Python applications, SyncLite DB acts as a standalone server, allowing your edge or desktop applications—regardless of the programming language—to connect and send SQL requests (wrapped in JSON format) over HTTP.
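Because SyncLite DB takes SQL wrapped in JSON over HTTP, any language with an HTTP client can talk to it. The Python sketch below only builds such a request with the standard library and does not send it. The JSON field names (`db-type`, `db-path`, `sql`), the server address and the port are hypothetical placeholders, not the documented SyncLite DB schema; check the SyncLite DB documentation before adapting it.

```python
import json
import urllib.request


def build_sql_request(server_url: str, db_path: str, sql: str,
                      db_type: str = "SQLITE") -> urllib.request.Request:
    """Build (but do not send) an HTTP POST carrying a SQL statement wrapped in JSON.

    The JSON field names are HYPOTHETICAL placeholders; check the SyncLite DB
    documentation for the real request schema.
    """
    body = json.dumps({"db-type": db_type, "db-path": db_path, "sql": sql}).encode("utf-8")
    return urllib.request.Request(
        server_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder address: replace with the host/port your SyncLite DB server listens on.
    req = build_sql_request("http://localhost:5555", "/data/test.db",
                            "INSERT INTO feedback VALUES (3, 'Good product')")
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
    # To actually send it once a server is running: urllib.request.urlopen(req)
```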

SyncLite Client is a command-line tool for operating SyncLite devices and executing SQL queries and workloads on them.

SyncLite DBReader enables data teams and data engineers to configure and orchestrate many-to-many, highly scalable, incremental/log-based database ETL/replication/migration jobs across a diverse array of databases, data warehouses and data lakes.

SyncLite QReader enables developers to integrate IoT data published to message queue brokers into a diverse array of databases, data warehouses and data lakes, enabling real-time analytics and AI use cases at all three levels: edge, fog and cloud.

SyncLite Consolidator is the centralized application for all the reader/producer tools mentioned above: it receives the incoming data and log files and consolidates them in real time into one or more databases, data warehouses and data lakes of the user's choice. SyncLite Consolidator also offers additional features: table/column/value filtering and mapping, data type mapping, database trigger installation, fine-tunable writes, support for multiple destinations and more.
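Table/column/value filtering and mapping can be pictured as a per-row transformation applied before rows are written to the destination. The sketch below is a conceptual illustration only: the rule structures (`table_map`, a `column_map` in which `None` means "drop this column", and a `row_filter` predicate) are hypothetical and do not reflect how SyncLite Consolidator is actually configured.

```python
from typing import Callable, Dict, Optional, Tuple

Row = Dict[str, object]


def transform(table: str, row: Row,
              table_map: Dict[str, str],
              column_map: Dict[str, Dict[str, Optional[str]]],
              row_filter: Callable[[str, Row], bool]) -> Optional[Tuple[str, Row]]:
    """Filter, rename and project one source row; return None if the row is filtered out.

    table_map renames tables; column_map[table] renames columns, where a value of
    None drops the column; row_filter decides whether the row is kept at all.
    """
    if not row_filter(table, row):
        return None
    renames = column_map.get(table, {})
    out: Row = {}
    for column, value in row.items():
        new_name = renames.get(column, column)
        if new_name is None:  # None means "do not replicate this column"
            continue
        out[new_name] = value
    return table_map.get(table, table), out


if __name__ == "__main__":
    print(transform(
        "feedback",
        {"rating": 4, "comment": "Excellent product", "internal_note": "n/a"},
        table_map={"feedback": "customer_feedback"},
        column_map={"feedback": {"comment": "remarks", "internal_note": None}},
        row_filter=lambda table, row: row["rating"] >= 3,  # keep only ratings of 3 or more
    ))
```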

SyncLite JobMonitor enables managing, scheduling and monitoring all SyncLite jobs created on a given host.

SyncLite Validator is an E2E integration testing tool for SyncLite.

- If you are using a pre-built release, skip this section.

- Install/download Apache Maven (3.8.6 or above): https://maven.apache.org/download.cgi

- If you opt not to use the deploy scripts generated in the release (which download the prerequisite software, Apache Tomcat and OpenJDK), install them manually:

  a. OpenJDK 11: https://jdk.java.net/java-se-ri/11

  b. Apache Tomcat 9.0.95: https://tomcat.apache.org/download-90.cgi

- Run the following:

  ```
  git clone --recurse-submodules git@github.com:syncliteio/SyncLite.git SyncLite
  cd SyncLite
  mvn -Drevision=oss clean install
  ```

- The release is created under SyncLite/target.

The build process creates the following release structure under SyncLite/target:

```
synclite-platform-<version>
|
|-- lib
|   |-- logger
|   |   |-- java
|   |   |   |-- synclite-logger-<version>.jar  ==> SyncLite Logger JDBC driver, to be added as a dependency in your edge apps
|   |   |-- synclite_logger.conf  ==> A sample configuration file for SyncLite Logger
|   |-- consolidator
|       |-- synclite-consolidator-<version>.war  ==> SyncLite Consolidator application, to be deployed on an application server such as Tomcat on a centralized host
|
|-- sample-apps
|   |-- jsp-servlet
|   |   |-- web
|   |       |-- src  ==> Source code of a sample JSP/Servlet app that demonstrates usage of SyncLite Logger
|   |       |-- target
|   |           |-- synclite-sample-app-<version>.war
|   |-- java
|   |   |-- *.java  ==> Java source files demonstrating SyncLite Logger usage
|   |   |-- README
|   |-- python
|       |-- *.py  ==> Python source files demonstrating SyncLite Logger usage
|       |-- README
|
|-- bin
|   |-- deploy.bat/deploy.sh  ==> Deployment script for deploying SyncLite Consolidator and the sample application from the lib directory
|   |-- start.bat/start.sh  ==> Launch script to start Tomcat and the deployed SyncLite applications
|   |-- docker-deploy.sh/docker-start.sh  ==> Deployment and launch scripts for running SyncLite Consolidator inside a Docker container
|   |-- stage
|   |   |-- sftp  ==> Contains docker-deploy.sh, docker-start.sh, docker-stop.sh scripts to launch an SFTP server as SyncLite stage
|   |   |-- minio  ==> Contains docker-deploy.sh, docker-start.sh, docker-stop.sh scripts to launch a MinIO server as SyncLite stage
|   |-- dst
|       |-- postgresql  ==> Contains docker-deploy.sh, docker-start.sh scripts to launch a PostgreSQL server as SyncLite destination DB
|       |-- mysql  ==> Contains docker-deploy.sh, docker-start.sh scripts to launch a MySQL server as SyncLite destination DB
|
|-- tools
    |-- synclite-client  ==> Client tool to execute SQL operations on SyncLite databases/devices
    |-- synclite-db  ==> A standalone database server offering sync-enabled embedded databases for edge/desktop applications
    |-- synclite-dbreader  ==> Smart database ETL/Replication/Migration tool
    |-- synclite-qreader  ==> Rapid IoT data connector tool
    |-- synclite-job-monitor  ==> Job Monitor tool to manage, monitor and schedule SyncLite jobs
    |-- synclite-validator  ==> An E2E integration testing tool for SyncLite
```

NOTE: The instructions below enable a quick start and trial of the SyncLite platform. For production usage, it is recommended to go through the installation process to install OpenJDK 11 and Tomcat 9 (as a service) on your Windows/Ubuntu host.

- Enter the bin directory.

- (One time) Run deploy.bat (Windows) / deploy.sh (Ubuntu) to deploy the SyncLite Consolidator and a SyncLite sample application, OR run docker-deploy.sh (Ubuntu) to deploy a Docker container for the SyncLite platform, OR manually deploy the following WAR files on your Tomcat server:

  - `SyncLite\target\synclite-platform-dev\lib\consolidator\synclite-consolidator-oss.war`
  - `SyncLite\target\synclite-platform-dev\sample-apps\jsp-servlet\web\target\synclite-sample-app-oss.war`
  - `SyncLite\target\synclite-platform-dev\tools\synclite-dbreader\synclite-dbreader-oss.war`
  - `SyncLite\target\synclite-platform-dev\tools\synclite-dbreader\synclite-qreader-oss.war`
  - `SyncLite\target\synclite-platform-dev\tools\synclite-dbreader\synclite-jobmonitor-oss.war`

- Run start.bat (Windows) / start.sh (Ubuntu) to start Tomcat and the deployed SyncLite applications (the username/password for the Tomcat manager web console is synclite/synclite), OR run docker-start.sh to run the Docker container (check the options passed to the docker run command; for example, the home directory of the current user is mapped to /root inside Docker so that all SyncLite storage persists on the native host), OR start the applications manually from your Tomcat manager console.

- Open the Tomcat manager console at http://localhost:8080/manager (when prompted, use synclite/synclite, the default user/password set by the deploy script). The manager web console shows all the deployed SyncLite applications.

- Open http://localhost:8080/synclite-consolidator to launch the SyncLite Consolidator application.

- Open http://localhost:8080/synclite-sample-app to launch the SyncLite sample web application.

- Configure and start a SyncLite Consolidator job in the SyncLite Consolidator application; you can step through the "Configure Job" wizard, reviewing all the default configuration values. Create databases/devices of any type from the deployed sample web application and execute SQL workloads on several devices at once by specifying a device index range. Observe data consolidation in the SyncLite Consolidator dashboard. You can check device-specific consolidation progress on individual device pages (from the "List Devices" page) and query the destination database on the "Analyze Data" page.

- This release also comes with a CLI client for SyncLite under tools/synclite-client. Run synclite-client.bat (Windows) / synclite-client.sh (Ubuntu) to start the client tool and execute SQL operations, which are not only executed and persisted on the native database but also consolidated by the SyncLite Consolidator into the destination DB.

  - Usage 1: `synclite-client.bat/synclite-client.sh` starts with DB = `<USER.HOME>/synclite/job1/db/test.db`, DEVICE_TYPE = SQLITE, CONFIG = `<USER.HOME>/synclite/db/synclite_logger.conf`

  - Usage 2: `synclite-client.bat/synclite-client.sh <path/to/db/file> --device-type <SQLITE|DUCKDB|DERBY|H2|HYPERSQL|STREAMING|SQLITE_APPENDER|DUCKDB_APPENDER|DERBY_APPENDER|H2_APPENDER|HYPERSQL_APPENDER> --synclite-logger-config <path/to/synclite/logger/config> --server <SyncLite DB Address>`

  - Note: If the --server switch is specified, the client connects to SyncLite DB to execute SQL statements; otherwise, it uses the embedded SyncLite Logger library to operate directly on the devices.

- This release also comes with the SyncLite DB server under tools/synclite-db. Run synclite-db.bat (Windows) / synclite-db.sh (Ubuntu) to start the SyncLite DB server, then connect to it using synclite-client to execute SQL operations, which are not only executed and persisted on the specified embedded database but also consolidated by the SyncLite Consolidator into the destination databases.

  - Usage 1: `synclite-db.bat/synclite-db.sh` starts SyncLite DB with default configurations.

  - Usage 2: `synclite-db.bat/synclite-db.sh --config <path/to/synclite-db/config>`

- Use stop.bat (Windows) / stop.sh (Linux) to stop the SyncLite Consolidator job (if running) and Tomcat, OR run docker-stop.sh to stop the Docker container.

- Refer to sample-apps/java and sample-apps/python and use any of them as a starting point to build your own application.

- You can install and use a database of your choice as the consolidation destination (instead of the default SQLite destination): PostgreSQL, MySQL, MongoDB, SQLite, DuckDB.

- This release also packages Docker scripts to set up PostgreSQL and MySQL as SyncLite destinations:

  - bin/dst/postgresql contains docker-deploy.sh, docker-start.sh and docker-stop.sh
  - bin/dst/mysql contains docker-deploy.sh, docker-start.sh and docker-stop.sh

- You can deploy your applications on remote hosts/devices and share the local-stage-directory of your respective SyncLite applications with the SyncLite Consolidator host via one of the following file staging storages:

- SFTP

- Amazon S3

- MinIO Object Storage Server

- Apache Kafka

- Microsoft OneDrive

- Google Drive

- NFS Sharing

- Local Network Sharing

Please check the documentation for setting up these staging storages for SyncLite: https://www.synclite.io/resources/documentation

- This release also packages Docker scripts to set up SFTP and MinIO servers as the SyncLite stage:

  - bin/stage/sftp contains docker-deploy.sh, docker-start.sh and docker-stop.sh
  - bin/stage/minio contains docker-deploy.sh, docker-start.sh and docker-stop.sh

  NOTE: These scripts contain default configurations. You must change the usernames and passwords and set up any additional security mechanisms on top of these basic setups.

- The SyncLite Docker scripts bin/docker-deploy.sh, bin/docker-start.sh and bin/docker-stop.sh contain two variables at the top to choose a stage and a destination:

  - STAGE: set it to SFTP or MINIO.
  - DST: set it to POSTGRESQL or MYSQL.

  Once you set STAGE and DST to appropriate values (e.g., SFTP and POSTGRESQL), the docker-deploy.sh and docker-start.sh scripts bring up Docker containers for the SyncLite Consolidator, the SFTP server and the PostgreSQL server. You are then all set to configure and start a SyncLite Consolidator job that consolidates data received from remote SyncLite applications (configured to connect to the SFTP stage) into the PostgreSQL server.

- After a successful trial, if you want to run another trial (for example, of a different scenario), stop the Docker containers and delete the contents under /home/synclite to start fresh.

- Open http://localhost:8080/synclite-dbreader (together with http://localhost:8080/synclite-consolidator) to set up database ETL/replication/migration pipelines.

- Open http://localhost:8080/synclite-qreader (together with http://localhost:8080/synclite-consolidator) to set up rapid IoT pipelines.

- Open http://localhost:8080/synclite-job-monitor to manage, monitor and schedule various SyncLite jobs.
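Switching trial scenarios means editing STAGE and DST consistently in all three Docker scripts. A small helper like the one below can do that. It is a sketch that assumes each variable is a plain `NAME=value` assignment at the start of a line in bin/docker-deploy.sh, bin/docker-start.sh and bin/docker-stop.sh, so check the scripts before relying on it; `set_script_vars` and `configure_scripts` are hypothetical helpers.

```python
import re
from pathlib import Path

# Allowed values for the variables at the top of the SyncLite Docker scripts.
ALLOWED = {"STAGE": {"SFTP", "MINIO"}, "DST": {"POSTGRESQL", "MYSQL"}}
SCRIPTS = ("docker-deploy.sh", "docker-start.sh", "docker-stop.sh")


def set_script_vars(script_text: str, **values: str) -> str:
    """Rewrite the first NAME=... line for each given variable (assumes plain shell assignments)."""
    for name, value in values.items():
        if value not in ALLOWED.get(name, set()):
            raise ValueError(f"invalid {name}: {value}")
        pattern = re.compile(rf"^{name}=.*$", re.MULTILINE)
        script_text, count = pattern.subn(f"{name}={value}", script_text, count=1)
        if count == 0:
            raise ValueError(f"no {name}= assignment found")
    return script_text


def configure_scripts(bin_dir: str, stage: str, dst: str) -> None:
    """Apply the same STAGE/DST choice to all three Docker scripts in bin_dir."""
    for name in SCRIPTS:
        path = Path(bin_dir) / name
        path.write_text(set_script_vars(path.read_text(), STAGE=stage, DST=dst))


if __name__ == "__main__":
    sample = "#!/bin/bash\nSTAGE=MINIO\nDST=MYSQL\necho $STAGE $DST\n"
    print(set_script_vars(sample, STAGE="SFTP", DST="POSTGRESQL"))
```

Validating against the allowed values before writing avoids silently starting containers for a stage or destination the scripts do not support.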

Refer to the documentation at https://www.synclite.io/resources/documentation for more details.
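When scripting trials, it can help to assemble the synclite-client command line programmatically. The sketch below builds the argument list for Usage 2 of the client described in the quick start, using only the switches listed there (`--device-type`, `--synclite-logger-config`, `--server`) and the listed device types. `client_command` is a hypothetical helper, and the paths in the example are placeholders.

```python
import shlex

# Device types accepted by --device-type, as listed in the synclite-client usage.
DEVICE_TYPES = {
    "SQLITE", "DUCKDB", "DERBY", "H2", "HYPERSQL", "STREAMING",
    "SQLITE_APPENDER", "DUCKDB_APPENDER", "DERBY_APPENDER",
    "H2_APPENDER", "HYPERSQL_APPENDER",
}


def client_command(db_file, device_type, logger_config, server=None,
                   script="./synclite-client.sh"):
    """Return the argv list for synclite-client Usage 2; pass server to go through SyncLite DB."""
    if device_type not in DEVICE_TYPES:
        raise ValueError(f"unsupported device type: {device_type}")
    argv = [script, db_file, "--device-type", device_type,
            "--synclite-logger-config", logger_config]
    if server is not None:
        argv += ["--server", server]
    return argv


if __name__ == "__main__":
    cmd = client_command("/data/test.db", "DUCKDB", "/data/synclite_logger.conf")
    print(shlex.join(cmd))
    # Run it from tools/synclite-client, e.g. with subprocess.run(cmd, check=True).
```

Returning an argument list rather than a single string lets `subprocess.run` pass paths with spaces safely, without shell quoting.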

NOTE: For production usage, it is recommended to install OpenJDK 11 and Tomcat as a service (or any other application server of your choice) and deploy the SyncLite Consolidator web archive from the release. Please refer to our documentation at www.synclite.io for detailed installation steps for Windows and Ubuntu.

Add the `synclite-logger-<version>.jar` file created by the above build as a dependency of your application.

Refer to the src/main/resources/synclite_logger.conf file for all available SyncLite Logger configuration options, and to the "SyncLite Logger Configuration" section of the documentation at https://www.synclite.io/resources/documentation for details on each option.
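To inspect or generate per-device configuration files from code, a small parser helps. The sketch below assumes the file uses simple `key=value` lines with `#` comments; verify this against the sample synclite_logger.conf. The keys in the example (`local-data-stage-directory`, `destination-type`) are illustrative assumptions, not a statement of which options SyncLite Logger supports.

```python
def parse_conf(text: str) -> dict:
    """Parse key=value lines, skipping blank lines and # comments (assumed file format)."""
    conf = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"line {lineno}: expected key=value, got {raw!r}")
        conf[key.strip()] = value.strip()  # later duplicates override earlier ones
    return conf


def render_conf(conf: dict) -> str:
    """Serialize a dict back to key=value lines."""
    return "".join(f"{key}={value}\n" for key, value in conf.items())


if __name__ == "__main__":
    sample = """
# Hypothetical example values
local-data-stage-directory=/home/user/synclite/stage
destination-type=FS
"""
    conf = parse_conf(sample)
    conf["local-data-stage-directory"] = "/mnt/shared/stage"  # e.g. a per-device override
    print(render_conf(conf))
```

Splitting only on the first `=` keeps values that themselves contain `=` (such as URLs with query strings) intact.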

Refer to the code samples below to build applications using SyncLite Logger.

Transactional devices (SQLite, DuckDB, Apache Derby, H2, HyperSQL) support all database operations and perform transactional logging of all DDL and DML operations performed by the application. They enable use cases such as data-intensive sync-ready applications for the edge, edge + cloud Gen AI search and RAG applications, native SQL hot data stores, SQL application caches, edge enablement of cloud databases, and more.

```java
package testApp;

import java.nio.file.Path;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.sql.Statement;

import io.synclite.logger.*;

public class TestTransactionalDevice {

    public static Path syncLiteDBPath;

    public static void appStartup() throws SQLException, ClassNotFoundException {
        syncLiteDBPath = Path.of(System.getProperty("user.home"), "synclite", "db");

        // Load the SyncLite SQLite driver.
        // For other types of transactional devices:
        //   DuckDB       : Class.forName("io.synclite.logger.DuckDB");
        //   Apache Derby : Class.forName("io.synclite.logger.Derby");
        //   H2           : Class.forName("io.synclite.logger.H2");
        //   HyperSQL     : Class.forName("io.synclite.logger.HyperSQL");
        Class.forName("io.synclite.logger.SQLite");

        // Initialize the device with its database path and the SyncLite Logger configuration.
        // For other types of transactional devices:
        //   DuckDB       : DuckDB.initialize(dbPath, syncLiteDBPath.resolve("synclite_logger.conf"));
        //   Apache Derby : Derby.initialize(dbPath, syncLiteDBPath.resolve("synclite_logger.conf"));
        //   H2           : H2.initialize(dbPath, syncLiteDBPath.resolve("synclite_logger.conf"));
        //   HyperSQL     : HyperSQL.initialize(dbPath, syncLiteDBPath.resolve("synclite_logger.conf"));
        Path dbPath = syncLiteDBPath.resolve("test_tran.db");
        SQLite.initialize(dbPath, syncLiteDBPath.resolve("synclite_logger.conf"));
    }

    public void myAppBusinessLogic() throws SQLException {
        // Some application business logic ...

        // Perform some database operations on the device initialized in appStartup().
        // For other types of transactional devices, use the following connection strings:
        //   DuckDB       : jdbc:synclite_duckdb:<db_path>
        //   Apache Derby : jdbc:synclite_derby:<db_path>
        //   H2           : jdbc:synclite_h2:<db_path>
        //   HyperSQL     : jdbc:synclite_hsqldb:<db_path>
        Path dbPath = syncLiteDBPath.resolve("test_tran.db");
        try (Connection conn = DriverManager.getConnection("jdbc:synclite_sqlite:" + dbPath)) {
            try (Statement stmt = conn.createStatement()) {
                // Example of executing a DDL: CREATE TABLE.
                // You can also execute other DDL operations: DROP TABLE, ALTER TABLE, RENAME TABLE.
                stmt.execute("CREATE TABLE IF NOT EXISTS feedback(rating INT, comment TEXT)");
                // Example of performing an INSERT.
                stmt.execute("INSERT INTO feedback VALUES(3, 'Good product')");
            }

            // Turn auto-commit OFF to group the following DML operations into one transaction.
            conn.setAutoCommit(false);
            try (Statement stmt = conn.createStatement()) {
                // Example of performing basic DML operations: INSERT/UPDATE/DELETE.
                stmt.execute("UPDATE feedback SET comment = 'Better product' WHERE rating = 3");
                stmt.execute("INSERT INTO feedback VALUES (1, 'Poor product')");
                stmt.execute("DELETE FROM feedback WHERE rating = 1");
            }
            conn.commit();
            conn.setAutoCommit(true);

            // Example of PreparedStatement batching for bulk inserts.
            try (PreparedStatement pstmt = conn.prepareStatement("INSERT INTO feedback VALUES(?, ?)")) {
                pstmt.setInt(1, 4);
                pstmt.setString(2, "Excellent Product");
                pstmt.addBatch();
                pstmt.setInt(1, 5);
                pstmt.setString(2, "Outstanding Product");
                pstmt.addBatch();
                pstmt.executeBatch();
            }
        }

        // Close the SyncLite database/device cleanly, using the same path it was initialized with.
        // For other types of transactional devices: DuckDB.closeDevice, Derby.closeDevice,
        // H2.closeDevice, HyperSQL.closeDevice.
        // You can also close all open databases with a single SQL statement: CLOSE ALL DATABASES
        SQLite.closeDevice(dbPath);
    }

    public static void main(String[] args) throws ClassNotFoundException, SQLException {
        appStartup();
        TestTransactionalDevice testApp = new TestTransactionalDevice();
        testApp.myAppBusinessLogic();
    }
}
```

```python
import jaydebeapi

# Connection properties passed to the SyncLite Logger driver.
props = {
    "config": "synclite_logger.conf",
    "device-name": "tran1",
}

db_path = "c:\\synclite\\python\\data\\test_sqlite.db"

# For other types of transactional devices, use the following class names and connection strings:
#   DuckDB       - Class: io.synclite.logger.DuckDB,    Connection string: jdbc:synclite_duckdb:<db_path>
#   Apache Derby - Class: io.synclite.logger.Derby,     Connection string: jdbc:synclite_derby:<db_path>
#   H2           - Class: io.synclite.logger.H2,        Connection string: jdbc:synclite_h2:<db_path>
#   HyperSQL     - Class: io.synclite.logger.HyperSQL,  Connection string: jdbc:synclite_hsqldb:<db_path>
conn = jaydebeapi.connect("io.synclite.logger.SQLite",
                          "jdbc:synclite_sqlite:" + db_path,
                          props,
                          "synclite-logger-<version>.jar")

curs = conn.cursor()

# Example of executing a DDL: CREATE TABLE.
# You can also execute other DDL operations: DROP TABLE, ALTER TABLE, RENAME TABLE.
curs.execute('CREATE TABLE IF NOT EXISTS feedback(rating INT, comment TEXT)')

# Example of performing basic DML operations: INSERT/UPDATE/DELETE.
curs.execute("insert into feedback values (3, 'Good product')")

# Turn auto-commit OFF to group the following DML operations into one transaction.
conn.jconn.setAutoCommit(False)
curs.execute("update feedback set comment = 'Better product' where rating = 3")
curs.execute("insert into feedback values (1, 'Poor product')")
curs.execute("delete from feedback where rating = 1")
conn.commit()
conn.jconn.setAutoCommit(True)

# Example of PreparedStatement-style bulk insert.
args = [[4, 'Excellent product'], [5, 'Outstanding product']]
curs.executemany("insert into feedback values (?, ?)", args)

# Close the SyncLite database/device cleanly.
curs.execute("close database " + db_path)

# You can also close all open databases with a single SQL statement: CLOSE ALL DATABASES
```

Appender devices (SQLiteAppender, DuckDBAppender, DerbyAppender, H2Appender, HyperSQLAppender) support all DDL operations and PreparedStatement-based INSERT operations, and are highly optimized for high-speed, concurrent, batched data ingestion, logging all ingested data. Unlike transactional devices, appender devices allow only INSERT DML operations (UPDATE and DELETE are not allowed). Appender devices let developers build high-volume streaming applications with last-mile data integration from thousands of edge points into centralized database destinations, as well as in-app analytics through fast read access to the ingested data in the underlying local embedded databases.

```java
package testApp;

import java.nio.file.Path;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.sql.Statement;

import io.synclite.logger.*;

public class TestAppenderDevice {

    public static Path syncLiteDBPath;

    public static void appStartup() throws SQLException, ClassNotFoundException {
        syncLiteDBPath = Path.of(System.getProperty("user.home"), "synclite", "db");

        // Load the SyncLite SQLite appender driver.
        // For other types of appender devices:
        //   DuckDB       : Class.forName("io.synclite.logger.DuckDBAppender");
        //   Apache Derby : Class.forName("io.synclite.logger.DerbyAppender");
        //   H2           : Class.forName("io.synclite.logger.H2Appender");
        //   HyperSQL     : Class.forName("io.synclite.logger.HyperSQLAppender");
        Class.forName("io.synclite.logger.SQLiteAppender");

        Path dbPath = syncLiteDBPath.resolve("test_appender.db");
        SQLiteAppender.initialize(dbPath, syncLiteDBPath.resolve("synclite_logger.conf"));
    }

    public void myAppBusinessLogic() throws SQLException {
        // Some application business logic ...

        // Perform some database operations on the device initialized in appStartup().
        // For other types of appender devices, use the following connection strings:
        //   DuckDBAppender   : jdbc:synclite_duckdb_appender:<db_path>
        //   DerbyAppender    : jdbc:synclite_derby_appender:<db_path>
        //   H2Appender       : jdbc:synclite_h2_appender:<db_path>
        //   HyperSQLAppender : jdbc:synclite_hsqldb_appender:<db_path>
        Path dbPath = syncLiteDBPath.resolve("test_appender.db");
        try (Connection conn = DriverManager.getConnection("jdbc:synclite_sqlite_appender:" + dbPath)) {
            try (Statement stmt = conn.createStatement()) {
                // Example of executing a DDL: CREATE TABLE.
                // You can also execute other DDL operations: DROP TABLE, ALTER TABLE, RENAME TABLE.
                stmt.execute("CREATE TABLE IF NOT EXISTS feedback(rating INT, comment TEXT)");
            }

            // Appender devices allow all DDL operations, INSERT DML operations and SELECT queries
            // (UPDATE and DELETE are not allowed). Use PreparedStatement batching for bulk inserts.
            try (PreparedStatement pstmt = conn.prepareStatement("INSERT INTO feedback VALUES(?, ?)")) {
                pstmt.setInt(1, 4);
                pstmt.setString(2, "Excellent Product");
                pstmt.addBatch();
                pstmt.setInt(1, 5);
                pstmt.setString(2, "Outstanding Product");
                pstmt.addBatch();
                pstmt.executeBatch();
            }
        }

        // Close the SyncLite database/device cleanly, using the same path it was initialized with.
        // For other types of appender devices: DuckDBAppender.closeDevice, DerbyAppender.closeDevice,
        // H2Appender.closeDevice, HyperSQLAppender.closeDevice.
        // You can also close all open databases/devices with a single SQL statement: CLOSE ALL DATABASES
        SQLiteAppender.closeDevice(dbPath);
    }

    public static void main(String[] args) throws ClassNotFoundException, SQLException {
        appStartup();
        TestAppenderDevice testApp = new TestAppenderDevice();
        testApp.myAppBusinessLogic();
    }
}
```

```python
import jaydebeapi

# Connection properties passed to the SyncLite Logger driver.
props = {
    "config": "synclite_logger.conf",
    "device-name": "appender1",
}

db_path = "c:\\synclite\\python\\data\\test_appender.db"

# For other types of appender devices, use the following class names and connection strings:
#   DuckDBAppender   - Class: io.synclite.logger.DuckDBAppender,   Connection string: jdbc:synclite_duckdb_appender:<db_path>
#   DerbyAppender    - Class: io.synclite.logger.DerbyAppender,    Connection string: jdbc:synclite_derby_appender:<db_path>
#   H2Appender       - Class: io.synclite.logger.H2Appender,       Connection string: jdbc:synclite_h2_appender:<db_path>
#   HyperSQLAppender - Class: io.synclite.logger.HyperSQLAppender, Connection string: jdbc:synclite_hsqldb_appender:<db_path>
conn = jaydebeapi.connect("io.synclite.logger.SQLiteAppender",
                          "jdbc:synclite_sqlite_appender:" + db_path,
                          props,
                          "synclite-logger-<version>.jar")

curs = conn.cursor()

# Example of executing a DDL: CREATE TABLE.
# You can also execute other DDL operations: DROP TABLE, ALTER TABLE, RENAME TABLE.
curs.execute('CREATE TABLE IF NOT EXISTS feedback(rating INT, comment TEXT)')

# Example of PreparedStatement-style bulk insert (appender devices accept only INSERT DML).
args = [[4, 'Excellent product'], [5, 'Outstanding product']]
curs.executemany("insert into feedback values (?, ?)", args)

# Close the SyncLite database/device cleanly.
curs.execute("close database " + db_path)

# You can also close all open databases with a single SQL statement: CLOSE ALL DATABASES
```
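The INSERT-only rule for appender devices can be mimicked with Python's standard `sqlite3` module, which is handy for unit-testing application code that will later run against an appender device. This is a simplified illustration, not how SyncLite enforces the rule: `AppendOnlyConnection` and `AppendOnlyError` are hypothetical, and the check looks only at the leading keyword of each statement, so it does not catch every form of modification (for example `INSERT OR REPLACE`, or an UPDATE prefixed with a `WITH` clause).

```python
import re
import sqlite3

# Statements that appender devices reject; only the leading keyword is checked.
_BLOCKED = re.compile(r"^\s*(UPDATE|DELETE)\b", re.IGNORECASE)


class AppendOnlyError(Exception):
    """Raised when an UPDATE or DELETE statement is attempted."""


class AppendOnlyConnection:
    """Hypothetical sqlite3 wrapper that rejects UPDATE and DELETE, like an appender device."""

    def __init__(self, path: str = ":memory:"):
        self._conn = sqlite3.connect(path)

    def _check(self, sql: str) -> None:
        if _BLOCKED.match(sql):
            raise AppendOnlyError("UPDATE and DELETE are not allowed on appender devices")

    def execute(self, sql: str, params=()):
        self._check(sql)
        return self._conn.execute(sql, params)

    def executemany(self, sql: str, rows):
        self._check(sql)
        return self._conn.executemany(sql, rows)

    def commit(self) -> None:
        self._conn.commit()

    def close(self) -> None:
        self._conn.close()


if __name__ == "__main__":
    conn = AppendOnlyConnection()
    conn.execute("CREATE TABLE IF NOT EXISTS feedback(rating INT, comment TEXT)")
    conn.executemany("INSERT INTO feedback VALUES (?, ?)",
                     [(4, "Excellent product"), (5, "Outstanding product")])
    conn.commit()
    print(conn.execute("SELECT COUNT(*) FROM feedback").fetchone()[0])
    try:
        conn.execute("DELETE FROM feedback WHERE rating = 4")
    except AppendOnlyError as exc:
        print("rejected:", exc)
```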

Streaming devices allow all DDL operations (as supported by SQLite) and PreparedStatement-based INSERT operations (UPDATE and DELETE are not allowed) for high-speed, concurrent, batched data ingestion, logging and streaming the ingested data. Streaming devices enable developers to build high-volume data streaming applications with last-mile data integration from thousands of edge applications into one or more centralized databases, data warehouses or data lakes.

```java
package testApp;

import java.nio.file.Path;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.sql.Statement;

import io.synclite.logger.*;

public class TestStreamingDevice {

    public static Path syncLiteDBPath;

    public static void appStartup() throws SQLException, ClassNotFoundException {
        syncLiteDBPath = Path.of(System.getProperty("user.home"), "synclite", "db");

        // Load the SyncLite streaming driver and initialize the device.
        Class.forName("io.synclite.logger.Streaming");
        Path dbPath = syncLiteDBPath.resolve("t_str.db");
        Streaming.initialize(dbPath, syncLiteDBPath.resolve("synclite_logger.conf"));
    }

    public void myAppBusinessLogic() throws SQLException {
        // Some application business logic ...

        // Perform some database operations on the device initialized in appStartup().
        Path dbPath = syncLiteDBPath.resolve("t_str.db");
        try (Connection conn = DriverManager.getConnection("jdbc:synclite_streaming:" + dbPath)) {
            try (Statement stmt = conn.createStatement()) {
                // Example of executing a DDL: CREATE TABLE.
                // You can also execute other DDL operations: DROP TABLE, ALTER TABLE, RENAME TABLE.
                stmt.execute("CREATE TABLE IF NOT EXISTS feedback(rating INT, comment TEXT)");
            }

            // Example of PreparedStatement batching for bulk inserts.
            try (PreparedStatement pstmt = conn.prepareStatement("INSERT INTO feedback VALUES(?, ?)")) {
                pstmt.setInt(1, 4);
                pstmt.setString(2, "Excellent Product");
                pstmt.addBatch();
                pstmt.setInt(1, 5);
                pstmt.setString(2, "Outstanding Product");
                pstmt.addBatch();
                pstmt.executeBatch();
            }
        }

        // Close the SyncLite database/device cleanly, using the same path it was initialized with.
        // You can also close all open databases/devices with a single SQL statement: CLOSE ALL DATABASES
        Streaming.closeDevice(dbPath);
    }

    public static void main(String[] args) throws ClassNotFoundException, SQLException {
        appStartup();
        TestStreamingDevice testApp = new TestStreamingDevice();
        testApp.myAppBusinessLogic();
    }
}
```

```python
import jaydebeapi

# Connection properties passed to the SyncLite Logger driver.
props = {
    "config": "synclite_logger.conf",
    "device-name": "streaming1",
}

db_path = "c:\\synclite\\python\\data\\t_str.db"

conn = jaydebeapi.connect("io.synclite.logger.Streaming",
                          "jdbc:synclite_streaming:" + db_path,
                          props,
                          "synclite-logger-<version>.jar")

curs = conn.cursor()

# Example of executing a DDL: CREATE TABLE.
# You can also execute other DDL operations: DROP TABLE, ALTER TABLE, RENAME TABLE.
curs.execute('CREATE TABLE IF NOT EXISTS feedback(rating INT, comment TEXT)')

# Example of PreparedStatement-style bulk insert.
args = [[4, 'Excellent product'], [5, 'Outstanding product']]
curs.executemany("insert into feedback values (?, ?)", args)

# Close the SyncLite database/device cleanly.
curs.execute("close database " + db_path)

# You can also close all open databases with a single SQL statement: CLOSE ALL DATABASES
```

package testApp;

import java.util.Properties;

// Assumed: Producer and ProducerRecord are the Apache Kafka client types (kafka-clients library).
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;

import io.synclite.logger.*;

public class TestKafkaProducer {

    public static void main(String[] args) throws Exception {
        Properties props = new Properties();
        //
        // Set properties to use a staging storage of your choice, e.g. S3, MinIO, SFTP etc.,
        // where SyncLite logger will ship log files continuously for consumption by SyncLite consolidator.
        //
        Producer<String, String> producer = new io.synclite.logger.KafkaProducer(props);
        ProducerRecord<String, String> record = new ProducerRecord<>("test", "key", "value");
        //
        // You can use the same or different KafkaProducer objects to ingest data concurrently over multiple threads.
        //
        producer.send(record);
        producer.close();
    }
}
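The comment in the producer example states that the same or different KafkaProducer objects can be used to ingest data concurrently over multiple threads. A minimal sketch of the shared-instance variant with a fixed thread pool follows. Here `send` is a hypothetical stand-in for `producer.send(record)`, so the sketch runs without Kafka or SyncLite on the classpath; sharing one instance relies on the thread safety implied by that comment (Apache Kafka's own `KafkaProducer` is documented as thread safe).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BiConsumer;

public class ConcurrentSendSketch {

    /**
     * Sends recordsPerThread key/value pairs from each of `threads` workers
     * through one shared send function. Returns the total number of records sent.
     */
    public static int sendConcurrently(int threads, int recordsPerThread,
                                       BiConsumer<String, String> send) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<Integer>> results = new ArrayList<>();
            for (int t = 0; t < threads; t++) {
                final int worker = t;
                results.add(pool.submit(() -> {
                    for (int i = 0; i < recordsPerThread; i++) {
                        // Real app (hypothetically): producer.send(new ProducerRecord<>("test", key, value));
                        send.accept("worker" + worker + "-key" + i, "value" + i);
                    }
                    return recordsPerThread;
                }));
            }
            int total = 0;
            for (Future<Integer> f : results) {
                total += f.get(); // waits for the worker and rethrows any exception it raised
            }
            return total;
        } finally {
            pool.shutdown();
            pool.awaitTermination(10, TimeUnit.SECONDS);
        }
    }

    public static void main(String[] args) throws Exception {
        AtomicInteger received = new AtomicInteger();
        int sent = sendConcurrently(4, 250, (key, value) -> received.incrementAndGet());
        System.out.println(sent + " sent, " + received.get() + " received"); // 1000 sent, 1000 received
    }
}
```

Using one producer per thread instead only changes where the `send` target is created; the fan-out and the `Future.get()` error propagation stay the same.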

SyncLite DB is a sync-enabled, single-node database server that wraps popular embedded databases such as SQLite, DuckDB, Apache Derby, H2, and HyperSQL. Unlike the embeddable SyncLite Logger library for Java and Python applications, SyncLite DB runs as a standalone server, allowing edge or desktop applications written in any programming language to connect and send SQL requests (wrapped in JSON) via a REST API. This makes it well suited for seamless, real-time data synchronization in diverse environments.
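Because SyncLite DB accepts SQL requests as JSON over HTTP, any HTTP client can talk to it. The sketch below uses the JDK's built-in `java.net.http.HttpClient` (Java 11+) to send one JSON request body and return the response text. The server address is a constructor parameter because the real address comes from the SyncLite DB configuration; sending the body with POST and a `Content-Type: application/json` header, and treating non-2xx statuses as errors, are assumptions rather than documented behavior. The class name is hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

public class SyncLiteDbClientSketch {

    private final HttpClient client = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(5))
            .build();
    private final URI serverAddress;

    /** serverAddress: wherever the SyncLite DB server is configured to listen (assumed plain HTTP). */
    public SyncLiteDbClientSketch(URI serverAddress) {
        this.serverAddress = serverAddress;
    }

    /** Builds a POST request carrying one JSON-encoded SQL request (assumed transport details). */
    public HttpRequest buildRequest(String jsonBody) {
        return HttpRequest.newBuilder(serverAddress)
                .timeout(Duration.ofSeconds(30))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }

    /** Sends the request and returns the server's JSON response as text. */
    public String send(String jsonBody) throws IOException, InterruptedException {
        HttpResponse<String> response =
                client.send(buildRequest(jsonBody), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() / 100 != 2) { // assumption: failures use non-2xx status codes
            throw new IOException("HTTP " + response.statusCode() + ": " + response.body());
        }
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.err.println("usage: SyncLiteDbClientSketch <server-uri>");
            return;
        }
        SyncLiteDbClientSketch db = new SyncLiteDbClientSketch(URI.create(args[0]));
        // The Java literal below yields the JSON text {"db-path": "C:\\synclite\\test.db", ...}
        String body = "{\"db-path\": \"C:\\\\synclite\\\\test.db\", \"sql\": \"close\"}";
        System.out.println(db.send(body));
    }
}
```

Keeping request construction in `buildRequest` separates the transport assumptions from the JSON payload, so the payload format can evolve independently.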

- Go to the directory synclite-platform-<version>\tools\synclite-db.

- Check the configurations in synclite-db.conf and adjust them as per your needs.

- Run synclite-db.bat --config synclite-db.conf (or synclite-db.sh --config synclite-db.conf on Linux). This starts the SyncLite DB server listening at the address specified in its configuration.

- An application in your favorite programming language can establish a connection with the SyncLite DB server at the specified address and send requests in JSON format, as shown below:

  - Connect and initialize a device

    Request

    { "db-type" : "SQLITE", "db-path" : "C:\\synclite\\users\\bob\\synclite\\job1\\test.db", "synclite-logger-config" : "C:\\synclite\\users\\bob\\synclite\\job1\\synclite_logger.conf", "sql" : "initialize" }

    Response from Server

    { "result" : true, "message" : "Database initialized successfully" }

  - Send a SQL command to create a table

    Request

    { "db-path" : "C:\\synclite\\users\\bob\\synclite\\job1\\test.db", "sql" : "CREATE TABLE IF NOT EXISTS t1(a INT, b TEXT)" }

    Response from Server

    { "result" : "true", "message" : "Update executed successfully, rows affected: 0" }

  - Send a request to perform a batched insert into the created table

    Request

    { "db-path" : "C:\\synclite\\users\\bob\\synclite\\job1\\test.db", "sql" : "INSERT INTO t1(a,b) VALUES(?, ?)", "arguments" : [[1, "one"], [2, "two"]] }

    Response from Server

    { "result" : "true", "message" : "Batch executed successfully, rows affected: 2" }

  - Send a request to begin a transaction on the database

    Request

    { "db-path" : "C:\\synclite\\users\\bob\\synclite\\job1\\test.db", "sql" : "begin" }

    Response from Server

    { "result" : "true", "message" : "Transaction started successfully", "txn-handle" : "f47ac10b-58cc-4372-a567-0e02b2c3d479" }

  - Send a request to execute a SQL statement inside the started transaction, passing the returned txn-handle

    Request

    { "db-path" : "C:\\synclite\\users\\bob\\synclite\\job1\\test.db", "sql" : "INSERT INTO t1(a,b) VALUES(?, ?)", "txn-handle" : "f47ac10b-58cc-4372-a567-0e02b2c3d479", "arguments" : [[3, "three"], [4, "four"]] }

    Response from Server

    { "result" : "true", "message" : "Batch executed successfully, rows affected: 2" }

  - Send a request to commit the transaction

    Request

    { "db-path" : "C:\\synclite\\users\\bob\\synclite\\job1\\test.db", "txn-handle" : "f47ac10b-58cc-4372-a567-0e02b2c3d479", "sql" : "commit" }

    Response from Server

    { "result" : "true", "message" : "Transaction committed successfully" }

  - Send a request to close the database

    Request

    { "db-path" : "C:\\synclite\\users\\bob\\synclite\\job1\\test.db", "sql" : "close" }

    Response from Server

    { "result" : "true", "message" : "Database closed successfully" }

- SyncLite DB (which internally leverages SyncLite Logger) creates a device stage directory at the configured stage path, containing the SQL logs for each device. These device stage directories are continuously synchronized with SyncLite Consolidator, which consolidates them into the final destination databases.

- Several such hosts, each running SyncLite DB and each creating several SyncLite databases/devices (i.e. embedded databases), can synchronize these embedded databases in real time with a centralized SyncLite Consolidator, which aggregates the incoming data and changes into the configured destination databases.
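SyncLite DB request bodies must be valid JSON on the wire: backslashes in Windows paths must be escaped as `\\`, and members must be separated by commas. Building the bodies programmatically avoids hand-escaping mistakes. The sketch below is a minimal, dependency-free encoder for the request fields used in the SyncLite DB examples (`db-path`, `sql`, `txn-handle`, `arguments`), plus a regex helper that extracts the `txn-handle` from a `begin` response so it can be passed to later requests and the final `commit`. The class and method names are hypothetical, the regex assumes the `begin` response format shown in this document, and a production application would more likely use a JSON library such as Jackson or Gson.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class SyncLiteDbJsonSketch {

    /** Encodes a string as a JSON string literal, escaping quotes, backslashes and control characters. */
    public static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c)); // remaining control characters
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.append('"').toString();
    }

    /** Encodes null, strings, numbers, booleans, lists and maps (map keys in iteration order). */
    public static String toJson(Object value) {
        if (value == null) return "null";
        if (value instanceof String s) return quote(s);
        if (value instanceof Number || value instanceof Boolean) return value.toString();
        if (value instanceof List<?> list) {
            StringBuilder sb = new StringBuilder("[");
            for (int i = 0; i < list.size(); i++) {
                if (i > 0) sb.append(", ");
                sb.append(toJson(list.get(i)));
            }
            return sb.append(']').toString();
        }
        if (value instanceof Map<?, ?> map) {
            StringBuilder sb = new StringBuilder("{");
            boolean first = true;
            for (Map.Entry<?, ?> e : map.entrySet()) {
                if (!first) sb.append(", ");
                first = false;
                sb.append(quote(String.valueOf(e.getKey()))).append(": ").append(toJson(e.getValue()));
            }
            return sb.append('}').toString();
        }
        throw new IllegalArgumentException("Unsupported type: " + value.getClass());
    }

    /** Builds one request body; txnHandle and arguments may be null, in which case they are omitted. */
    public static String request(String dbPath, String sql, String txnHandle, List<List<Object>> arguments) {
        Map<String, Object> req = new LinkedHashMap<>();
        req.put("db-path", dbPath);
        req.put("sql", sql);
        if (txnHandle != null) req.put("txn-handle", txnHandle);
        if (arguments != null) req.put("arguments", arguments);
        return toJson(req);
    }

    private static final Pattern TXN_HANDLE = Pattern.compile("\"txn-handle\"\\s*:\\s*\"([^\"]+)\"");

    /** Extracts the txn-handle from a "begin" response, assuming the documented response shape. */
    public static Optional<String> txnHandle(String response) {
        Matcher m = TXN_HANDLE.matcher(response);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }

    public static void main(String[] args) {
        String path = "C:\\synclite\\users\\bob\\synclite\\job1\\test.db";
        System.out.println(request(path, "begin", null, null));
        // Sample "begin" response taken from the SyncLite DB example.
        String beginResponse = "{ \"result\" : \"true\", \"message\" : \"Transaction started successfully\", "
                + "\"txn-handle\": \"f47ac10b-58cc-4372-a567-0e02b2c3d479\" }";
        String handle = txnHandle(beginResponse).orElseThrow();
        System.out.println(request(path, "INSERT INTO t1(a,b) VALUES(?, ?)", handle,
                List.of(List.<Object>of(3, "three"), List.<Object>of(4, "four"))));
        System.out.println(request(path, "commit", handle, null));
    }
}
```

Running `main` prints the `begin`, batched `INSERT` and `commit` bodies of a single transaction, each with the path written as `C:\\synclite\\...` in the JSON text.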

SyncLite Validator is a GUI-based tool packaged as a WAR file deployed on an application server; it can be launched at http://localhost:8080/synclite-validator. A test job can be configured and run to execute all the end-to-end integration tests, which validate data consolidation functionality for the various SyncLite device types.

- SyncLite Logger is published as a Maven dependency:

<!-- https://mvnrepository.com/artifact/io.synclite/synclite-logger -->
<dependency>
    <groupId>io.synclite</groupId>
    <artifactId>synclite-logger</artifactId>
    <version>#LatestVersion#</version>
</dependency>

- Or, you can directly download the latest published synclite-logger-.jar from https://github.com/syncliteio/SyncLiteLoggerJava/blob/main/src/main/resources/ and add it as a dependency in your applications.

- A Docker image of SyncLite Consolidator is available on Docker Hub: https://hub.docker.com/r/syncliteio/synclite-consolidator

- Or, a release zip file can be downloaded from this GitHub repo: https://github.com/syncliteio/SyncLite/releases

Supported data sources:

- Edge Applications (Java/Python) + SyncLite Logger (wrapping embedded databases: SQLite, DuckDB, Apache Derby, H2, HyperSQL)

- Edge Applications (any programming language) + SyncLite DB (wrapping embedded databases: SQLite, DuckDB, Apache Derby, H2, HyperSQL)

- Databases: PostgreSQL, MySQL, MongoDB, SQLite

- Message Brokers: Eclipse Mosquitto MQTT broker

- Data Files: CSV (stored on FS/S3/MinIO)

Supported staging storages:

- Local FS

- SFTP

- S3

- MinIO

- Kafka

- Microsoft OneDrive

- Google Drive

Supported destinations:

- PostgreSQL

- MySQL

- MongoDB

- Microsoft SQL Server

- Apache Iceberg

- ClickHouse

- FerretDB

- SQLite

- DuckDB

SyncLite is backed by patented technology. More info: https://www.synclite.io/resources/patent

Join the Slack channel for support and discussions.

Contact: [email protected]