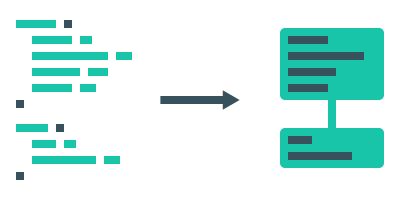

Instantly create or update a SQL database from a GraphQL API schema.

- Installation

- Programmatic Usage

- CLI Usage

- Cookbook

- Custom Scalar Mapping

- Custom logic with plugins

- Database compatibility

- Roadmap

npm i graphql-migrateThe migrate methods is able to create and update tables and columns. It will execute those steps:

- Read your database and construct an abstraction.

- Read your GraphQL schema and turn it to an equivalent abstraction.

- Compare both abstractions and generate database operations.

- Convert to SQL and execute the queries from operations using knex.

All the operations executed on the database will be wrapped in a transaction: if an error occurs, it will be fully rollbacked to the initial state.

migrate has the following arguments:

config: a knex config object to connect to the database.schema: a GraphQL schema object. You can usebuildSchemafromgraphql.options:dbSchemaName(default:'public'): table schema:<schemaName>.<tableName>.dbTablePrefix(default:''): table name prefix:<prefix><tableName>.dbColumnPrefix(default:''): column name prefix:<prefix><columnName>.updateComments(default:false): by default,migratewon't overwrite comments on table and columns. This forces comment overwritting.lowercaseNames(default:true): default table and column names will be lowercase.debug(default:false): displays debugging informations and SQL queries.

Example:

import { buildSchema } from 'graphql'

import { migrate } from 'graphql-migrate'

const config = {

client: 'pg',

connection: {

host: 'localhost',

user: 'some-user',

password: 'secret-password',

database: 'my-app',

},

}

const schema = buildSchema(`

type User {

id: ID!

name: String

messages: [Message]

contacts: [User]

}

type Message {

id: ID!

user: User

}

`)

migrate(config, schema, {

// Additional options here

}).then(() => {

console.log('Your database is up-to-date!')

})Available soon!

Schema annotations are parsed using graphql-annotations.

"""

A user.

"""

type User {

id: ID!

"""

Display name.

"""

name: String!

}"""

@db.skip

"""

type MutationResult {

success: Boolean!

}

type OtherType {

id: ID!

"""

@db.skip

"""

computedField: String

}"""

@db.oldNames: ['user']

"""

type People {

id: ID!

"""

@db.oldNames: ['name']

"""

nickname: String!

}type User {

"""

Not null

"""

name: String!

"""

Nullable

"""

nickname: String

}type User {

"""

@db.default: true

"""

someOption: Boolean

}By default, id fields of type ID will be the primary key on the table:

type User {

"""

This will get a primary index

"""

id: ID!

email: String!

}In this example, no primary key will be generated automatically:

type User {

"""

This will NOT get a primary index

"""

foo: ID!

"""

Neither will this

"""

id: String!

}You can disable the automatic primary key:

type User {

"""

@db.primary: false

"""

id: ID!

email: String!

}In this example, the primary key will be on email instead of id:

type User {

id: ID!

"""

@db.primary

"""

email: String!

}type User {

id: ID!

"""

@db.index

"""

email: String!

}type User {

"""

@db.index

"""

id: String!

"""

@db.index

"""

email: String!

}type User {

"""

@db.index: 'myIndex'

"""

email: String!

"""

@db.index: 'myIndex'

"""

name: String!

}You can also specify an index type on PostgresSQL or MySQL:

type User {

"""

@db.index: { name: 'myIndex', type: 'hash' }

"""

email: String!

"""

You don't need to specify the type again.

@db.index: 'myIndex'

"""

name: String!

}type User {

id: ID!

"""

@db.unique

"""

email: String!

}"""

@db.name: 'people'

"""

type User {

"""

@db.name: 'uuid'

"""

id: ID!

}type User {

"""

@db.type: 'string'

@db.length: 36

"""

id: ID!

}See knex schema builder methods for the supported types.

type User {

id: ID!

"""

@db.type: 'json'

"""

names: [String]

}You can set the mapListToJson option to automatically map scalar and enum lists to JSON:

const schema = buildSchema(`

type User {

names: [String]

}

`)

const adb = await generateAbstractDatabase(schema, {

mapListToJson: true,

})type User {

id: ID!

messages: [Message]

}

type Message {

id: ID!

user: User

}This will create the following tables:

{

user: {

id: uuid primary

},

message: {

id: uuid primary

user_foreign: uuid foreign key references 'user.id'

}

}type User {

id: ID!

"""

@db.manyToMany: 'users'

"""

messages: [Message]

}

type Message {

id: ID!

"""

@db.manyToMany: 'messages'

"""

users: [User]

}This will create an additional join table:

{

message_users_join_user_messages: {

users_foreign: uuid foreign key references 'message.id',

messages_foreign: uuid foreign key references 'user.id',

}

}type User {

id: ID!

contacts: [User]

}This will create an additional join table:

{

user_contacts_join_user_contacts: {

id_foreign: uuid foreign key references 'user.id',

id_foreign_other: uuid foreign key references 'user.id',

}

}To customize the scalar mapping, you can provide a function on the scalarMap option that gets field information and that returns a TableColumnDescriptor or null. Here is its signature:

type ScalarMap = (

field: GraphQLField<any, any>,

scalarType: GraphQLScalarType | null,

annotations: any,

) => TableColumnTypeDescriptor | nullHere is the TableColumnTypeDescriptor interface:

interface TableColumnTypeDescriptor {

/**

* Knex column builder function name.

*/

type: string

/**

* Builder function arguments.

*/

args: any[]

}Example:

migrate(config, schema, {

scalarMap: (field, scalarType, annotations) => {

if (scalarType && scalarType.name === 'Timestamp') {

return {

type: 'timestamp',

// useTz, precision

args: [true, undefined],

}

}

if (field.name === 'id' || annotations.type === 'uuid') {

return {

type: 'uuid',

args: [],

}

}

return null

}

})It's possible to write custom queries to be executed during migrations using Plugins.

Currently a plugin can only declare tap on the Writer system, with the write and tap methods:

import { MigratePlugin } from 'graphql-migrate'

class MyPlugin extends MigratePlugin {

write ({ tap }) {

tap('op-type', 'before', (op, transaction) => {

// or 'after'

})

}

}The arguments are:

operation: string, can be one of the following:table.createtable.renametable.comment.settable.droptable.index.createtable.index.droptable.primary.settable.unique.createtable.unique.droptable.foreign.createtable.foreign.dropcolumn.createcolumn.renamecolumn.altercolumn.drop

type: 'before' | 'after'callback: functionwhich get those parameters:operation: the operation object (see Operation.d.ts)transaction: the Knex SQL transaction

Then, instanciate the plugin in the plugins option array of the migrate method.

For example, let's say we have the following schema:

// old schema

const schema = buildSchema(`

type User {

id: ID!

fname: String

lname: String

}

`)Now we want to migrate the user table from two columns fname and lname into one:

fullname = fname + ' ' + lnameHere is the example code to achieve this:

import { buildSchema } from 'graphql'

import { migrate, MigratePlugin } from 'graphql-migrate'

const schema = buildSchema(`

type User {

id: ID!

"""

@db.oldNames: ['lname']

"""

fullname: String

}

`)

class MyPlugin extends MigratePlugin {

write ({ tap }) {

tap('column.drop', 'before', async (op, transaction) => {

// Check the table and column

if (op.table === 'user' && op.column === 'fname') {

// Update the users lname with fname + ' ' + lname

const users = await transaction

.select('id', 'fname', 'lname')

.from('user')

for (const user of users) {

await transaction('user')

.where({ id: user.id })

.update({

lname: `${user.fname} ${user.lname}`,

})

}

}

})

}

}

migrate(config, schema, {

plugins: [

new MyPlugin(),

],

})Let's describe what's going on -- we:

- Remove the

fnamefield from the schema. - Rename

lnametofullnamein the schema. - Annotate the

fullnamefield to indicate it's the new name oflname. - We declare a plugin that tap into the

column.dropwrite operation. - In this hook, we read the users and update each one of them to merge the two columns into

lnamebefore thefnamecolumn is dropped.

🙂 Contributions are welcome for SQL queries or testing!

| Icon | Meaning |

|---|---|

| ✔️ | Supported |

| ❓ | Not tested |

| - | Not implemented |

| ❌ | Not supported |

| Operation | pg | mysql | mssql | oracle | sqlite3 |

|---|---|---|---|---|---|

| Read tables | ✔️ | ❓ | ❓ | ❓ | ❓ |

| Read table comments | ✔️ | ❓ | - | - | ❌ |

| Read columns | ✔️ | ❓ | ❓ | ❓ | ❓ |

| Read column types | ✔️ | ❓ | ❓ | ❓ | ❓ |

| Read column comments | ✔️ | - | - | - | ❌ |

| Read column default values | ✔️ | ❓ | ❓ | ❓ | ❓ |

| Read foreign keys | ✔️ | - | - | - | - |

| Read primary keys | ✔️ | - | - | - | - |

| Read index | ✔️ | - | - | - | - |

| Read unique constraint | ✔️ | - | - | - | - |

| Write tables | ✔️ | ❓ | ❓ | ❓ | ❓ |

| Write table comments | ✔️ | ❓ | ❓ | ❓ | ❌ |

| Write columns | ✔️ | ❓ | ❓ | ❓ | ❓ |

| Write column comments | ✔️ | ❓ | ❓ | ❓ | ❌ |

| Write foreign keys | ✔️ | ❓ | ❓ | ❓ | ❓ |

| Write primary keys | ✔️ | ❓ | ❓ | ❓ | ❓ |

| Write index | ✔️ | ❓ | ❓ | ❓ | ❓ |

| Write unique constraint | ✔️ | ❓ | ❓ | ❓ | ❓ |