- XNLI is an evaluation corpus for language transfer and cross-lingual sentence classification in 15 languages.

- mT5 is pretrained on the mC4 corpus, which covers 101 languages.

- mT5 was pre-trained on mC4 only, without any supervised training. Therefore, the model has to be fine-tuned before it is usable on a downstream task, for example language classification.
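To make the fine-tuning target concrete, here is a minimal sketch of how XNLI-style rows could be turned into text-to-text pairs for language classification. It assumes the TSV has `language` and `sentence1` columns; the `language: ` prefix and the function name are hypothetical, and the notebook's actual preprocessing may differ.

```python
import csv
import io


def make_language_id_examples(tsv_text, prefix="language: "):
    """Turn XNLI-style TSV text into (input_text, target_text) pairs.

    Assumes `language` and `sentence1` columns; the prefix is illustrative.
    """
    reader = csv.DictReader(
        io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE
    )
    examples = []
    for row in reader:
        sentence = (row.get("sentence1") or "").strip()
        language = (row.get("language") or "").strip()
        # Skip rows that are missing either the sentence or its language code.
        if sentence and language:
            examples.append((prefix + sentence, language))
    return examples


# Tiny in-memory sample standing in for a file such as xnli.dev.tsv.
sample = "language\tsentence1\nhi\tचलो पार्क चलते हैं\nru\tПойдем в парк\n"
pairs = make_language_id_examples(sample)
for input_text, target_text in pairs:
    print(input_text, "->", target_text)
```

The model then learns to generate the target string (the language code) from the input string, which is the same text-to-text setup mT5 uses for every task.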

- `data` folder contains `xnli.test.tsv` and `xnli.dev.tsv`. These files can also be downloaded from here.
- `output` folder contains the outputs produced by the trained model. I trained the model on a TPU; a GPU can also be used for fine-tuning, but it is slower.
- `models` folder contains the model checkpoints and the latest model.
- The trained model can be found on this drive link.
- After downloading the trained model, extract it here in the file structure and rename the folder to `models`.

- `pip install -r requirements.txt`

- Open the `XNLI_MT5Small_Finetuned` notebook and run the cells one by one.
- If using a TPU, set the flag `ON_TPU` to true.
- Also if using a TPU, set `MAIN_DIR` and `PATH_TO_DATA` to your respective GCS bucket.
- If you are not training the model again, download the model from the drive link and run the last cell for the predictions.

- The trained model can be found on this drive link.
- Extract the model and rename the folder to `models/`.
- Note: Make sure that the task is registered in the `TaskRegistry`.
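The flag and path settings above could be wired together roughly as follows. This is only a sketch: `RunConfig`, `build_config`, the local `./data` layout, and the `/data` suffix inside the bucket are assumptions for illustration, not the notebook's actual code. The batch sizes are the ones reported in this README (32 on GPU, 128 on TPU).

```python
from dataclasses import dataclass


@dataclass
class RunConfig:
    on_tpu: bool
    main_dir: str       # where checkpoints and outputs are written
    path_to_data: str   # where the XNLI TSV files are read from
    batch_size: int


def build_config(on_tpu, bucket=None):
    """Pick directories and batch size depending on the accelerator."""
    if on_tpu:
        # A TPU run reads and writes through a GCS bucket, not local disk.
        if not bucket or not bucket.startswith("gs://"):
            raise ValueError("On TPU, pass a GCS bucket URI such as gs://<your-bucket>")
        base = bucket.rstrip("/")
        return RunConfig(True, base, base + "/data", 128)
    # Local (GPU) run: assumed to use the folders of this repository.
    return RunConfig(False, ".", "./data", 32)


ON_TPU = False
cfg = build_config(ON_TPU)
MAIN_DIR, PATH_TO_DATA = cfg.main_dir, cfg.path_to_data
print(cfg)
```

Failing early on a missing bucket keeps a TPU run from starting with local paths it cannot read.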

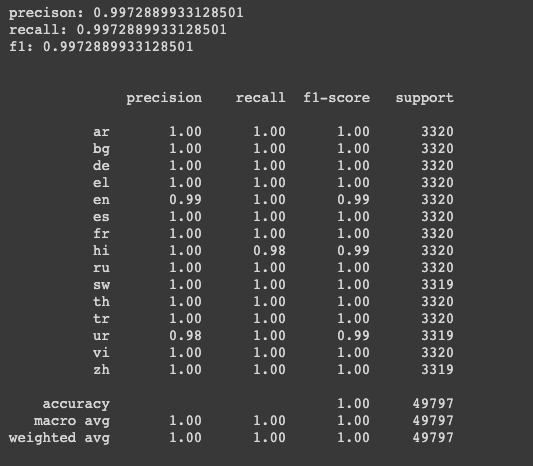

The model trained with the following specification produced these results:

- Learning Rate = 0.003

- Batch Size = 32 on GPU, 128 on TPU

- Epochs = 5

- Result: 100% correct predictions on the four custom sentences below.

    inputs = [
        "चलो पार्क चलते हैं",
        "Hãy đến công viên",
        "Vamos a aparcar",
        "Пойдем в парк",
    ]

Predictions:

    b'hi'
    b'vi'
    b'es'
    b'ru'
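The predictions come back as byte strings, so a small post-processing step is needed before comparing them with the expected language codes. A minimal sketch follows; the helper names are hypothetical, and the `expected` list is written by hand from the four inputs above rather than taken from the notebook.

```python
def decode_predictions(raw_predictions):
    """Convert byte-string predictions such as b'hi' into plain str labels."""
    return [
        p.decode("utf-8") if isinstance(p, bytes) else str(p)
        for p in raw_predictions
    ]


def accuracy(predicted, expected):
    """Fraction of predictions that exactly match the expected labels."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    if not expected:
        return 0.0
    return sum(p == e for p, e in zip(predicted, expected)) / len(expected)


raw = [b"hi", b"vi", b"es", b"ru"]        # model outputs shown above
expected = ["hi", "vi", "es", "ru"]       # hand-written labels for the inputs
labels = decode_predictions(raw)
print(labels, accuracy(labels, expected))
```

With only four sentences this is a sanity check, not an evaluation; the XNLI test split gives a more meaningful number.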

I was only able to train a single model because of my GCP limitations, so I couldn't thoroughly evaluate the model with different specifications.