Tristan Stevens, Hans van Gorp, Can Meral, Junseob Shin, Jason Yu, Jean-Luc Robert, Ruud van Sloun

Official repository of the paper Removing Structured Noise with Diffusion Models. The joint posterior sampling functions for diffusion models proposed in the paper can be found in sampling.py and guidance.py. For the interested reader, a more in-depth explanation of the method and its underlying principles can be found here. Information on how to set up the code and run inference can be found in the getting started section of this README.
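The core idea is to sample the clean signal and the structured noise jointly from their posterior given the observation. As a toy illustration only (this is not the code in sampling.py or guidance.py), the sketch below runs unadjusted Langevin dynamics on a scalar model y = x + n + ε. It assumes Gaussian priors for x and n, whose scores are known in closed form and stand in for the two learned diffusion models; the function name and all parameters are hypothetical.

```python
import math
import random


def joint_langevin(y, mu_x=0.0, var_x=1.0, mu_n=0.0, var_n=1.0, var_y=0.25,
                   step=0.01, n_steps=20000, burn_in=2000, seed=0):
    """Toy joint posterior sampler for p(x, n | y) with y = x + n + eps.

    Assumptions: x ~ N(mu_x, var_x), n ~ N(mu_n, var_n), eps ~ N(0, var_y).
    The Gaussian prior scores stand in for two learned score models.
    """
    rng = random.Random(seed)
    x, n = 0.0, 0.0
    xs, ns = [], []
    noise_scale = math.sqrt(2.0 * step)
    for t in range(n_steps):
        # Likelihood score: d/dx log p(y | x, n) equals d/dn log p(y | x, n).
        residual = (y - x - n) / var_y
        # Joint posterior score = prior score + likelihood score, per variable.
        grad_x = -(x - mu_x) / var_x + residual
        grad_n = -(n - mu_n) / var_n + residual
        # Langevin update of both variables, computed from the same (old) state.
        x, n = (x + step * grad_x + noise_scale * rng.gauss(0.0, 1.0),
                n + step * grad_n + noise_scale * rng.gauss(0.0, 1.0))
        if t >= burn_in:
            xs.append(x)
            ns.append(n)
    return xs, ns


xs, ns = joint_langevin(y=2.0)
print(sum(xs) / len(xs), sum(ns) / len(ns))
```

With these defaults and y = 2.0, the sample averages should land near the analytic Gaussian posterior mean var_x / (var_x + var_n + var_y) · y ≈ 0.89 for both x and n. In a diffusion-based sampler, the closed-form prior scores would be replaced by learned score networks.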

If you find the code useful for your research, please cite the paper:

@article{stevens2023removing,

title={Removing Structured Noise with Diffusion Models},

author={Stevens, Tristan S. W. and van Gorp, Hans

and Meral, Faik C. and Shin, Junseob and Yu, Jason

and Robert, Jean-Luc and van Sloun, Ruud J. G.},

journal={arXiv preprint arXiv:2302.05290},

year={2023}

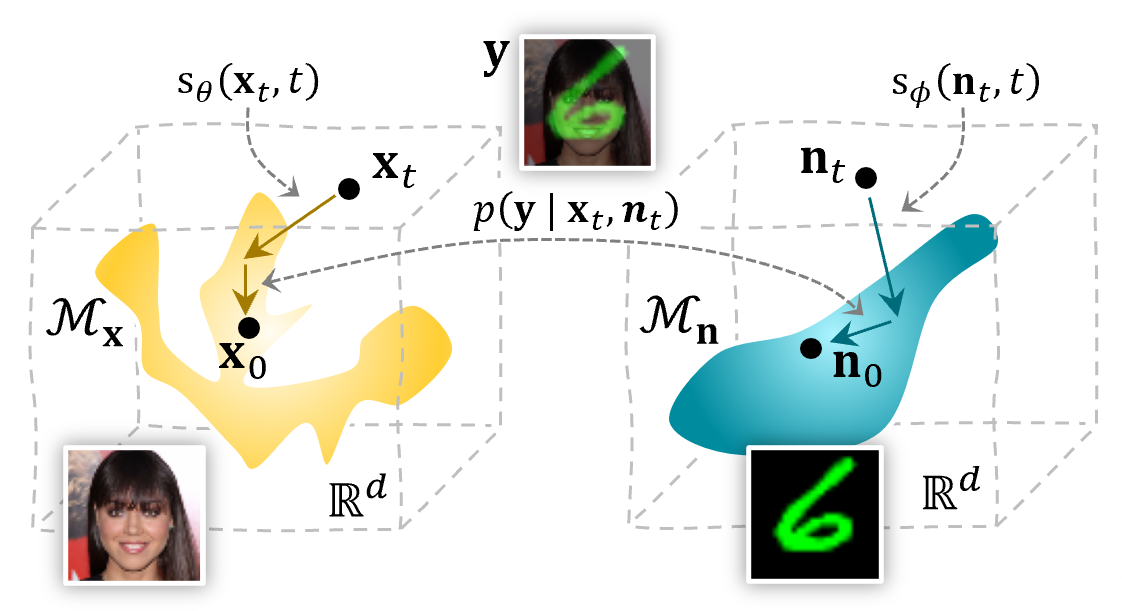

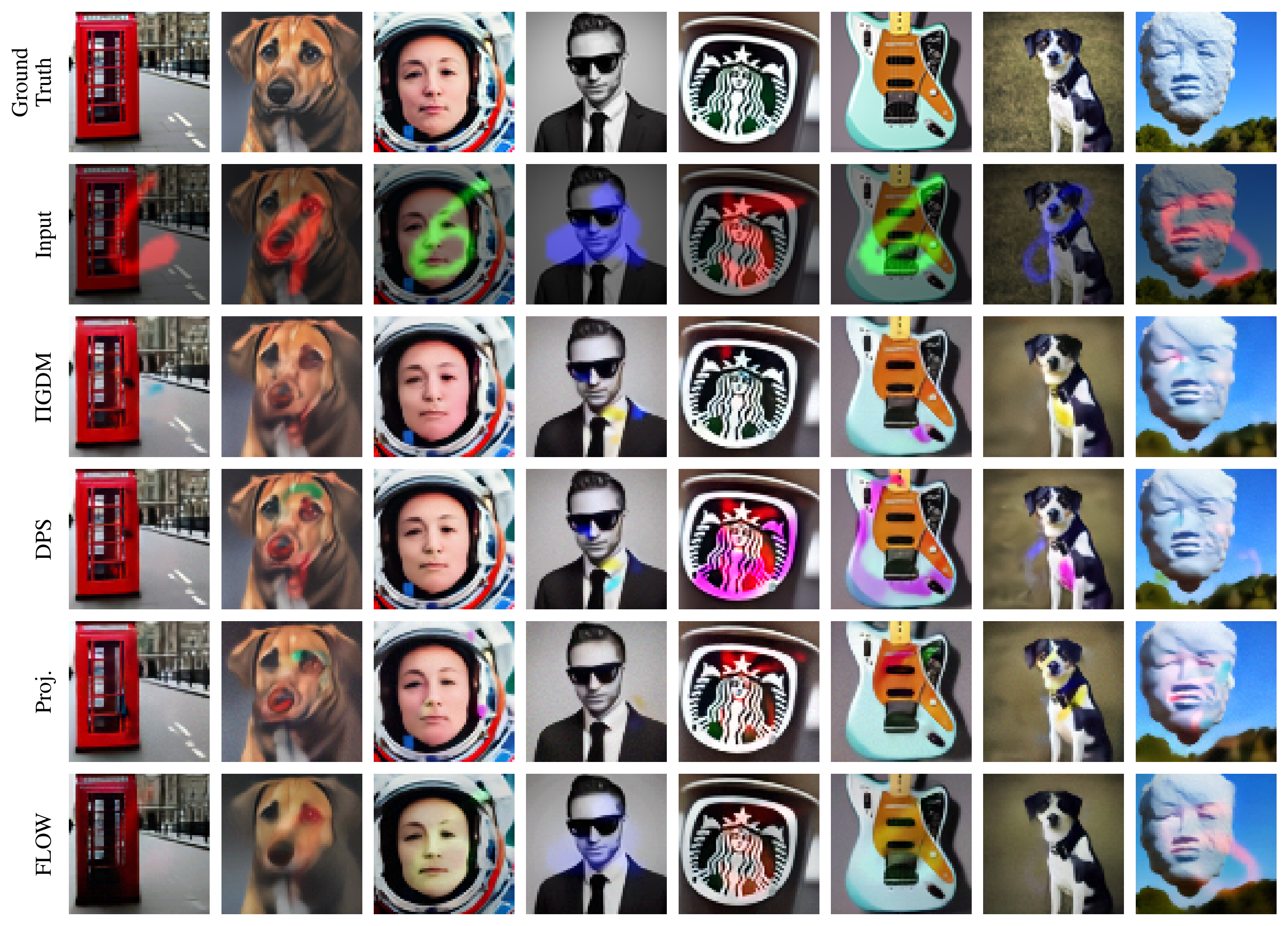

}Overview of the proposed joint posterior sampling method for removing structured noise using diffusion models.

Run the following command with keep_track set to true in the config to run the structured denoising and generate the animation.

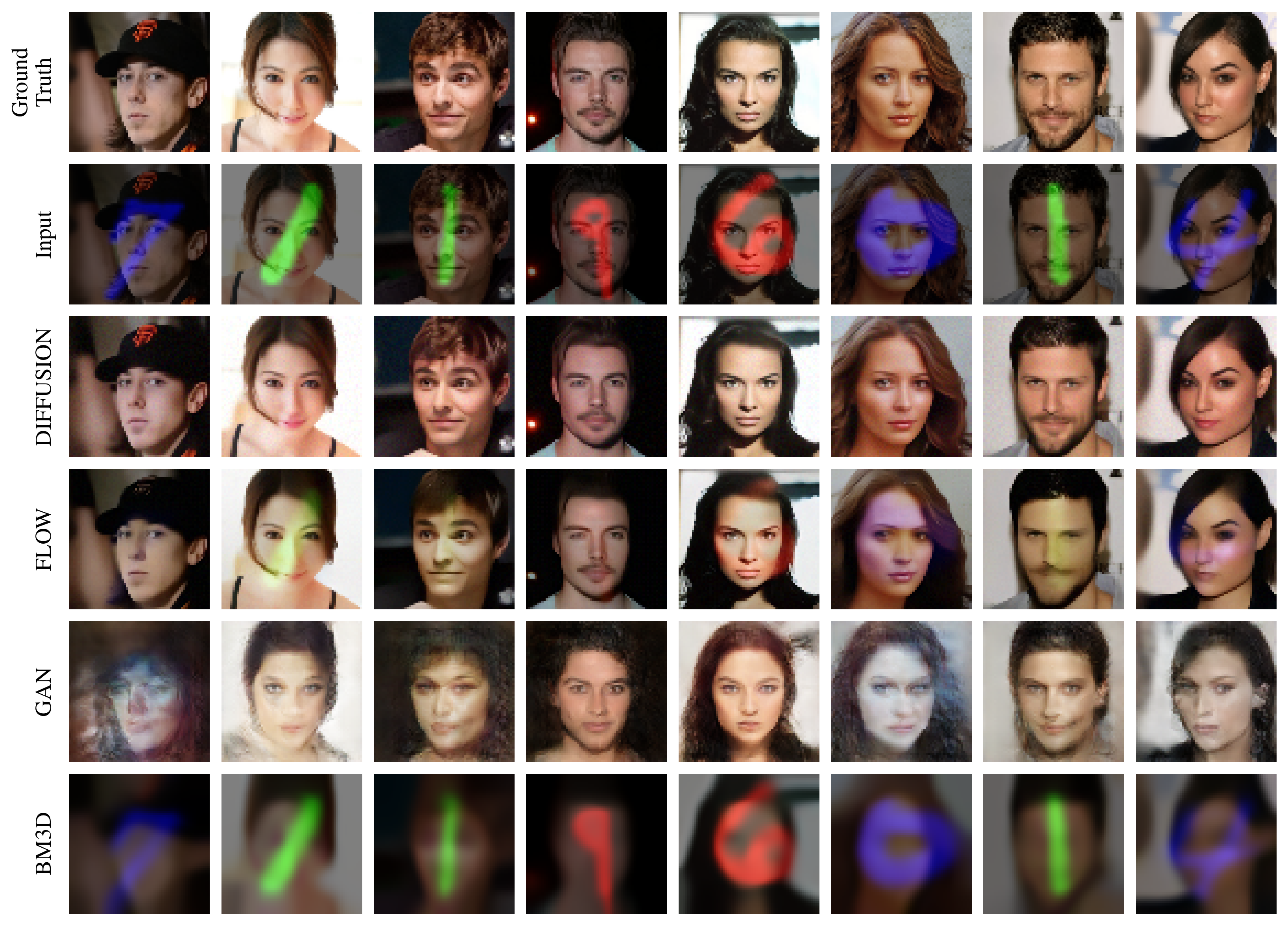

python inference.py -e paper/celeba_mnist_pigdm -t denoise -m sgmStructured denoising with the joint diffusion method.

|

|

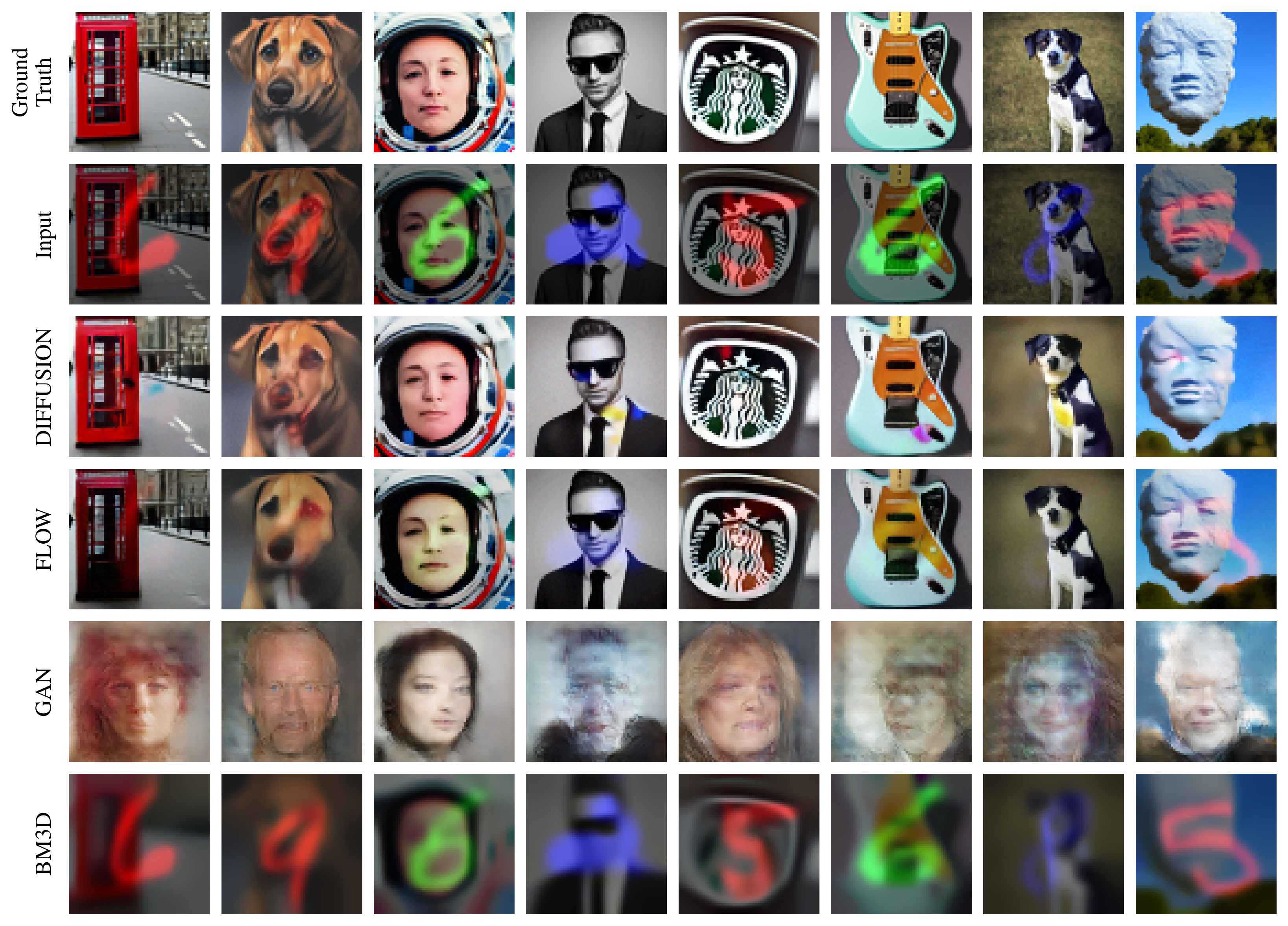

| CelebA | Out-of-distribution dataset |

|

|

| CelebA | Out-of-distribution dataset |

Install an environment with TensorFlow 2.10 and PyTorch, both with GPU support. All other dependencies are listed in the requirements folder. See the installation environment instructions for more details.

Pretrained weights should be downloaded automatically to the ./checkpoints folder, but can also be downloaded manually here. Each dataset has its own folder in the checkpoints directory, and each model has a nested folder inside its dataset folder. Besides the checkpoint, each model folder contains the training config (.yaml) of that model, which is required at inference time to rebuild the model.
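The checkpoint layout described above can be inspected with a short script. This is a minimal sketch assuming the structure `./checkpoints/<dataset>/<model>/` with the training config stored as a `.yaml` file in each model folder; `find_model_configs` is a hypothetical helper, not part of the repository.

```python
from pathlib import Path


def find_model_configs(checkpoint_dir="./checkpoints"):
    """Map (dataset, model) -> training config path.

    Assumed layout: <checkpoint_dir>/<dataset>/<model>/*.yaml
    Model folders without a .yaml file are skipped.
    """
    configs = {}
    root = Path(checkpoint_dir)
    for dataset_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for model_dir in sorted(p for p in dataset_dir.iterdir() if p.is_dir()):
            yaml_files = sorted(model_dir.glob("*.yaml"))
            if yaml_files:
                # Take the first config alphabetically if several are present.
                configs[(dataset_dir.name, model_dir.name)] = yaml_files[0]
    return configs
```

Calling `find_model_configs()` from the repository root would list which dataset/model combinations have a config available for rebuilding the model.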

Use the inference.py script for inference.

```
usage: inference.py [-h] [-e EXPERIMENT]
                    [-t {denoise,sequence_denoise,sample,evaluate,show_dataset,plot_results,run_metrics}]
                    [-n NUM_IMG] [-m [MODELS ...]] [-s SWEEP] [-ef EVAL_FOLDER]

options:
  -h, --help            show this help message and exit
  -e EXPERIMENT, --experiment EXPERIMENT
                        experiment name located at ./configs/inference/<experiment>
  -t {denoise,sequence_denoise,sample,evaluate,show_dataset,plot_results,run_metrics}, --task
                        which task to run
  -n NUM_IMG, --num_img NUM_IMG
                        number of images
  -m [MODELS ...], --models [MODELS ...]
                        list of models to run
  -s SWEEP, --sweep SWEEP
                        sweep config located at ./configs/sweeps/<sweep>
  -ef EVAL_FOLDER, --eval_folder EVAL_FOLDER
                        eval file located at ./results/<eval_folder>
```
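To make the command-line interface above concrete, here is a minimal `argparse` sketch that mirrors the documented flags. It is not the repository's parser: the `int` type for `--num_img`, the `None` defaults, and the `build_parser` name are assumptions made for illustration.

```python
import argparse

# Task names as listed in the help text above.
TASKS = ["denoise", "sequence_denoise", "sample", "evaluate",
         "show_dataset", "plot_results", "run_metrics"]


def build_parser():
    """Hypothetical parser mirroring the documented inference.py options."""
    parser = argparse.ArgumentParser(prog="inference.py")
    parser.add_argument("-e", "--experiment",
                        help="experiment name located at ./configs/inference/<experiment>")
    parser.add_argument("-t", "--task", choices=TASKS, help="which task to run")
    parser.add_argument("-n", "--num_img", type=int, help="number of images")
    # nargs="*" produces the "[-m [MODELS ...]]" usage shown above.
    parser.add_argument("-m", "--models", nargs="*", help="list of models to run")
    parser.add_argument("-s", "--sweep",
                        help="sweep config located at ./configs/sweeps/<sweep>")
    parser.add_argument("-ef", "--eval_folder",
                        help="eval file located at ./results/<eval_folder>")
    return parser


# Parse one of the example commands from this README (explicit list, not sys.argv).
demo = build_parser().parse_args(
    ["-e", "paper/celeba_mnist_pigdm", "-t", "denoise",
     "-m", "bm3d", "nlm", "glow", "gan", "sgm"])
print(demo.models)
```

Because `--models` takes a variable number of values, it stops consuming arguments at the next flag, so `-m sgm -s sgm_sweep` yields `models == ["sgm"]` and `sweep == "sgm_sweep"`.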

Example: main experiment with CelebA data and MNIST corruption:

```shell
python inference.py -e paper/celeba_mnist_pigdm -t denoise -m bm3d nlm glow gan sgm
```

Denoising comparison with multiple models:

```shell
python inference.py -e paper/mnist_denoising -t denoise -m bm3d nlm glow gan sgm
```

Or, to run a sweep:

```shell
python inference.py -e paper/mnist_denoising -t denoise -m sgm -s sgm_sweep
```

All working inference configs are in the ./configs/inference/paper folder. Pass the path to one of these configs (or just its name) to the --experiment flag when calling inference.py.
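Since --experiment accepts either a path or just a config name, the lookup could work roughly as follows. This is a sketch under the assumption that inference configs are `.yaml` files under `./configs/inference`; `resolve_experiment` is a hypothetical helper, not the repository's implementation.

```python
from pathlib import Path


def resolve_experiment(experiment, config_root="./configs/inference"):
    """Return the config file for a path or a name like 'paper/celeba_mnist_pigdm'."""
    # 1) An existing file path is used as-is.
    candidate = Path(experiment)
    if candidate.is_file():
        return candidate
    # 2) Otherwise treat it as a name relative to config_root (assumed .yaml suffix).
    named = Path(config_root) / experiment
    if named.suffix != ".yaml":
        named = named.with_suffix(".yaml")
    if named.is_file():
        return named
    raise FileNotFoundError(f"no inference config found for {experiment!r}")
```

Trying the literal path first keeps full paths working, while short names stay convenient for the configs shipped in ./configs/inference/paper.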

Make sure to set the data_root parameter in the inference config (for instance, this config). It defaults to the working directory. All datasets (for instance, CelebA and MNIST) should be downloaded automatically and placed in a subdirectory of the specified data_root. More information can be found in the datasets.py docstrings.
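The data_root behaviour described above (defaulting to the working directory, with each dataset in its own subdirectory) can be summarised in a few lines. This is a hedged sketch: representing the config as a plain dict and the `dataset_dir` helper and subdirectory names are assumptions; the actual logic lives in datasets.py.

```python
import os
from pathlib import Path


def dataset_dir(config, dataset_name):
    """Return <data_root>/<dataset_name>, falling back to the working directory.

    `config` is assumed to be a dict-like inference config with an optional
    'data_root' key; `dataset_name` is a hypothetical subdirectory name.
    """
    data_root = config.get("data_root") or os.getcwd()
    return Path(data_root) / dataset_name


print(dataset_dir({"data_root": "/data"}, "celeba"))
print(dataset_dir({}, "mnist"))
```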

Install the packages in a conda environment with TensorFlow 2 and PyTorch (the latter is only needed for the Glow baseline).

```shell
conda create -n joint-diffusion python=3.10
conda activate joint-diffusion
python -m pip install --upgrade pip
```

To install TensorFlow (installation guide):

```shell
conda install -c conda-forge cudatoolkit=11.2 cudnn=8.1.0
python -m pip install "tensorflow<2.11"
# Verify install:
python -c "import tensorflow as tf; print(tf.config.list_physical_devices('GPU'))"
```

To install PyTorch >= 1.13 (installation guide):

```shell
conda install pytorch pytorch-cuda=11.7 -c pytorch -c nvidia
conda install cudatoolkit
# Verify install:
python -c "import torch; print(torch.cuda.is_available())"
```

To install the other dependencies:

```shell
pip install -r requirements/requirements.txt
```

- Our paper: https://arxiv.org/abs/2302.05290
- Diffusion model implementation adopted from https://github.com/yang-song/score_sde_pytorch
- Glow and GAN implementations from https://github.com/CACTuS-AI/GlowIP