These custom nodes for ComfyUI let you inpaint using BrushNet: "BrushNet: A Plug-and-Play Image Inpainting Model with Decomposed Dual-Branch Diffusion".

My contribution is limited to the ComfyUI adaptation, and all credit goes to the authors of the paper.

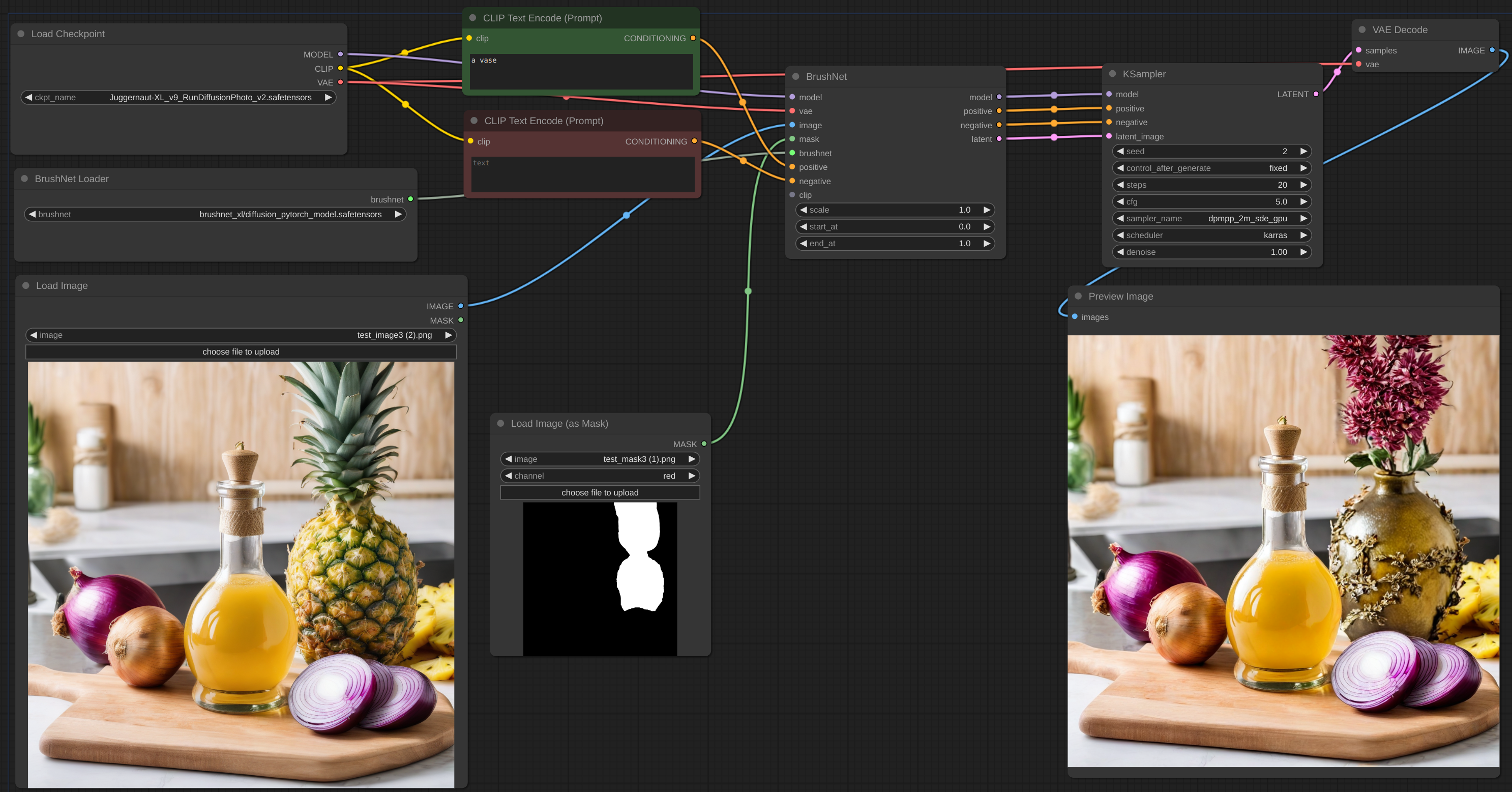

May 2, 2024. BrushNet SDXL is live. It needs both positive and negative conditioning, so the workflow changes slightly; see the example.

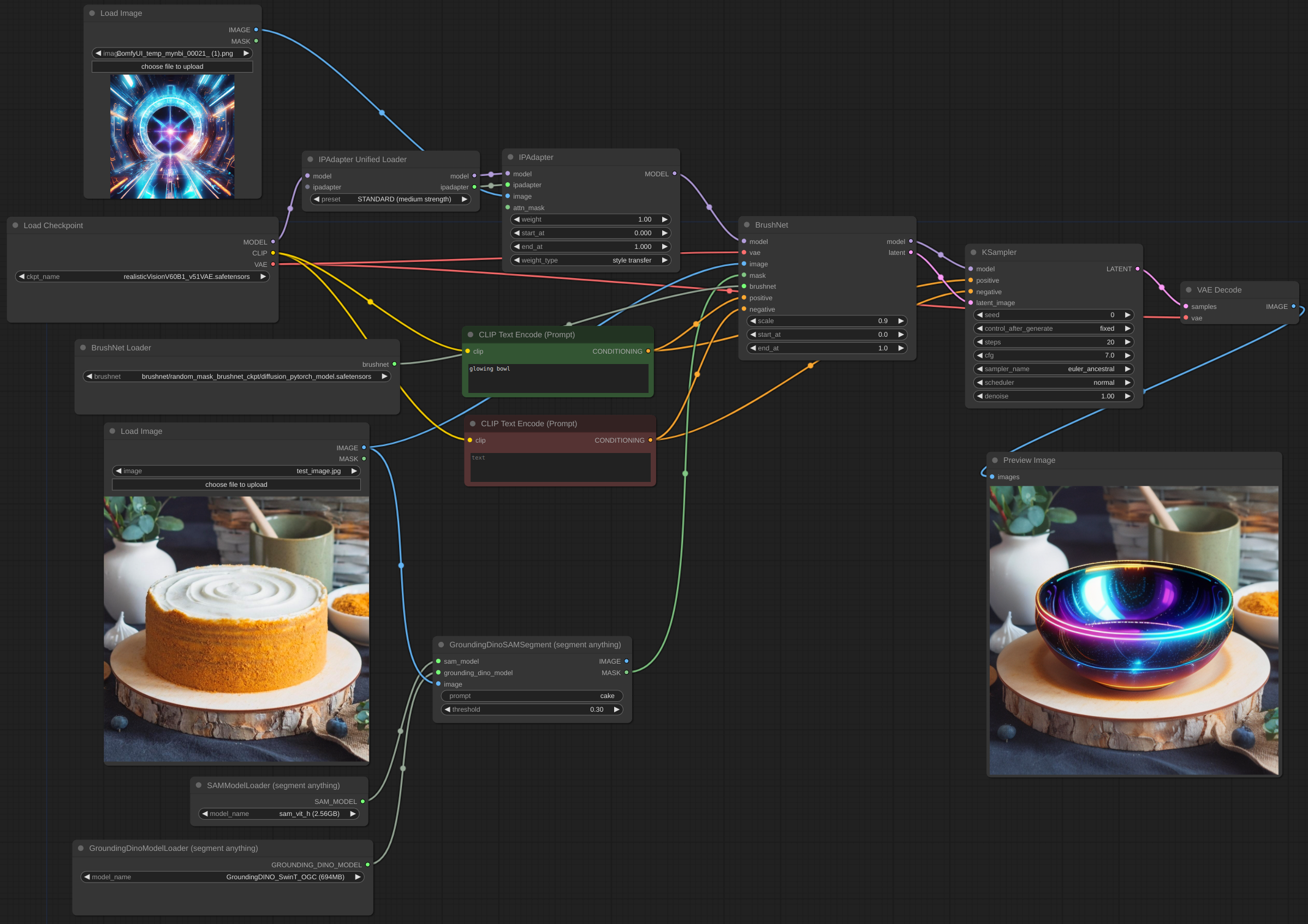

Apr 28, 2024. Another rework, sorry for the inconvenience. But now BrushNet is native to ComfyUI. The famous IPAdapter Plus by cubiq now works with BrushNet! I hope... :) Please report any bugs you find.

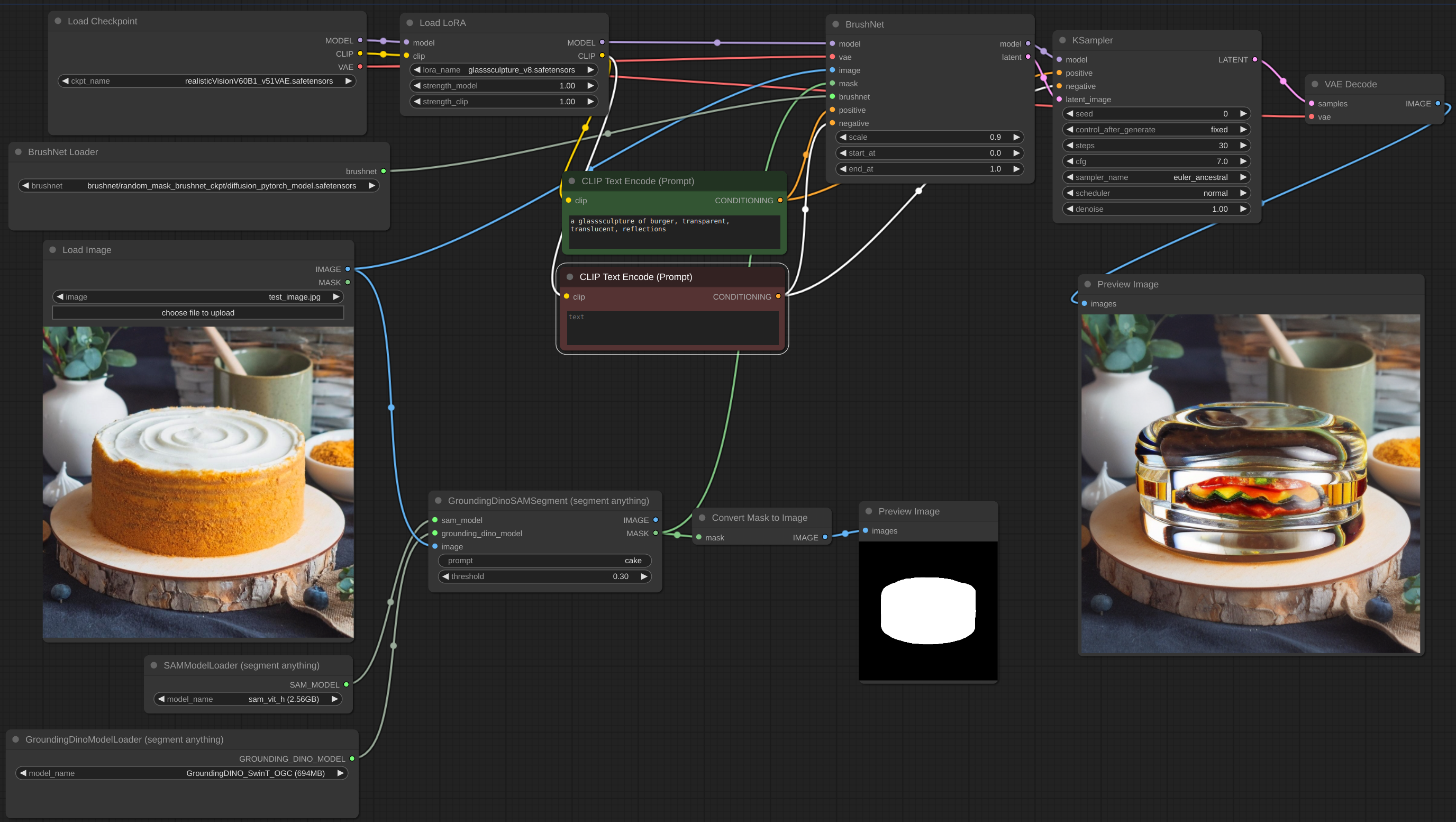

Apr 18, 2024. Complete rework, no more custom diffusers library. It is possible to use LoRA models.

Apr 11, 2024. Initial commit.

- BrushNet SDXL

- PowerPaint v2

- Compatibility with jank HiDiffusion and similar nodes

Clone the repo into the custom_nodes directory and install the requirements:

```shell
# run from your ComfyUI directory
cd custom_nodes
git clone https://github.com/nullquant/ComfyUI-BrushNet.git
cd ComfyUI-BrushNet
pip install -r requirements.txt
```

Checkpoints of BrushNet can be downloaded from here.

The segmentation_mask_brushnet_ckpt folder provides checkpoints trained on BrushData, which has a segmentation prior (masks have the same shape as the objects). The random_mask_brushnet_ckpt folder provides a more general checkpoint for random mask shapes.

segmentation_mask_brushnet_ckpt and random_mask_brushnet_ckpt contain BrushNet for SD 1.5 models, while segmentation_mask_brushnet_ckpt_sdxl_v0 and random_mask_brushnet_ckpt_sdxl_v0 are for SDXL.
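The naming scheme above boils down to two choices: base model (SD 1.5 or SDXL) and mask type (segmentation or random). A minimal Python sketch of that lookup follows; the function name `brushnet_checkpoint_folder` and the `"sd15"`/`"sdxl"` labels are hypothetical, and only the folder names come from the text.

```python
def brushnet_checkpoint_folder(base_model: str, mask_type: str) -> str:
    """Return the BrushNet checkpoint folder name for a base model and mask type.

    Hypothetical helper: "sd15"/"sdxl" and "segmentation"/"random" are
    illustrative labels, not options exposed by the nodes.
    """
    base = base_model.lower()
    if base not in ("sd15", "sdxl"):
        raise ValueError(f"unknown base model: {base_model!r}")
    if mask_type not in ("segmentation", "random"):
        raise ValueError(f"unknown mask type: {mask_type!r}")
    # SD 1.5 folders have no suffix; SDXL folders end in _sdxl_v0.
    folder = f"{mask_type}_mask_brushnet_ckpt"
    if base == "sdxl":
        folder += "_sdxl_v0"
    return folder


print(brushnet_checkpoint_folder("sdxl", "random"))  # random_mask_brushnet_ckpt_sdxl_v0
```

Pick the segmentation variant when your masks follow object outlines, and the random variant for arbitrary brush strokes.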

Place the diffusion_pytorch_model.safetensors files in your models/inpaint folder.
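Every checkpoint folder contains a file with the same name, diffusion_pytorch_model.safetensors, so putting several of them into models/inpaint requires distinct file names. A minimal sketch, assuming the downloaded folders sit side by side in one directory and that the loader does not depend on the original file name; `install_checkpoints` is a hypothetical helper, not part of these nodes.

```python
import shutil
from pathlib import Path


def install_checkpoints(download_dir: Path, inpaint_dir: Path) -> list[Path]:
    """Copy each <folder>/diffusion_pytorch_model.safetensors to inpaint_dir/<folder>.safetensors.

    Hypothetical helper: renaming after the parent folder keeps the files
    from overwriting each other in the flat models/inpaint directory.
    """
    inpaint_dir.mkdir(parents=True, exist_ok=True)
    installed = []
    for src in sorted(download_dir.glob("*/diffusion_pytorch_model.safetensors")):
        dst = inpaint_dir / f"{src.parent.name}.safetensors"
        shutil.copy2(src, dst)  # copy2 also preserves file timestamps
        installed.append(dst)
    return installed
```

For example, `install_checkpoints(Path("downloads"), Path("ComfyUI/models/inpaint"))` would produce files such as random_mask_brushnet_ckpt.safetensors, so each variant stays identifiable by name.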

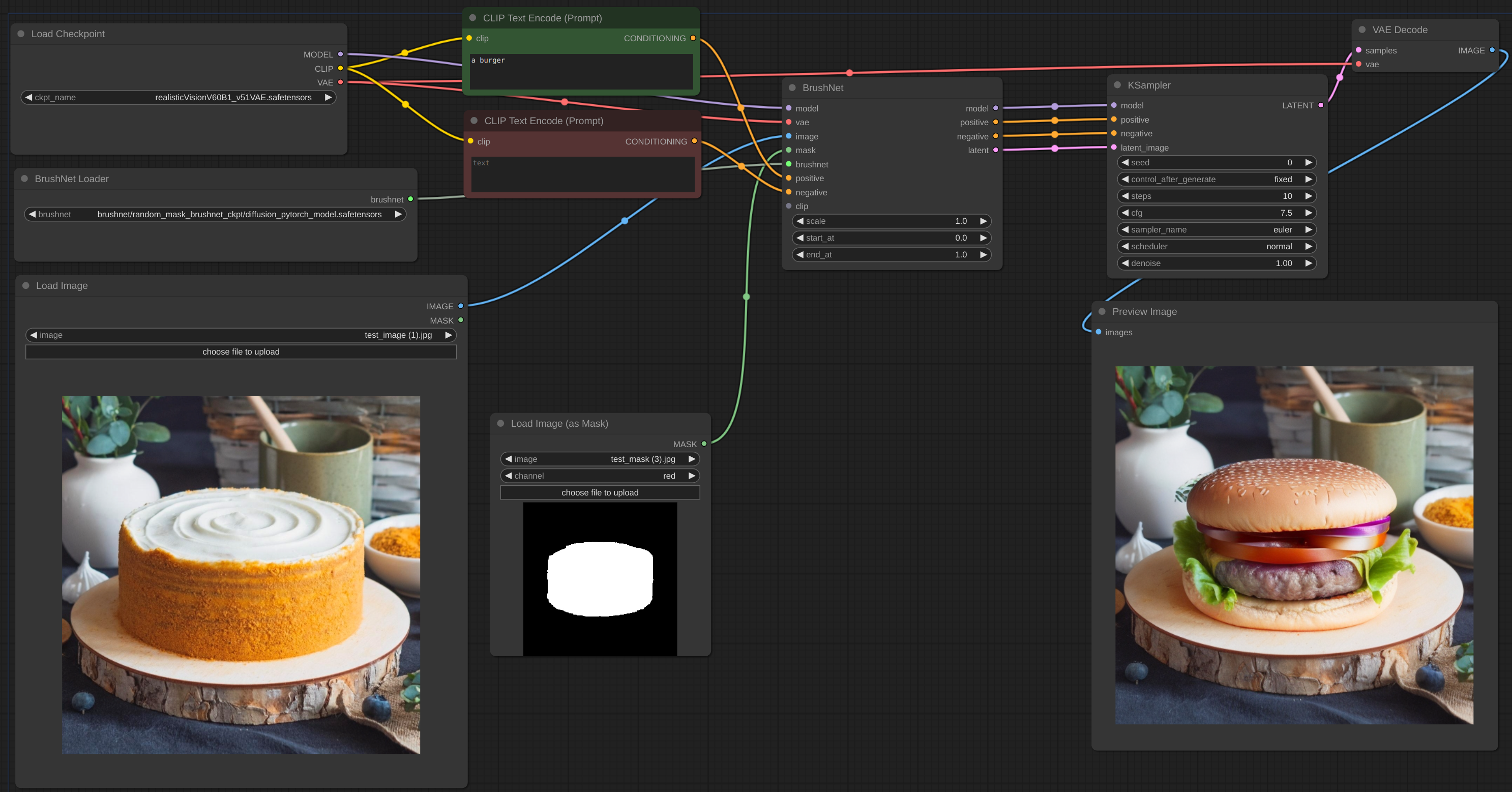

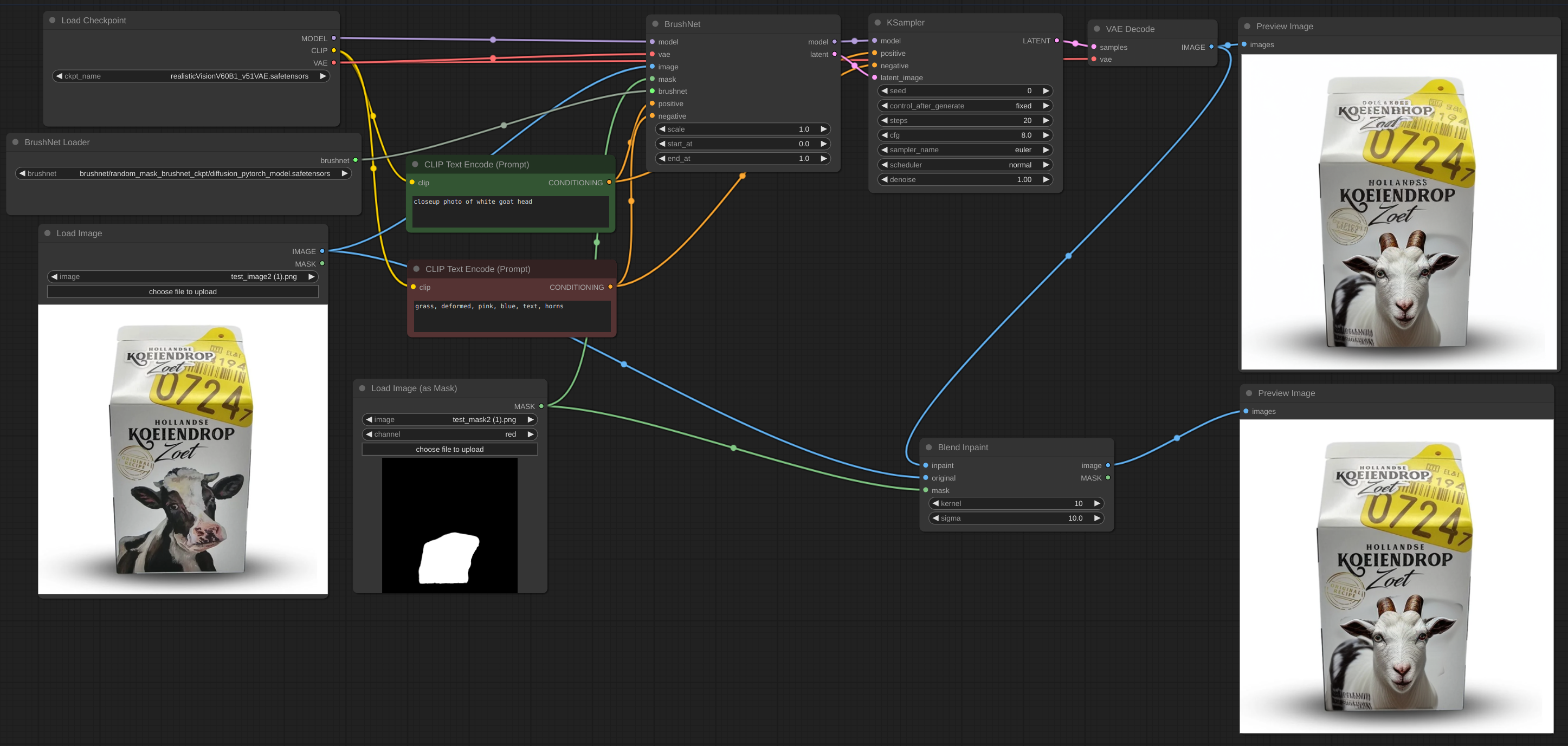

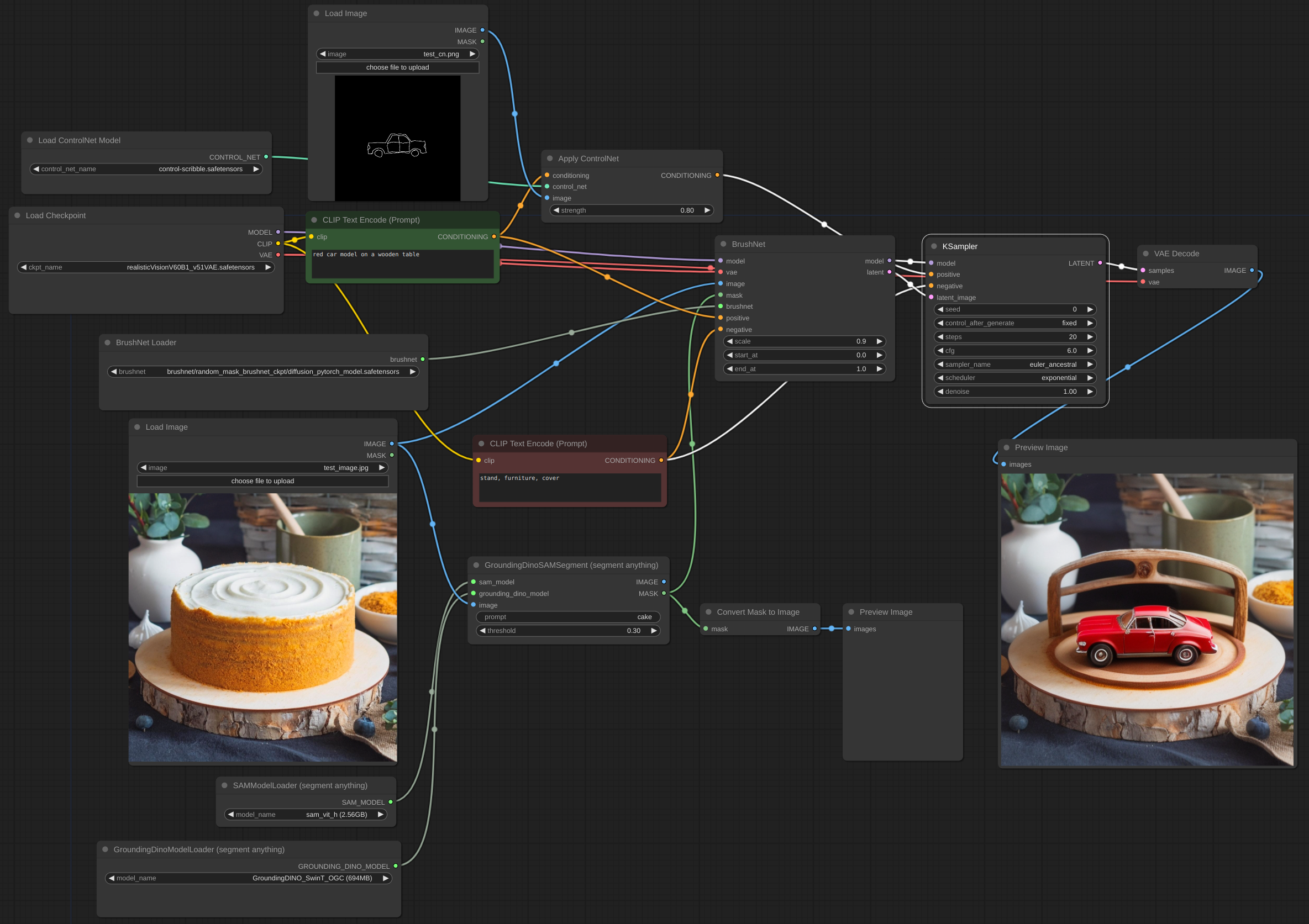

Below is an example of the intended workflow. The workflow for this example can be found in the 'example' directory.

The latent image can come from the BrushNet node or from elsewhere, but it must match the size of the original image (divided by 8 in latent space).
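The size rule can be checked with simple arithmetic: a 512x768 image corresponds to a 64x96 latent. A minimal sketch, assuming the 4-channel latents used by SD 1.5 and SDXL; `latent_shape` and `latent_matches_image` are hypothetical helpers, and this version rejects sizes that are not multiples of 8 rather than cropping them.

```python
def latent_shape(image_height: int, image_width: int, channels: int = 4) -> tuple[int, int, int]:
    """Return (channels, height / 8, width / 8) for an image of the given size."""
    if image_height % 8 or image_width % 8:
        raise ValueError("image height and width must be multiples of 8")
    return (channels, image_height // 8, image_width // 8)


def latent_matches_image(latent_hw: tuple[int, int], image_hw: tuple[int, int]) -> bool:
    """True if a latent of spatial size latent_hw fits an image of size image_hw."""
    return tuple(latent_hw) == latent_shape(*image_hw)[1:]


print(latent_shape(512, 768))  # (4, 64, 96)
```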

Blending inpaint

Sometimes inference and VAE decoding damage the image, so you need to blend the inpainted image with the original: workflow

The first image shows blurred and broken text after inpainting, along with how I propose to repair it.
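The blend itself is a per-pixel linear mix: keep the original outside the mask, take the inpainted result inside it, and optionally feather the mask edge so the seam is less visible. A minimal NumPy sketch, assuming float images of shape HxWxC in [0, 1] and an HxW mask where 1 marks the inpainted area; the function names are hypothetical, and this is not the node graph from the linked workflow.

```python
import numpy as np


def feather_mask(mask: np.ndarray, radius: int) -> np.ndarray:
    """Soften mask edges with a box blur of the given radius (edge pixels are replicated)."""
    mask = mask.astype(np.float32)
    if radius <= 0:
        return mask
    k = 2 * radius + 1
    padded = np.pad(mask, radius, mode="edge")
    out = np.zeros_like(mask)
    # Sum every shifted copy inside the k x k window, then average.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out / (k * k)


def blend_inpaint(original: np.ndarray, inpainted: np.ndarray,
                  mask: np.ndarray, radius: int = 0) -> np.ndarray:
    """original * (1 - m) + inpainted * m, with m the (optionally feathered) mask."""
    m = feather_mask(mask, radius)[..., None]  # HxWx1 so it broadcasts over channels
    return original * (1.0 - m) + inpainted * m
```

With `radius=0`, pixels outside the mask come back exactly as in the original, which is what repairs text that inference or the VAE blurred outside the inpainted region.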

Unfortunately, due to the nature of ComfyUI's code, some nodes are not compatible with these, since both try to patch the same ComfyUI functions.

List of known incompatible nodes.

I will think about how to avoid this.
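To illustrate why patching the same function causes conflicts, here is a toy Python sketch. It does not use ComfyUI's real code: the module stand-in and the `apply_model` name are hypothetical. When two nodes each replace a function outright, the last one wins and the first patch silently disappears; wrapping the previously installed function lets both run.

```python
import types


def make_module():
    # Stand-in for a ComfyUI module; `apply_model` is a hypothetical function name.
    return types.SimpleNamespace(apply_model=lambda x: x)


def patch_replace(module, tag):
    # Replaces the function outright, discarding whatever was installed before.
    def patched(x):
        return f"{tag}({x})"
    module.apply_model = patched


def patch_wrap(module, tag):
    # Keeps a reference to the current function and calls it, so earlier patches survive.
    previous = module.apply_model

    def patched(x):
        return f"{tag}({previous(x)})"
    module.apply_model = patched


replaced = make_module()
patch_replace(replaced, "BrushNet")
patch_replace(replaced, "OtherNode")
print(replaced.apply_model("x"))  # OtherNode(x): the first patch is lost

wrapped = make_module()
patch_wrap(wrapped, "BrushNet")
patch_wrap(wrapped, "OtherNode")
print(wrapped.apply_model("x"))  # OtherNode(BrushNet(x)): both patches run
```

Wrapping only helps when the patches can cooperate; if each node expects sole control over the function's behavior, they can still conflict.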

The code is based on