Task2KB is a task-oriented instructional knowledge base that offers structured instructions and rich related information on tasks across 19 categories. Task2KB also supports access to this knowledge via several retrieval techniques (field-based and dense retrievers).

Additionally, to illustrate the value of Task2KB, we experimentally inject its knowledge into task-oriented dialogue (TOD) models with two novel development pipelines: (1) fine-tuning LLMs with knowledge from Task2KB to boost TOD model performance, and (2) direct context augmentation with the available knowledge. We observe significant and consistent improvements in the performance of recent TOD models.

| Category (Quantity) | Category (Quantity) | Category (Quantity) |
|---|---|---|
| Arts & Entertainment (13,320) | Car & Other Vehicles (4,925) | Computers & Electronics (24,447) |
| Education & Communication (24,530) | Family Life (5,383) | Finance & Business (15,305) |
| Food & Entertaining (9,966) | Health (25,471) | Hobbies & Crafts (22,383) |
| Holidays & Traditions (2,569) | Home & Garden (24,885) | Pets & Animals (15,087) |
| Philosophy & Religion (2,872) | Relationship (7,880) | Sports & Fitness (10,094) |
| Style (18,854) | Travel (4,826) | Work (11,524) |
| Youth (7,112) | | |

To access Task2KB, we provide quick access via JSON files stored at this Google Drive link. We further illustrate multiple applications of Task2KB.

Aside from the task knowledge that we collected from WikiHow, for each task we also identify and share related attributes that could serve as task slots for task completion. One example list of attributes is ['packaging', 'fridging method', 'shipping policy'] for the task 'How to ship food'. The attribute identification strategy can be summarised in the following steps:

- Tag entities with TagMe, which identifies entities that can be linked to a specific Wikipedia page.

- Aggregate section titles or names, which are meaningful summaries or actions, as candidate attributes.

- Compute the semantic similarity, via a pre-trained BERT model, between task titles and the candidate attributes, and use the top-5 most similar attributes as the finalised attributes for a task.
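The last step above can be sketched as a top-k ranking by cosine similarity. This is only an illustration: `toy_embed` is a hypothetical bag-of-words stand-in for the pre-trained BERT encoder, and `top_k_attributes` is an illustrative name rather than a function from this repository. Any function that maps text to a vector could be passed as `embed`.

```python
import math
from collections import Counter
from typing import Callable, Dict, List

Vector = Dict[str, float]


def toy_embed(text: str) -> Vector:
    """Hypothetical stand-in embedder (bag of lowercase words), NOT BERT."""
    return dict(Counter(text.lower().split()))


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_attributes(
    title: str,
    candidates: List[str],
    embed: Callable[[str], Vector] = toy_embed,
    k: int = 5,
) -> List[str]:
    """Return the k candidates most similar to the task title."""
    title_vec = embed(title)
    # sorted() is stable, so tied candidates keep their original order.
    ranked = sorted(
        candidates,
        key=lambda cand: cosine(title_vec, embed(cand)),
        reverse=True,
    )
    return ranked[:k]


candidates = ["packaging", "shipping policy", "food storage", "weather"]
result = top_k_attributes("how to ship food", candidates, k=2)
```

With the toy embedder only exact word overlap counts, so it ranks "food storage" first here; a real sentence encoder would also capture the relation between "ship" and "shipping".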

The resulting attribute data can be accessed here.

Task2KB can be used to generate synthetic task-oriented conversational datasets from its step-wise instructions. We make publicly available the implementation for fine-tuning a dialogue generator based on T5 or Flan-T5, as well as the dialogue generation code. In addition, we show how to further fine-tune a distilgpt2 model for response generation.

The shared code in the data_processing folder includes implementations that use three candidate conversational datasets, ORConvQA, QReCC and MultiWoZ, to compare the effect of different training data when developing dialogue generators.

We also make publicly available the implementations that use topic sentences, or sentences with high specificity, for dialogue generation.

Next, in question_generator_train, we explore various strategies for generating the synthetic dialogues, such as using a Flan-T5 model that is trained on ORConvQA dialogues and then further fine-tuned on the MultiWoZ dataset (i.e., Flan_T5_OR_MultiWoZ.py). We also make publicly available the saved question_generation_model, which is trained_on_multiwoz: https://drive.google.com/file/d/1H_dMut5HV72as8zkLV_w07Tz8HkM-OYA/view?usp=share_link

With a model that can progressively generate questions whose answers are the step descriptions, we move to generating full synthetic dialogues: the last generated question-answer pair is used as context, and the dialogue is generated step by step (see dialogue_generation.py).
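The step-wise loop described above can be sketched as follows. This is a simplified illustration, not the code in dialogue_generation.py: `generate_dialogue`, the `ask` callable and `template_ask` are hypothetical names, and `template_ask` is a placeholder for a fine-tuned T5 or Flan-T5 question generator.

```python
from typing import Callable, List, Optional, Tuple

QAPair = Tuple[str, str]


def generate_dialogue(
    steps: List[str],
    ask: Callable[[Optional[QAPair], str], str],
) -> List[QAPair]:
    """Build one (question, answer) turn per step.

    Each question is conditioned on the previous turn (the context) and on
    the current step, whose description serves as the answer.
    """
    dialogue: List[QAPair] = []
    last_pair: Optional[QAPair] = None
    for step in steps:
        question = ask(last_pair, step)  # hypothetical generator call
        last_pair = (question, step)     # the step description is the answer
        dialogue.append(last_pair)
    return dialogue


def template_ask(context: Optional[QAPair], step: str) -> str:
    """Placeholder generator; a fine-tuned model would be called here."""
    if context is None:
        return "What should I do first?"
    return "What comes after that?"


steps = ["Pack the food.", "Add ice packs."]
dialogue = generate_dialogue(steps, template_ask)
```

Keeping the generator behind a plain callable makes it easy to swap in models trained on different datasets without changing the loop.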

Next, with the generated dialogues (INST2DIAL-Auto), we can fine-tune a large language model (e.g., distilgpt2) for task-oriented response generation (see distilgpt2-train-RespGen.py). The fine-tuned distilgpt2 model is also available here: link.

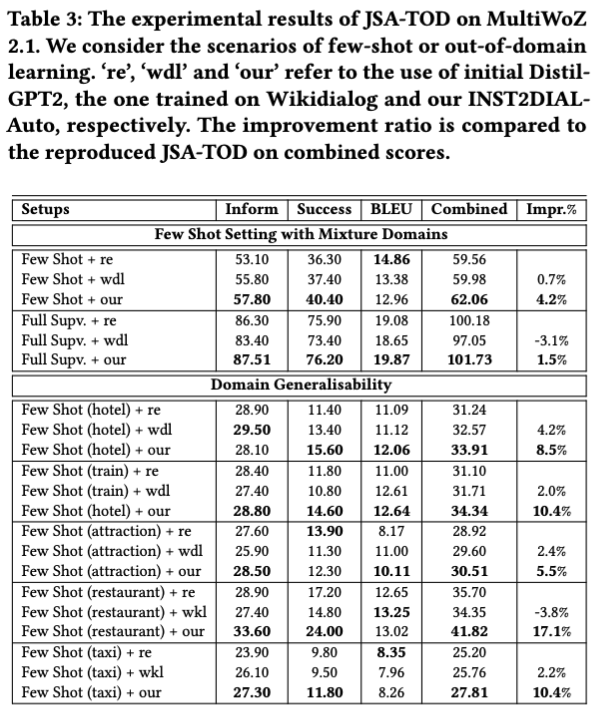

In particular, to show the effectiveness of using Task2KB, we experimentally compare against the use of WikiDialog, which was generated from Wikipedia passages. The corresponding checkpoint is also available: link. Then, we use the fine-tuned distilgpt2 in two recent task-oriented conversational models (UBAR and JSA-TOD) and compare the performance differences:

| Experimental Results of UBAR & variants | Experimental Results of JSA-TOD & variants |
|---|---|
| | |

On the other hand, to enable direct use of Task2KB, we also implement two document indexing methods, field-based indexing and dense indexing:

The field-based indexing leverages the task steps, task descriptions and task summary as

The implementation is available here; it builds the index with Lucene 5.5.0.

In addition, we make the resource publicly available for download via this link.
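To give an intuition for field-based retrieval, the sketch below scores a query against the steps, description and summary fields of each task separately and combines the scores with per-field weights. It is a toy in-memory illustration only: the actual index is built with Lucene, and the simple term-count scoring, the field weights, and the names `field_score` and `search` are assumptions made for this example.

```python
from typing import Dict, List, Optional, Tuple

FIELDS = ("steps", "description", "summary")


def field_score(query: str, doc: Dict[str, str], weights: Dict[str, float]) -> float:
    """Weighted count of query-term matches over the indexed fields."""
    terms = query.lower().split()
    score = 0.0
    for field in FIELDS:
        tokens = doc.get(field, "").lower().split()
        matches = sum(tokens.count(term) for term in terms)
        score += weights.get(field, 1.0) * matches
    return score


def search(
    query: str,
    docs: List[Dict[str, str]],
    weights: Optional[Dict[str, float]] = None,
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return up to k (title, score) pairs with a non-zero score, best first."""
    weights = weights or {}
    scored = [(doc["title"], field_score(query, doc, weights)) for doc in docs]
    scored = [item for item in scored if item[1] > 0]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


docs = [
    {"title": "How to Ship Food", "steps": "pack the food in ice",
     "description": "ship food safely", "summary": "ship cold food"},
    {"title": "How to Paint a Wall", "steps": "apply paint",
     "description": "paint a wall", "summary": "paint"},
]
hits = search("ship food", docs)
boosted = search("ship food", docs, weights={"summary": 2.0})
```

Scoring fields separately lets a match in a short, focused field such as the summary count for more than a match buried in long step text.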

The dense indexing and knowledge access are implemented by combining Facebook's Faiss with Dense Passage Retrieval (DPR). To balance document length and information specificity, we structure each step of the task instructions in the following format:

id [tab] introduction + step description [tab] Task title
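A minimal sketch of producing one such row is shown below. The helper name `format_step_row` is hypothetical, and two details are assumptions: the introduction and step description are joined with a single space, and internal tabs or newlines are collapsed so that every row keeps exactly three columns.

```python
def format_step_row(
    step_id: str,
    introduction: str,
    step_description: str,
    task_title: str,
) -> str:
    """Build one row: id [tab] introduction + step description [tab] task title."""

    def clean(text: str) -> str:
        # Collapse tabs, newlines and repeated spaces into single spaces
        # so that stray tabs cannot create extra columns.
        return " ".join(text.split())

    passage = clean(f"{introduction} {step_description}")
    return "\t".join([clean(step_id), passage, clean(task_title)])


row = format_step_row(
    "0",
    "Shipping food needs care.",
    "Pack it\tin ice.",
    "How to Ship Food",
)
```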

Afterwards, we use the `generate_dense_embeddings.py` script in ParlAI to encode the information by running:

python generate_dense_embeddings.py -mf zoo:hallucination/multiset_dpr/hf_bert_base.cp --dpr-model True --passages-file step_info_cl.tsv \
--outfile task2kb_index/step_info --num-shards 50 --shard-id 0 -bs 32

The `step_info_cl.tsv` file can be obtained via the following link. Next, we also use the exact indexer type from ParlAI to build the index as follows:

python index_dense_embeddings.py --retriever-embedding-size 768 \
--embeddings-dir task2kb_index/ --embeddings-name task2kb --indexer-type exact

The resulting index of dense embeddings then allows quick access to task-oriented information when deploying knowledge-augmented task-oriented conversational models.
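Conceptually, an exact index compares the query embedding with every stored embedding rather than approximating the search. The sketch below shows that idea in plain Python, assuming inner-product scoring as used by DPR. The function name `exact_search` and the tiny two-dimensional vectors are illustrative only; in practice Faiss performs this search over the 768-dimensional embeddings.

```python
from typing import Dict, List, Sequence, Tuple


def exact_search(
    query_vec: Sequence[float],
    index: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Brute-force top-k retrieval by inner product over all stored vectors."""
    scores = [
        (passage_id, sum(q * p for q, p in zip(query_vec, vec)))
        for passage_id, vec in index.items()
    ]
    return sorted(scores, key=lambda item: item[1], reverse=True)[:k]


# Toy index: passage id -> embedding (real embeddings have 768 dimensions).
toy_index = {"s1": [1.0, 0.0], "s2": [0.0, 1.0], "s3": [0.5, 0.5]}
top = exact_search([1.0, 0.0], toy_index, k=2)
```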