This repository contains the implementation of our paper:

NeRF-LOAM: Neural Implicit Representation for Large-Scale Incremental LiDAR Odometry and Mapping (PDF)

Junyuan Deng, Qi Wu, Xieyuanli Chen, Songpengcheng Xia, Zhen Sun, Guoqing Liu, Wenxian Yu and Ling Pei

If you use our code in your work, please star our repo and cite our paper.

@inproceedings{deng2023nerfloam,

title={NeRF-LOAM: Neural Implicit Representation for Large-Scale Incremental LiDAR Odometry and Mapping},

author={Junyuan Deng and Qi Wu and Xieyuanli Chen and Songpengcheng Xia and Zhen Sun and Guoqing Liu and Wenxian Yu and Ling Pei},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

year={2023}

}

- Results of our incremental simultaneous odometry and mapping on the Newer College dataset and on KITTI sequence 00.

- The maps are dense meshes; the red line shows the odometry results.

- We use the same network without training to demonstrate the generalization ability of our design.

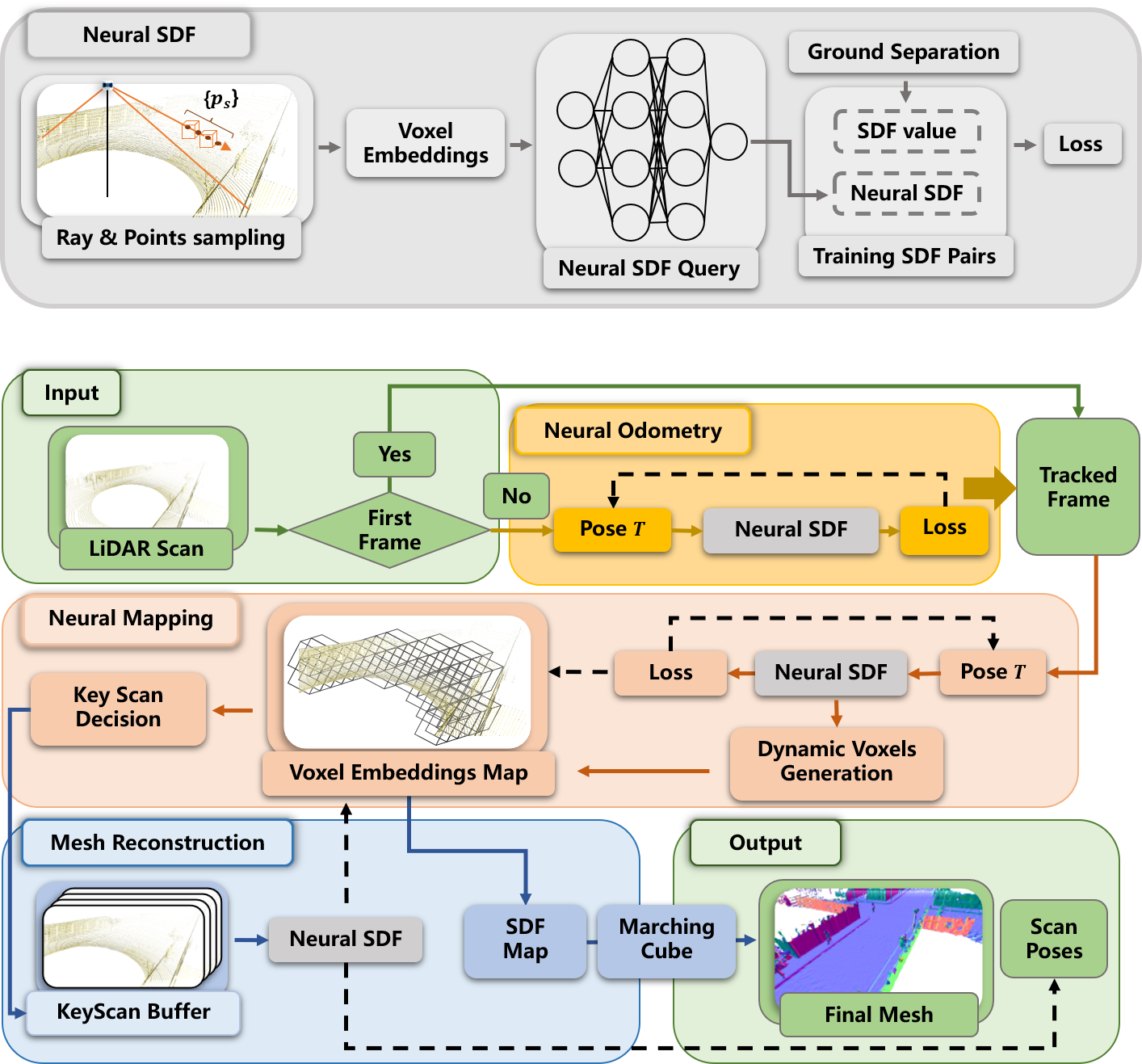

Overview of our method. Our method is based on our neural SDF and is composed of three main components:

- Neural odometry takes the pre-processed scan and optimizes the pose by back-projecting the queried neural SDF;

- Neural mapping jointly optimizes the voxel embedding map and the pose while selecting key-scans;

- The key-scan-refined map returns SDF values, and the final mesh is reconstructed with marching cubes.
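As a rough illustration of the odometry idea above (the pose is adjusted until the scan points land on the zero level set of the SDF), the toy sketch below swaps the neural SDF for the analytic SDF of a unit circle and estimates only a 2D translation by gradient descent. The names `circle_sdf` and `estimate_translation` are hypothetical and do not exist in this repository; the actual method optimizes a full pose against a learned SDF.

```python
import math


def circle_sdf(x, y, radius=1.0):
    """Signed distance from (x, y) to a circle centered at the origin."""
    return math.hypot(x, y) - radius


def estimate_translation(points, radius=1.0, steps=200, lr=0.5):
    """Find (tx, ty) that minimizes the mean squared SDF of the shifted points."""
    tx, ty = 0.0, 0.0
    n = len(points)
    for _ in range(steps):
        gx, gy = 0.0, 0.0
        for px, py in points:
            qx, qy = px + tx, py + ty
            dist = math.hypot(qx, qy)
            if dist < 1e-12:
                continue  # the SDF gradient is undefined at the circle center
            d = dist - radius
            # Gradient of d^2 w.r.t. the translation: 2 * d * q / |q|
            gx += 2.0 * d * qx / dist
            gy += 2.0 * d * qy / dist
        tx -= lr * gx / n
        ty -= lr * gy / n
    return tx, ty


# Simulated "scan": points on the unit circle, displaced by an unknown offset.
true_offset = (0.3, -0.2)
scan = [
    (math.cos(a) + true_offset[0], math.sin(a) + true_offset[1])
    for a in (2.0 * math.pi * k / 36 for k in range(36))
]
tx, ty = estimate_translation(scan)
print(f"estimated correction: ({tx:.4f}, {ty:.4f})")
```

The recovered correction should be close to the negative of `true_offset`, since that is the shift that puts every point back on the surface.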

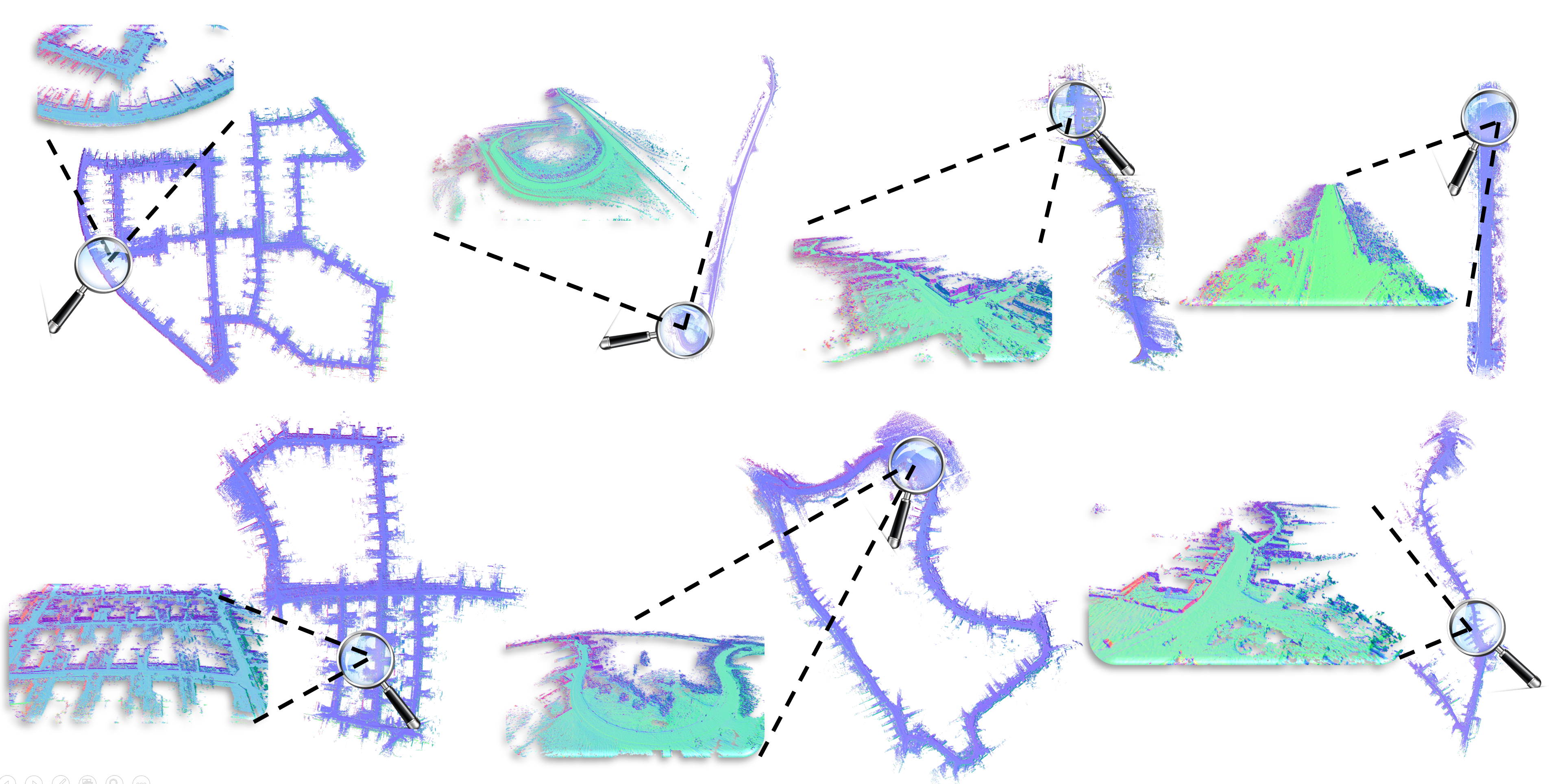

The reconstructed maps

Qualitative results of our odometry and mapping on the KITTI dataset. From top left to bottom right, we show the results for sequences 00, 01, 03, 04, 05, 09, 10.

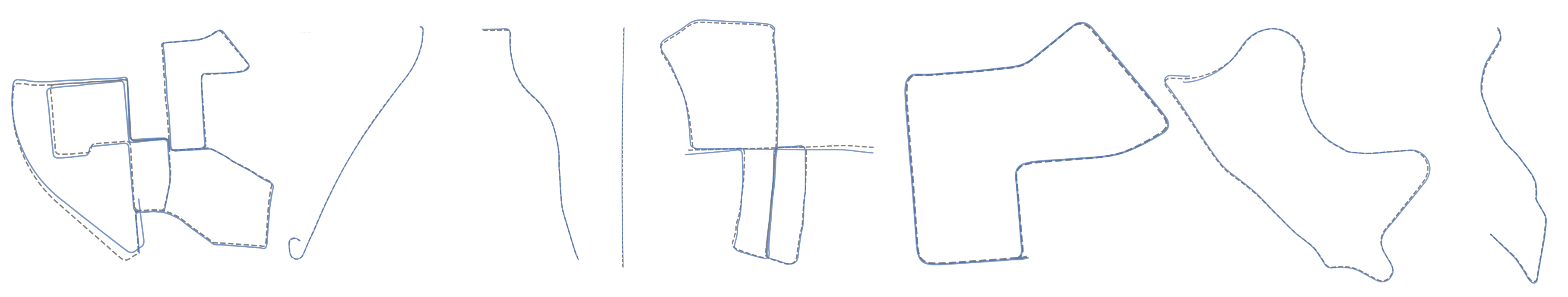

The odometry results

The qualitative results of our odometry on the KITTI dataset. From left to right, we list the results of sequences 00, 01, 03, 04, 05, 07, 09, 10. The dashed line corresponds to the ground truth and the blue line to our odometry method.

- Newer College real-world LiDAR dataset: website.

- MaiCity synthetic LiDAR dataset: website.

- KITTI dataset: website.

To run the code, a GPU with large memory is preferred. We tested the code with RTX3090 and GTX TITAN.

We use Conda to create a virtual environment and install dependencies:

- Python environment: we tested our code with Python 3.8.13.

- PyTorch: the version we tested is 1.10 with CUDA 10.2 (and CUDA 11.1).

- Other dependencies are specified in requirements.txt. You can install all of them using pip or conda:

pip3 install -r requirements.txt

- After you have installed all third-party libraries, run the following script to build the extra PyTorch modules used in this project:

sh install.sh

- Replace the filename in mapping.py with the path of the built library:

torch.classes.load_library("third_party/sparse_octree/build/lib.xxx/svo.xxx.so")
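Instead of editing the hard-coded library path by hand, the built file could also be located with a glob. The sketch below is not part of the repository: `find_built_library` is a hypothetical helper, and it assumes the build output follows the `lib.xxx/svo.xxx.so` layout shown above, with the `xxx` parts treated as wildcards.

```python
import glob
import os


def find_built_library(repo_root=".",
                       pattern="third_party/sparse_octree/build/lib.*/svo.*.so"):
    """Return the path of the built sparse-octree library.

    Raises if nothing matches (install.sh has probably not been run)
    or if several candidates match (the choice would be ambiguous).
    """
    matches = sorted(glob.glob(os.path.join(repo_root, pattern)))
    if not matches:
        raise FileNotFoundError(
            f"No built library matches {pattern!r}; run install.sh first."
        )
    if len(matches) > 1:
        raise RuntimeError(f"Several built libraries found: {matches}")
    return matches[0]


# Possible usage inside mapping.py (needs PyTorch, so it is left commented out):
# import torch
# torch.classes.load_library(find_built_library())
```

Failing loudly on zero or multiple matches keeps a stale build from being loaded silently.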

- patchwork-plusplus is used to separate ground from LiDAR points.

- Replace the filename in src/dataset/*.py with the built library:

patchwork_module_path = "/xxx/patchwork-plusplus/build/python_wrapper"

- The full datasets can be downloaded as mentioned above. You can also download only the example part of a dataset using these scripts.

- Take the MaiCity seq. 01 dataset as an example: modify configs/maicity/maicity_01.yaml so that the data_path section points to the real dataset path. Now you are all set to run the code:

python demo/run.py configs/maicity/maicity_01.yaml

- For the KITTI dataset, if you want faster processing, you can switch to the subscene branch:

git checkout subscene

- Then run with

python demo/run.py configs/kitti/kitti_00.yaml

- This branch cuts the full scene into subscenes to speed up processing and then concatenates them. This will certainly introduce map inconsistency and degrade tracking accuracy.
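To make the trade-off of the subscene branch more concrete, here is a minimal sketch of the general idea only, not the branch's actual code. It assumes that the frame sequence is cut into fixed-size chunks sharing their boundary frame, that each chunk is tracked relative to its own first frame, and that the chunks are joined by composing poses. The chunk size, the simplified 2D poses `(x, y, theta)` and all function names are assumptions made for illustration.

```python
import math


def split_into_subscenes(num_frames, subscene_size):
    """Cut frame indices into chunks that share their boundary frame."""
    if subscene_size < 2:
        raise ValueError("subscene_size must be at least 2")
    if num_frames <= 1:
        return [list(range(num_frames))]
    chunks = []
    start = 0
    while start < num_frames - 1:
        end = min(start + subscene_size, num_frames)
        chunks.append(list(range(start, end)))
        start = end - 1  # the last frame of this chunk opens the next one
    return chunks


def compose(a, b):
    """Compose two 2D poses (x, y, theta): express b in the frame of a."""
    ax, ay, at = a
    bx, by, bt = b
    return (
        ax + math.cos(at) * bx - math.sin(at) * by,
        ay + math.sin(at) * bx + math.cos(at) * by,
        at + bt,
    )


def concatenate(local_trajectories):
    """Chain per-subscene trajectories into one global trajectory.

    Each local trajectory starts at the identity pose of its own subscene.
    """
    global_poses = []
    anchor = (0.0, 0.0, 0.0)
    for k, local in enumerate(local_trajectories):
        chained = [compose(anchor, pose) for pose in local]
        # Drop the duplicated boundary frame for every chunk after the first.
        global_poses.extend(chained if k == 0 else chained[1:])
        anchor = chained[-1]
    return global_poses


# Toy data: 7 frames moving 1 unit per frame along x, in chunks of 3 frames.
chunks = split_into_subscenes(7, 3)
local = [[(float(i), 0.0, 0.0) for i in range(len(c))] for c in chunks]
trajectory = concatenate(local)
```

Because each chunk is anchored on the last pose of the previous one, an error at a chunk boundary is carried into every later pose, which is one way to see why concatenation can hurt consistency and accuracy.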

- We follow the evaluation proposed here, but we did not use crop_intersection.py.

Some of our code is adapted from Vox-Fusion.

Any questions or suggestions are welcome!

Junyuan Deng: [email protected] and Xieyuanli Chen: [email protected]

This project is free software made available under the MIT License. For details see the LICENSE file.