PyTorch implementation for the paper "AU-aware graph convolutional network for Macro- and Micro-expression spotting" (ICME-2023, Poster): IEEE version, arXiv version.

The code is modified from USTC_ME_Spotting.

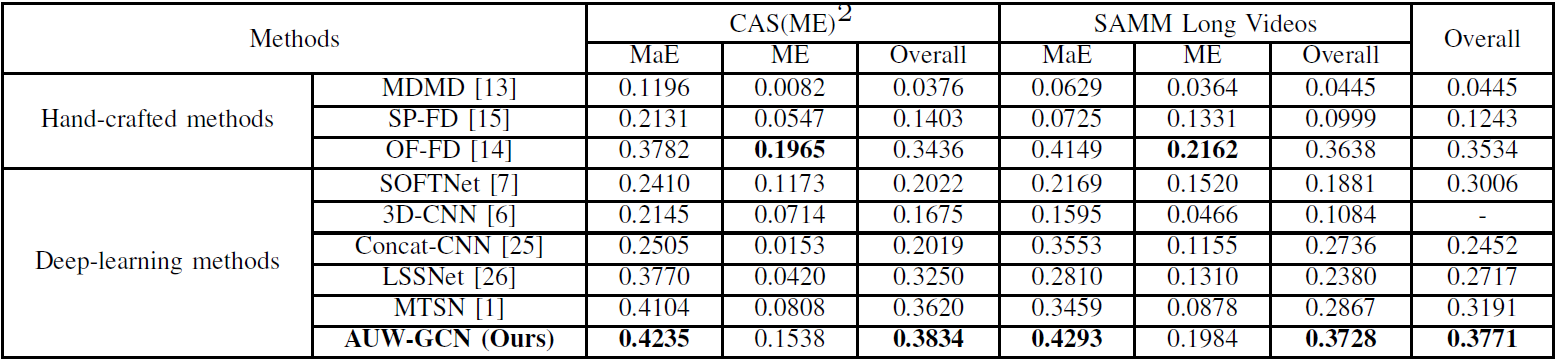

We compare our method with others on two benchmark datasets, CAS(ME)^2 and SAMM-LV, in terms of F1-score.
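As a reading aid for the F1-score comparison, here is a minimal sketch of interval-level F1 for spotting. It assumes the MEGC-style rule: a predicted interval is a true positive when its temporal IoU with a not-yet-matched ground-truth interval is at least 0.5, and TP/FP/FN counts are summed over all videos before F1 is computed. The helper names (`temporal_iou`, `match_counts`, `f1_from_counts`) and the greedy matching are illustrative assumptions, not the evaluation code in this repository.

```python
# Minimal sketch of interval-level spotting F1 (assumed MEGC-style rule:
# a prediction is a TP if temporal IoU >= 0.5 with an unmatched GT interval).
# Helper names are hypothetical; this is not the repository's evaluation code.
from typing import List, Tuple

Interval = Tuple[int, int]  # (onset_frame, offset_frame), both inclusive


def temporal_iou(a: Interval, b: Interval) -> float:
    """Intersection-over-union of two inclusive frame intervals."""
    inter = min(a[1], b[1]) - max(a[0], b[0]) + 1
    if inter <= 0:
        return 0.0
    union = (a[1] - a[0] + 1) + (b[1] - b[0] + 1) - inter
    return inter / union


def match_counts(preds: List[Interval], gts: List[Interval],
                 thr: float = 0.5) -> Tuple[int, int, int]:
    """Greedily match each prediction to at most one GT; return (TP, FP, FN)."""
    matched = set()
    tp = 0
    for p in preds:
        for i, g in enumerate(gts):
            if i not in matched and temporal_iou(p, g) >= thr:
                matched.add(i)
                tp += 1
                break
    return tp, len(preds) - tp, len(gts) - tp


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 = 2PR / (P + R); defined as 0 when there are no true positives."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical (predictions, ground truth) for two videos; counts are summed before F1.
    videos = [
        ([(12, 30), (200, 210)], [(10, 30), (100, 120)]),
        ([(50, 60)], [(48, 62)]),
    ]
    totals = [0, 0, 0]
    for preds, gts in videos:
        for k, c in enumerate(match_counts(preds, gts)):
            totals[k] += c
    print(totals, round(f1_from_counts(*totals), 3))  # prints: [2, 1, 1] 0.667
```

Greedy matching keeps the sketch short; an optimal one-to-one assignment can give slightly different counts when intervals overlap heavily.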

The experiments were run in the following environment:

- OS: Ubuntu 20.04.4 LTS
- Python: 3.8
- PyTorch: 1.10.1
- CUDA: 10.2, cuDNN: 7.6.5
- GPU: NVIDIA GeForce RTX 2080 Ti
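To compare a local setup with the environment listed above, a small sketch like the following can print the relevant versions. It assumes PyTorch is installed from requirements.txt (the PyTorch-related entries stay `None` otherwise); `collect_env` is a hypothetical helper, not part of this repository.

```python
# Hypothetical helper: report versions to compare with the tested environment.
# Assumption: PyTorch comes from requirements.txt; if it is missing, the
# torch/CUDA/cuDNN/GPU entries are left as None.
import platform
from typing import Dict, Optional


def collect_env() -> Dict[str, Optional[str]]:
    env: Dict[str, Optional[str]] = {
        "os": platform.platform(),
        "python": platform.python_version(),
        "torch": None,
        "cuda": None,
        "cudnn": None,
        "gpu": None,
    }
    try:
        import torch
    except ImportError:
        return env
    env["torch"] = str(torch.__version__)
    env["cuda"] = torch.version.cuda  # None for CPU-only builds
    cudnn = torch.backends.cudnn.version()  # e.g. 7605 for cuDNN 7.6.5, or None
    env["cudnn"] = None if cudnn is None else str(cudnn)
    if torch.cuda.is_available():
        env["gpu"] = torch.cuda.get_device_name(0)
    return env


if __name__ == "__main__":
    for key, value in collect_env().items():
        print(f"{key}: {value}")
```

PyTorch also ships a fuller report via `python -m torch.utils.collect_env`.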

- Clone this repository

$ git clone git@github.com:xjtupanda/AUW-GCN.git

$ cd AUW-GCN

- Prepare environment

$ conda create -n env_name python=3.8

$ conda activate env_name

$ pip install -r requirements.txt

- Download features

For the SAMM-LV and CAS(ME)^2 datasets, please download features.tar.gz (modified from USTC_ME_Spotting#features-and-config-file) and extract it:

$ tar -xf features.tar.gz -C dir_to_save_feature

After extracting the feature files, set the feature path variable, segment_feat_root, in config.yaml accordingly.
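If you prefer to script the config change, here is a minimal sketch. It assumes `segment_feat_root` appears in config.yaml as a single-line `segment_feat_root: <path>` entry (at any indentation) and rewrites every occurrence; `set_feat_root` is a hypothetical helper, and the exact feature directory depends on how the archive unpacks under dir_to_save_feature.

```python
# Hypothetical helper: rewrite the segment_feat_root value in config.yaml.
# Assumption: the key sits on its own line as "segment_feat_root: <path>"
# (any indentation); every occurrence is replaced and trailing comments dropped.
import re
from pathlib import Path

_KEY_RE = re.compile(r"^([ \t]*segment_feat_root[ \t]*:).*$", re.MULTILINE)


def set_feat_root(config_text: str, feat_dir: str) -> str:
    """Return config_text with each segment_feat_root value set to feat_dir."""
    # A function replacement avoids backslash escapes in Windows-style paths.
    new_text, n = _KEY_RE.subn(lambda m: f"{m.group(1)} {feat_dir}", config_text)
    if n == 0:
        raise KeyError("segment_feat_root not found in config text")
    return new_text


if __name__ == "__main__":
    cfg = Path("config.yaml")
    if cfg.exists():
        # Placeholder: point this at the directory holding the extracted features.
        cfg.write_text(set_feat_root(cfg.read_text(), "dir_to_save_feature"))
```

Editing the file by hand works just as well; quote the path in YAML if it contains characters such as `: ` or ` #`.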

- Training and Inference

Set SUB_LIST, OUTPUT (the directory for saving checkpoints, logs, and results), and DATASET (["samm" | "cas(me)^2"]) in pipeline.sh, then run:

$ bash pipeline.sh

We also provide checkpoints, logs, etc. for reproducing the results in the paper; please download ckpt.tar.gz.
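Spotting on CAS(ME)^2 and SAMM-LV is commonly evaluated with leave-one-subject-out (LOSO) cross-validation. Assuming SUB_LIST in pipeline.sh enumerates the subjects that are held out one at a time (check the script to confirm), the splits look like this minimal sketch; `loso_splits` and the subject IDs are hypothetical.

```python
# Minimal sketch of leave-one-subject-out (LOSO) splits.
# Assumption: each entry of SUB_LIST is one subject that is held out once
# for testing while the model trains on the remaining subjects.
from typing import Iterator, List, Sequence, Tuple


def loso_splits(subjects: Sequence[str]) -> Iterator[Tuple[List[str], str]]:
    """Yield (train_subjects, test_subject), holding out each subject once."""
    if len(set(subjects)) != len(subjects):
        raise ValueError("subject IDs must be unique")
    for held_out in subjects:
        train = [s for s in subjects if s != held_out]
        yield train, held_out


if __name__ == "__main__":
    # Hypothetical subject IDs; use the ones set in SUB_LIST for your dataset.
    for train, test in loso_splits(["sub01", "sub02", "sub03"]):
        print(f"test={test} train={train}")
```

Because no subject appears in both its training and test fold, LOSO results reflect generalization to unseen people.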

For how the co-occurrence matrix is built, check make_coc_matrix.py.
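make_coc_matrix.py is the reference. As a rough illustration only, the sketch below shows one common way to turn label statistics into a graph adjacency: conditional co-occurrence probabilities, as popularized by ML-GCN. It assumes binary AU labels of shape (num_samples, num_aus); `au_cooccurrence` and the optional `threshold` are hypothetical, and the repository's script may compute something different.

```python
# Sketch of an AU co-occurrence matrix as conditional probabilities,
# A[i, j] = P(AU j active | AU i active). Assumption: labels are binary
# with shape (num_samples, num_aus); make_coc_matrix.py may differ.
from typing import Optional

import numpy as np


def au_cooccurrence(labels: np.ndarray, threshold: Optional[float] = None) -> np.ndarray:
    """Return the (num_aus, num_aus) conditional co-occurrence matrix."""
    y = np.asarray(labels, dtype=np.float64)
    both = y.T @ y                  # both[i, j]: samples where AU i and AU j co-occur
    occur = np.diag(both)           # occur[i]: samples where AU i occurs
    # AUs that never occur have an all-zero row, so dividing by 1 keeps them at 0.
    cond = both / np.maximum(occur, 1.0)[:, None]
    if threshold is not None:
        # Optional binarization to suppress rare, noisy co-occurrences.
        cond = (cond >= threshold).astype(np.float64)
    return cond


if __name__ == "__main__":
    # Hypothetical labels: 4 samples x 3 AUs.
    labels = np.array([[1, 1, 0],
                       [1, 0, 0],
                       [0, 1, 1],
                       [0, 0, 0]])
    print(au_cooccurrence(labels))
```

The matrix is asymmetric by design: in the example, AU 2 always co-occurs with AU 1 (A[2, 1] = 1), while AU 1 co-occurs with AU 2 only half the time (A[1, 2] = 0.5).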

The feature-extraction code is in ./feature_extraction.

- Download the model checkpoints (checkpoint.zip), extract them into the feature_extraction dir, and move the feature_extraction/checkpoint/Resnet50_Final.pth file to the feature_extraction/retinaface dir.

- Set the paths and other settings in config.yaml.

- Run new_all.py.
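The checkpoint step can also be scripted. The sketch below only moves the file named in the steps above and confirms where it ends up; `place_retinaface_weights` is a hypothetical helper, and it assumes checkpoint.zip has already been extracted into feature_extraction so that feature_extraction/checkpoint/Resnet50_Final.pth exists.

```python
# Hypothetical helper automating the checkpoint step. Paths are the ones given
# in the instructions; pass the repository root as repo_root.
import shutil
from pathlib import Path


def place_retinaface_weights(repo_root: Path) -> Path:
    """Move Resnet50_Final.pth from feature_extraction/checkpoint to feature_extraction/retinaface."""
    fe = repo_root / "feature_extraction"
    src = fe / "checkpoint" / "Resnet50_Final.pth"
    dst = fe / "retinaface" / "Resnet50_Final.pth"
    if dst.exists():  # already in place: nothing to do
        return dst
    if not src.exists():
        raise FileNotFoundError(f"{src} not found; extract checkpoint.zip into {fe} first")
    dst.parent.mkdir(parents=True, exist_ok=True)
    shutil.move(str(src), str(dst))
    return dst
```

For example, call `place_retinaface_weights(Path("."))` from the repository root; it returns the destination path and does nothing if the weights are already there.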

Special credit to whcold, as this part of the code was mainly written by him.

If you find this project helpful to your research, please cite our work.

@inproceedings{yin2023aware,
  title={AU-aware graph convolutional network for Macro- and Micro-expression spotting},
  author={Yin, Shukang and Wu, Shiwei and Xu, Tong and Liu, Shifeng and Zhao, Sirui and Chen, Enhong},
  booktitle={2023 IEEE International Conference on Multimedia and Expo (ICME)},
  pages={228--233},
  year={2023},
  organization={IEEE}
}