forked from taichi-dev/taichi


Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

[llvm] Fix broken llvm module on CUDA backend when TI_LLVM_15 is on (t…

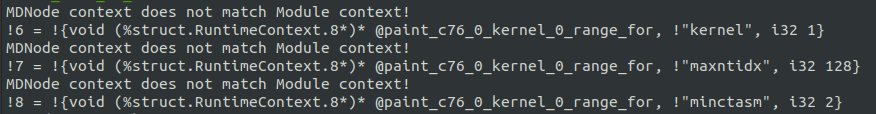

…aichi-dev#6458) Issue: taichi-dev#6447 ### Brief Summary Previously we inject some cuda kernel metadata after linking, that was problematic since when you modify metadata for a llvm::Function you'll have to use the exactly same LLVMContext it was created in. Thus we failed verifyModule check from LLVM15. And we happened to only TI_WARN there so didn't notice this issue.  This PR fixes it by moving the mark as CUDA step up to parallel compilation so that it was guaranteed to be in the same LLVMContext.
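The ownership rule behind this fix can be illustrated with a small, self-contained toy model. It is not LLVM's API: `ToyContext`, `ToyFunction`, `ToyMetadata`, `verify`, `mark_kernel_in`, and `mark_kernel` are hypothetical stand-ins for `llvm::LLVMContext`, `llvm::Function`, metadata nodes, `verifyModule`, and the old and new marking steps. The sketch only models the "metadata must come from the function's own context" check, not how LLVM actually stores or uniques metadata.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for llvm::LLVMContext (not the real LLVM API).
struct ToyContext {};

// Hypothetical metadata node that remembers which context created it.
struct ToyMetadata {
  const ToyContext *owner;  // context the node was created in
  std::string kind;         // e.g. "kernel"
};

// Hypothetical function that remembers which context created it.
struct ToyFunction {
  const ToyContext *owner;  // context the function was created in
  std::string name;
  std::vector<ToyMetadata> annotations;
};

// Toy verifier: every annotation must come from the function's own context.
bool verify(const ToyFunction &f) {
  for (const auto &md : f.annotations) {
    if (md.owner != f.owner) return false;
  }
  return true;
}

// Old pattern (toy): metadata is created in whatever context the caller holds,
// e.g. the context used after linking, which may differ from the function's.
void mark_kernel_in(const ToyContext &ctx, ToyFunction &f) {
  f.annotations.push_back({&ctx, "kernel"});
}

// New pattern (toy): metadata is always created from the function's own
// context, as when marking happens during per-task compilation.
void mark_kernel(ToyFunction &f) {
  f.annotations.push_back({f.owner, "kernel"});
}
```

For example, a function created in a worker's context and then marked with metadata from a separate linking context fails `verify`, while the same function marked through `mark_kernel` passes, because the annotation is guaranteed to share the function's context.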


Showing 3 changed files with 10 additions and 7 deletions.
