Ansible playbook that installs a CDH 4.6.0 Hadoop cluster (running on Java 7, supported from CDH 4.4), with HBase, Hive, Presto for analytics, and Ganglia, Smokeping, Fluentd, Elasticsearch and Kibana for monitoring and centralized log indexing.


- Ansible 1.5 or later (`pip install ansible`)

- 6 + 1 Ubuntu 12.04 LTS/13.04/13.10 or Debian "wheezy" hosts; see ubuntu-netboot-tftp if you need automated server installation

- Mandrill username and API key for sending email notifications

- `ansible` user in the sudo group without a sudo password prompt (see the Bootstrapping section below)

Cloudera (CDH4) Hadoop Roles

If you're assembling your own Hadoop playbook, these roles are available for you to reuse:

- `cdh_common` - sets up Cloudera's Ubuntu repository and key
- `cdh_hadoop_common` - common packages shared by all Hadoop nodes
- `cdh_hadoop_config` - common configuration shared by all Hadoop nodes
- `cdh_hadoop_datanode` - installs Hadoop DataNode
- `cdh_hadoop_journalnode` - installs Hadoop JournalNode
- `cdh_hadoop_mapreduce` - installs Hadoop MapReduce
- `cdh_hadoop_mapreduce_historyserver` - installs Hadoop MapReduce history server
- `cdh_hadoop_namenode` - installs Hadoop NameNode
- `cdh_hadoop_yarn_nodemanager` - installs Hadoop YARN node manager
- `cdh_hadoop_yarn_proxyserver` - installs Hadoop YARN proxy server
- `cdh_hadoop_yarn_resourcemanager` - installs Hadoop YARN resource manager
- `cdh_hadoop_zkfc` - installs Hadoop ZooKeeper Failover Controller
- `cdh_hbase_common` - common packages shared by all HBase nodes
- `cdh_hbase_config` - common configuration shared by all HBase nodes
- `cdh_hbase_master` - installs HBase Master
- `cdh_hbase_regionserver` - installs HBase RegionServer
- `cdh_hive_common` - common packages shared by all Hive nodes
- `cdh_hive_config` - common configuration shared by all Hive nodes
- `cdh_hive_metastore` - installs Hive metastore (with PostgreSQL database)
- `cdh_zookeeper_server` - installs ZooKeeper Server

Facebook Presto Roles

- `presto_common` - downloads Presto to `/usr/local/presto` and prepares the node configuration
- `presto_coordinator` - installs Presto coordinator config
- `presto_worker` - installs Presto worker config

Set the following variables using `--extra-vars` or by editing `group_vars/all`:

Required:

- `site_name` - used as the Hadoop nameservice ID and in various directory names. Alphanumeric only.
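The following is a minimal pre-flight check for the "alphanumeric only" rule. It assumes you call `ansible-playbook` directly with the repository's `hosts` inventory and `site.yml`. The `site.sh` wrapper may already do this for you and may pass arguments differently. The `is_valid_site_name` helper and the value `mycluster` are hypothetical examples and are not part of the playbook.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the playbook): succeed only if $1 is a
# non-empty, purely alphanumeric string, matching the rule for site_name.
is_valid_site_name() {
  [[ "$1" =~ ^[A-Za-z0-9]+$ ]]
}

# Example usage ("mycluster" is an arbitrary example value):
#   is_valid_site_name mycluster && \
#     ansible-playbook -i hosts site.yml --extra-vars "site_name=mycluster"
```

Values passed with `--extra-vars` take precedence over `group_vars/all`, so a one-off override does not require editing that file.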

Optional:

- Network interface: if you'd like to use a different IP address per host (e.g. an internal interface), edit `site.yml` and change `set_fact: ipv4_address=...` to determine the correct IP address to use per host. If this fact is not set, `ansible_default_ipv4.address` will be used.

- Email notification: `notify_email`, `postfix_domain`, `mandrill_username`, `mandrill_api_key`

- `roles/common`: `kernel_swappiness` (0), `nofile` limits, NTP servers and `rsyslog_polling_interval_secs` (10)

- `roles/2_aggregated_links`: `bond_mode` (balance-alb) and `mtu` (9216)

- `roles/cdh_hadoop_config`: `dfs_blocksize` (268435456), `max_xcievers` (4096), `heapsize` (12278)
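`dfs_blocksize` is given in bytes, so the default of 268435456 corresponds to 256 MiB (256 × 1024 × 1024). The sketch below converts between MiB and bytes, which is useful if you want to override the block size. It also counts how many HDFS blocks a file of a given size occupies. The function names are illustrative only and do not come from the playbook.

```shell
#!/usr/bin/env bash
# Illustrative helpers (not part of the playbook) for sizing dfs_blocksize.

# Convert mebibytes to bytes, e.g. for a dfs_blocksize override.
mib_to_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

# Convert a byte count back to whole mebibytes.
bytes_to_mib() {
  echo $(( $1 / 1024 / 1024 ))
}

# Number of HDFS blocks a file of $1 bytes occupies with block size $2
# (ceiling division: a partially filled last block still counts).
hdfs_blocks() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

bytes_to_mib 268435456            # default dfs_blocksize -> 256
mib_to_bytes 128                  # -> 134217728
hdfs_blocks 1000000000 268435456  # a 1 GB file -> 4 blocks
```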

Edit the `hosts` file and list the hosts for each group (see the Ansible Inventory documentation for more examples):

    [datanodes]
    hslave010
    hslave[090:252]
    hadoop-slave-[a:f].example.com
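Ansible expands bracketed ranges in inventory host patterns. A numeric range such as `[090:252]` keeps the leading zeros, and `[a:f]` is an alphabetic range. The sketch below only mimics that expansion so you can count the hosts a pattern produces before running the playbook. `expand_numeric` and `expand_alpha` are hypothetical helpers, not Ansible code, and each handles a single range per pattern.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that mimic Ansible's inventory range expansion for
# a single range per pattern; intended only for counting/checking hosts.

# expand_numeric PREFIX START END SUFFIX
# Zero-pads to the width of START, so 090..252 -> 090, 091, ..., 252.
expand_numeric() {
  local prefix=$1 start=$2 end=$3 suffix=$4 i
  # 10# forces base 10 so a leading zero is not read as octal.
  for (( i = 10#$start; i <= 10#$end; i++ )); do
    printf '%s%0*d%s\n' "$prefix" "${#start}" "$i" "$suffix"
  done
}

# expand_alpha PREFIX FIRST LAST SUFFIX (single lowercase letters)
expand_alpha() {
  local prefix=$1 first=$2 last=$3 suffix=$4 c
  for c in {a..z}; do
    [[ "$c" < "$first" || "$c" > "$last" ]] && continue
    printf '%s%s%s\n' "$prefix" "$c" "$suffix"
  done
}

expand_numeric hslave 090 252 "" | wc -l           # 163 hosts
expand_alpha hadoop-slave- a f .example.com | head -n 2
```

Under the same expansion rules, the `[datanodes]` example above lists 170 hosts in total (1 + 163 + 6).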

Make sure that the zookeepers and journalnodes groups contain at least 3 hosts and have an odd number of hosts.
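The reason for this rule is that ZooKeeper and the HDFS JournalNodes both need a majority (quorum) of their members to be available. A group of 2f + 1 hosts therefore tolerates f failures. Growing a group from 3 to 4 hosts raises the quorum from 2 to 3 but still tolerates only one failure. The minimal check below assumes you count the hosts per group yourself. `check_quorum_group` is a hypothetical helper, not part of the playbook.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for quorum-based groups
# (zookeepers, journalnodes): require an odd host count of at least 3.
# Usage: check_quorum_group NAME COUNT
check_quorum_group() {
  local name=$1 count=$2
  if (( count < 3 || count % 2 == 0 )); then
    echo "$name: $count host(s); need an odd number >= 3" >&2
    return 1
  fi
  # Majority quorum is count/2 + 1; the group survives (count - 1)/2 failures.
  echo "$name: $count hosts, quorum $(( count / 2 + 1 )), tolerates $(( (count - 1) / 2 )) failure(s)"
}

check_quorum_group zookeepers 3
check_quorum_group journalnodes 4 || echo "fix the journalnodes group"
```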

Since we're using unicast mode for Ganglia (which significantly reduces chatter), you may have to wait 60 seconds after a node starts up before it shows up in the web interface.

To run Ansible:

`./site.sh`

To install only ZooKeeper, for example, pass the `zookeeper` tag as an argument (available tags: apache, bonding, configuration, elasticsearch, elasticsearch_curator, fluentd, ganglia, hadoop, hbase, hive, java, kibana, ntp, postfix, postgres, presto, rsyslog, tdagent, zookeeper):

`./site.sh zookeeper`

The playbook also sets up:

- sysctl tuning to improve performance

- link aggregation, configured if 2 interfaces are available

- htop, curl, checkinstall, heirloom-mailx, intel-microcode/amd64-microcode, net-tools, zip

- NTP configured with the Oxford University NTP service by default

- Postfix with Mandrill configuration

- unattended upgrades, with an email reporting success or failure

- php5-cli, sysstat, hddtemp to report device metrics (reads/writes/temp) to Ganglia every 10 minutes

- LZO (Lempel–Ziv–Oberhumer) and Google Snappy 1.1.1 compression

- a fork of OpenJDK's FloatingDecimal that avoids the monitor contention caused by a static synchronized method when parsing doubles

- Elasticsearch Curator, run daily at 2:00 AM via cron; by default it keeps at most 30 GB of data in Elasticsearch

- Elasticsearch Marvel, to monitor your Elasticsearch cluster's heartbeat

- SmokePing to keep track of network latency

After the installation, go here:

- Ganglia at monitor01/ganglia

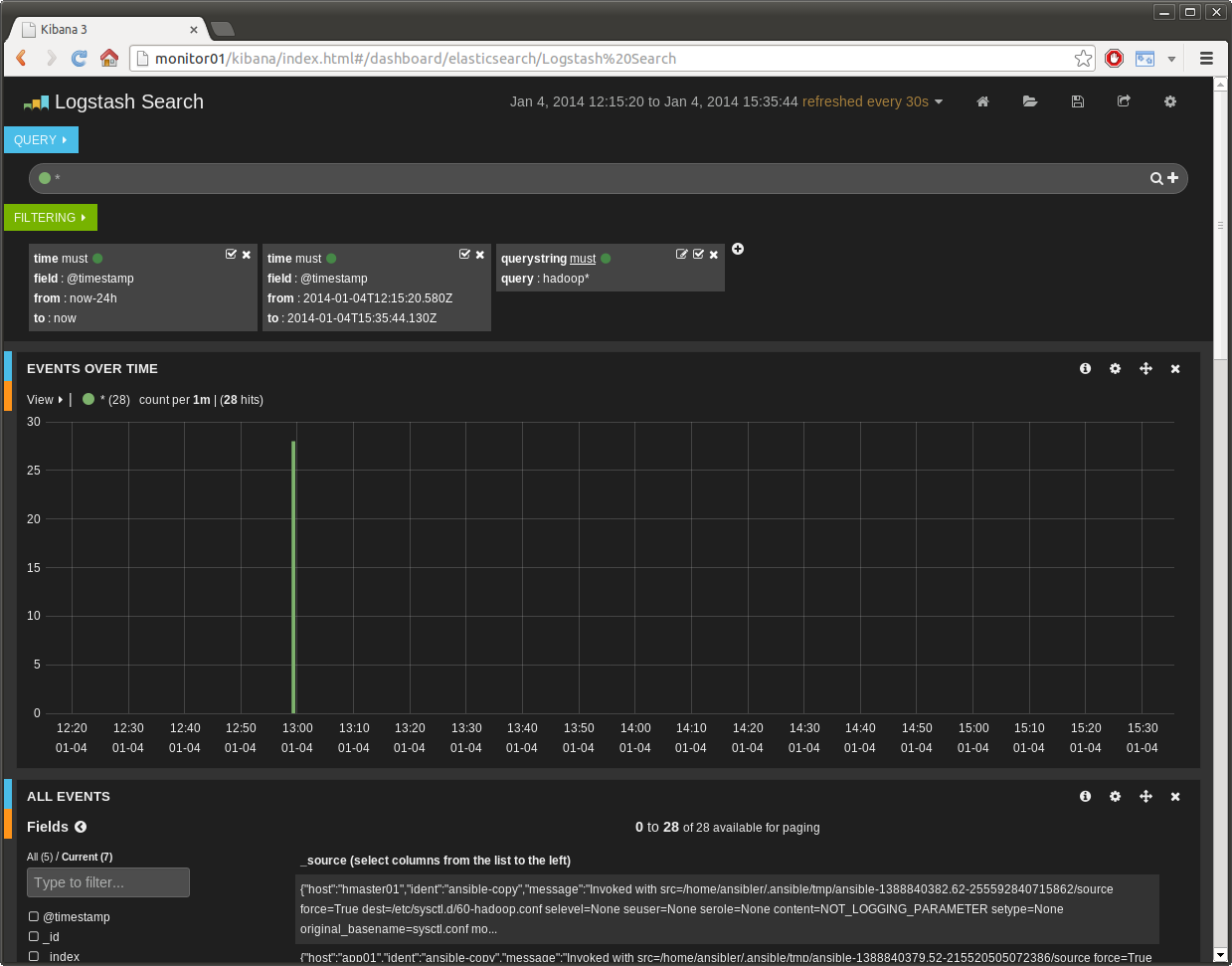

- Kibana at monitor01/kibana/index.html#/dashboard/file/logstash.json

- Smokeping at monitor01/smokeping/smokeping.cgi

- Active NameNode at hmaster01:50070

- Standby NameNode at hmaster02:50070

- Presto at hmaster02:8081 - Presto coordinator

To test the performance of your CDH4 cluster:

- SSH into one of the machines.

- Change to the `hdfs` user: `sudo su - hdfs`

- Set HADOOP_MAPRED_HOME: `export HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce`

- `cd /usr/lib/hadoop-mapreduce`

- Run TeraGen: `hadoop jar hadoop-mapreduce-examples.jar teragen -Dmapred.map.tasks=1000 10000000000 /tera/in`

- Run TeraSort: `hadoop jar hadoop-mapreduce-examples.jar terasort /tera/in /tera/out`

- Run the TestDFSIO write benchmark: `hadoop jar hadoop-mapreduce-client-jobclient-2.0.0-cdh4.6.0-tests.jar TestDFSIO -write`
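Here is a back-of-the-envelope sizing of the TeraGen run. Each TeraGen row is 100 bytes, so 10000000000 rows come to 10^12 bytes (1 TB) before HDFS replication. The sketch assumes three things: TeraGen splits the rows evenly over the 1000 requested map tasks, each task writes one output file, and `dfs_blocksize` keeps its default of 268435456 bytes. The variable names are illustrative.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope sizing for:
#   teragen -Dmapred.map.tasks=1000 10000000000 /tera/in
ROWS=10000000000          # rows requested from TeraGen
ROW_BYTES=100             # TeraGen writes fixed 100-byte rows
MAPS=1000                 # assumes all 1000 requested map tasks run
DFS_BLOCKSIZE=268435456   # default from roles/cdh_hadoop_config (256 MiB)

total_bytes=$(( ROWS * ROW_BYTES ))
bytes_per_map=$(( total_bytes / MAPS ))
# Ceiling division: a partially filled last block is still a block.
blocks_per_map=$(( (bytes_per_map + DFS_BLOCKSIZE - 1) / DFS_BLOCKSIZE ))

echo "total data:   $total_bytes bytes"
echo "per map task: $bytes_per_map bytes, $blocks_per_map blocks"
echo "total blocks: $(( blocks_per_map * MAPS )) (before replication)"
```

TeraSort then writes a sorted copy of the same data to `/tera/out`, so plan free HDFS capacity for both directories.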

Paste your public SSH RSA key into `bootstrap/ansible_rsa.pub` and run `bootstrap.sh` to bootstrap the nodes specified in `bootstrap/hosts`. See `bootstrap/bootstrap.yml` for more information.
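Before bootstrapping, it can help to confirm that `bootstrap/ansible_rsa.pub` really holds a single RSA public key (one `ssh-rsa ...` line) rather than a private key or a different key type. This is a minimal sketch. `is_rsa_pubkey` is a hypothetical helper, and the key path in the usage comment is only an example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if file $1 holds a single line that starts
# with the "ssh-rsa " key type followed by base64 key data.
is_rsa_pubkey() {
  local file=$1
  [[ -f "$file" ]] || return 1
  # wc -l counts newlines: a one-line file reports 1 (or 0 without a final newline).
  [[ $(wc -l < "$file") -le 1 ]] || return 1
  grep -Eq '^ssh-rsa [A-Za-z0-9+/=]+( .*)?$' "$file"
}

# Example usage (the key path is an example, not a project convention):
#   ssh-keygen -t rsa -b 4096 -f ~/.ssh/ansible_rsa
#   cp ~/.ssh/ansible_rsa.pub bootstrap/ansible_rsa.pub
#   is_rsa_pubkey bootstrap/ansible_rsa.pub && echo "key looks OK; run bootstrap.sh"
```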

You can manually install additional components after running this playbook. Follow the official CDH4 Installation Guide.

Licensed under the Apache License, Version 2.0.

Copyright 2013-2014 Mathias Bogaert.