Domain-Guided Conditional Diffusion Model for Unsupervised Domain Adaptation (Accepted by Neural Networks)

Official PyTorch implementation of *Domain-Guided Conditional Diffusion Model for Unsupervised Domain Adaptation* (DCDM).

Yulong Zhang*, Shuhao Chen*, Weisen Jiang, Yu Zhang, Jiangang Lu, James T. Kwok.

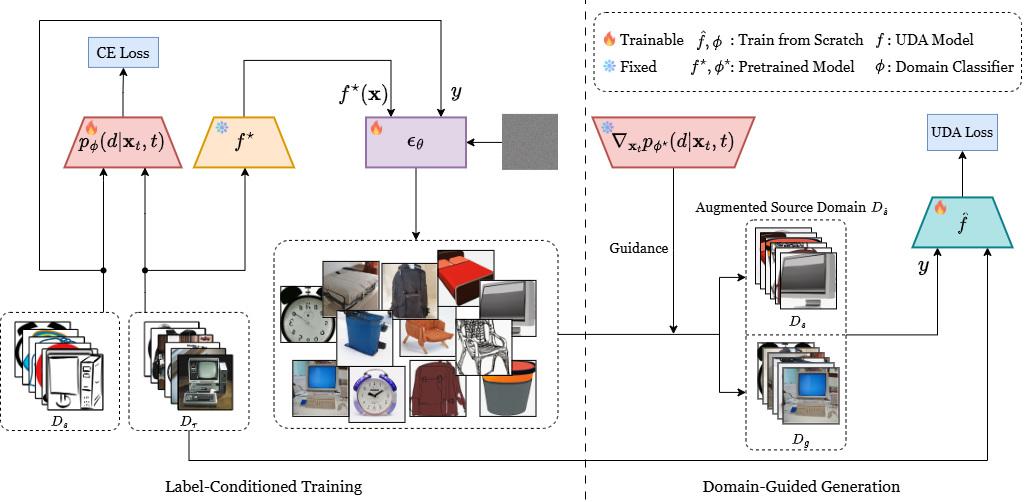

Limited transferability hinders the performance of a well-trained deep learning model when it is applied to new application scenarios. Recently, Unsupervised Domain Adaptation (UDA) has made significant progress on this issue by learning domain-invariant features. However, the performance of existing UDA methods is constrained by potentially large domain shifts and limited target-domain data. To alleviate these issues, we propose a Domain-guided Conditional Diffusion Model (DCDM), which generates high-fidelity target-domain samples and thereby makes the transfer from the source domain to the target domain easier. DCDM uses class information to control the labels of the generated samples, and a domain classifier to guide the generated samples toward the target domain. Extensive experiments on various benchmarks demonstrate that DCDM substantially improves UDA performance.
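DCDM combines two signals during sampling: class information that conditions the noise predictor, and a domain classifier whose gradient steers samples toward the target domain. Since this implementation builds on guided-diffusion, the sketch below assumes domain guidance enters through the classifier-guidance rule from that line of work, $\hat\epsilon = \epsilon_\theta(x_t, t, y) - s\sqrt{1-\bar\alpha_t}\,\nabla_{x_t}\log p_\phi(\text{target}\mid x_t)$. It is not code from this repository. To keep it self-contained, the domain classifier is a hypothetical logistic model `sigmoid(w @ x + b)` in NumPy rather than a neural network, the class-conditional noise prediction is replaced by random noise, and the function names (`domain_log_prob_grad`, `guided_eps`, `predict_x0`) are illustrative only.

```python
import numpy as np


def domain_log_prob_grad(x_t, w, b):
    """Gradient of log p(target | x_t) for a hypothetical logistic domain classifier.

    p(target | x) = sigmoid(w . x + b), so d/dx log sigmoid(z) = (1 - sigmoid(z)) * w.
    """
    z = x_t @ w + b                          # per-sample logits, shape (batch,)
    p_target = 1.0 / (1.0 + np.exp(-z))
    return (1.0 - p_target)[:, None] * w[None, :]


def guided_eps(eps, x_t, alpha_bar_t, w, b, scale=1.0):
    """Classifier-guidance-style shift of the predicted noise toward the target domain.

    eps_hat = eps - scale * sqrt(1 - alpha_bar_t) * grad_x log p(target | x_t)
    """
    grad = domain_log_prob_grad(x_t, w, b)
    return eps - scale * np.sqrt(1.0 - alpha_bar_t) * grad


def predict_x0(x_t, eps, alpha_bar_t):
    """Standard DDPM/DDIM estimate of the clean sample from x_t and predicted noise."""
    return (x_t - np.sqrt(1.0 - alpha_bar_t) * eps) / np.sqrt(alpha_bar_t)


# Usage with toy data.
rng = np.random.default_rng(0)
x_t = rng.normal(size=(4, 8))
eps = rng.normal(size=(4, 8))   # stand-in for a class-conditional eps_theta(x_t, t, y)
w, b = rng.normal(size=8), 0.0  # hypothetical domain-classifier parameters
alpha_bar_t = 0.5

x0_plain = predict_x0(x_t, eps, alpha_bar_t)
x0_guided = predict_x0(x_t, guided_eps(eps, x_t, alpha_bar_t, w, b, scale=2.0), alpha_bar_t)
# Guidance raises the classifier's target-domain logit of every predicted clean sample.
print((x0_guided @ w > x0_plain @ w).all())  # True
```

Setting `scale=0` recovers the unguided noise prediction; larger values push the estimated clean sample further toward the region the classifier labels as target domain.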

Scripts are in the `scripts` directory. Code is provided for the following UDA methods: MCC, ELS, and SSRT.

If you have any problems with our code or any suggestions (including feature requests), feel free to contact:

- Yulong Zhang ([email protected])

or open an issue.

Our implementation is based on ED-DPM, Guided-diffusion, and dpm-solver.

If you find our paper or codebase useful, please consider citing us as:

@article{zhang2023domain,

title = {Domain-guided conditional diffusion model for unsupervised domain adaptation},

author = {Yulong Zhang and Shuhao Chen and Weisen Jiang and Yu Zhang and Jiangang Lu and James T. Kwok},

journal = {Neural Networks},

pages = {107031},

year = {2024},

issn = {0893-6080},

doi = {10.1016/j.neunet.2024.107031}

}