forked from facebookresearch/DensePose



draft for 2019 COCO DensePose challenge


Showing 5 changed files with 363 additions and 0 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,96 @@ | ||

| # Data Format | ||

|

|

||

| The annotations are stored in [JSON](http://json.org/). Please note that | ||

| [COCO API](https://github.com/cocodataset/cocoapi) described on the | ||

| [download](http://cocodataset.org/#download) page can be used to access | ||

| and manipulate all annotations. | ||

|

|

||

| The annotations file structure is outlined below: | ||

| ``` | ||

| { | ||

| "images" : [image], | ||

| "annotations" : [annotation], | ||

| "categories" : [category] | ||

| } | ||

| image { | ||

| "coco_url" : str, | ||

| "date_captured" : datetime, | ||

| "file_name" : str, | ||

| "flickr_url" : str, | ||

| "id" : int, | ||

| "width" : int, | ||

| "height" : int, | ||

| "license" : int | ||

| } | ||

| annotation { | ||

| "area": float, | ||

| "bbox": [x, y, width, height], | ||

| "category_id": int, | ||

| "dp_I": [float], | ||

| "dp_U": [float], | ||

| "dp_V": [float], | ||

| "dp_masks": [dp_mask], | ||

| "dp_x": [float], | ||

| "dp_y": [float], | ||

| "id": int, | ||

| "image_id": int, | ||

| "iscrowd": 0 or 1, | ||

| "keypoints": [float], | ||

| "segmentation": RLE or [polygon] | ||

| } | ||

| category { | ||

| "id" : int, | ||

| "name" : str, | ||

| "supercategory" : str, | ||

| "keypoints": [str], | ||

| "skeleton": [edge] | ||

| } | ||

| dp_mask { | ||

| "counts": str, | ||

| "size": [int, int] | ||

| } | ||

| ``` | ||

|

|

||

| Each dense pose annotation contains a series of fields, including the category | ||

| id and segmentation mask of the person. The segmentation format depends on | ||

| whether the instance represents a single object (`iscrowd=0` in which case | ||

| polygons are used) or a collection of objects (`iscrowd=1` in which case RLE | ||

| is used). Note that a single object (`iscrowd=0`) may require multiple polygons, | ||

| for example if occluded. Crowd annotations (`iscrowd=1`) are used to label large | ||

| groups of objects (e.g. a crowd of people). In addition, an enclosing bounding | ||

| box is provided for each person (box coordinates are measured from the top left | ||

| image corner and are 0-indexed). | ||

|

|

||
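As a rough illustration of the layout described above, the sketch below parses a tiny, hand-written annotation file and groups its annotations by image. The sample values and the helper names `index_by_image`, `segmentation_kind` and `bbox_corners` are hypothetical; a real file would be read with `json.load` or accessed through the COCO API.

```python
import json
from collections import defaultdict

# Hypothetical, heavily truncated annotation file following the outline above.
# The RLE "counts" value is a placeholder, not real mask data.
SAMPLE = json.loads("""
{
  "images": [{"id": 1, "file_name": "a.jpg", "width": 640, "height": 480}],
  "annotations": [
    {"id": 10, "image_id": 1, "category_id": 1, "iscrowd": 0,
     "bbox": [100.0, 50.0, 80.0, 200.0],
     "segmentation": [[100, 50, 180, 50, 180, 250, 100, 250]]},
    {"id": 11, "image_id": 1, "category_id": 1, "iscrowd": 1,
     "bbox": [0.0, 0.0, 640.0, 120.0],
     "segmentation": {"counts": [0, 76800, 230400], "size": [480, 640]}}
  ],
  "categories": [{"id": 1, "name": "person", "supercategory": "person"}]
}
""")


def index_by_image(dataset):
    """Group annotations by the id of the image they belong to."""
    by_image = defaultdict(list)
    for ann in dataset["annotations"]:
        by_image[ann["image_id"]].append(ann)
    return dict(by_image)


def segmentation_kind(ann):
    """Crowd annotations use RLE; single instances use polygons."""
    return "RLE" if ann["iscrowd"] == 1 else "polygons"


def bbox_corners(ann):
    """Convert [x, y, width, height] (top-left origin) to (x0, y0, x1, y1)."""
    x, y, w, h = ann["bbox"]
    return (x, y, x + w, y + h)
```

Keeping the `[x, y, width, height]` convention in one small helper makes it harder to confuse with corner-style boxes later in a pipeline.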

| The categories field of the annotation structure stores the mapping of category | ||

| id to category and supercategory names. It also has two fields: "keypoints", | ||

| which is a length `k` array of keypoint names, and "skeleton", which defines | ||

| connectivity via a list of keypoint edge pairs and is used for visualization. | ||

|

|

||

| DensePose annotations are stored in `dp_*` fields: | ||

|

|

||

| *Annotated masks*: | ||

|

|

||

| * `dp_masks`: RLE encoded dense masks. All part masks are of size 256x256. | ||

| They correspond to 14 semantically meaningful parts of the body: `Torso`, | ||

| `Right Hand`, `Left Hand`, `Left Foot`, `Right Foot`, `Upper Leg Right`, | ||

| `Upper Leg Left`, `Lower Leg Right`, `Lower Leg Left`, `Upper Arm Left`, | ||

| `Upper Arm Right`, `Lower Arm Left`, `Lower Arm Right`, `Head`; | ||

|

|

||

| *Annotated points*: | ||

|

|

||

| * `dp_x`, `dp_y`: spatial coordinates of collected points on the image. | ||

| The coordinates are scaled such that the bounding box size is 256x256; | ||

| * `dp_I`: The patch index that indicates which of the 24 surface patches the | ||

| point is on. Patches correspond to the body parts described above. Some | ||

| body parts are split into 2 patches: `1, 2 = Torso`, `3 = Right Hand`, | ||

| `4 = Left Hand`, `5 = Left Foot`, `6 = Right Foot`, `7, 9 = Upper Leg Right`, | ||

| `8, 10 = Upper Leg Left`, `11, 13 = Lower Leg Right`, `12, 14 = Lower Leg Left`, | ||

| `15, 17 = Upper Arm Left`, `16, 18 = Upper Arm Right`, `19, 21 = Lower Arm Left`, | ||

| `20, 22 = Lower Arm Right`, `23, 24 = Head`; | ||

| * `dp_U`, `dp_V`: Coordinates in the UV space. Each surface patch has a | ||

| separate 2D parameterization. | ||

|

|
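The point fields above can be combined as in the following sketch, which maps each annotated point back to image pixels and names the body part it lies on. The function name `points_in_image` is hypothetical, and the divisor `scale=256.0` is an assumption taken from the "256x256" wording; reference code may use a slightly different constant, so check it before relying on exact pixel positions.

```python
# Patch index -> body part, copied from the `dp_I` description above.
PATCH_TO_PART = {
    1: "Torso", 2: "Torso",
    3: "Right Hand", 4: "Left Hand",
    5: "Left Foot", 6: "Right Foot",
    7: "Upper Leg Right", 9: "Upper Leg Right",
    8: "Upper Leg Left", 10: "Upper Leg Left",
    11: "Lower Leg Right", 13: "Lower Leg Right",
    12: "Lower Leg Left", 14: "Lower Leg Left",
    15: "Upper Arm Left", 17: "Upper Arm Left",
    16: "Upper Arm Right", 18: "Upper Arm Right",
    19: "Lower Arm Left", 21: "Lower Arm Left",
    20: "Lower Arm Right", 22: "Lower Arm Right",
    23: "Head", 24: "Head",
}


def points_in_image(ann, scale=256.0):
    """Map dp_x/dp_y from the normalized box frame to image pixels.

    `scale` is an assumption: the side length of the normalized box.
    Returns a list of (x, y, part_name) tuples.
    """
    x0, y0, width, height = ann["bbox"]
    points = []
    for px, py, patch in zip(ann["dp_x"], ann["dp_y"], ann["dp_I"]):
        x = x0 + px / scale * width
        y = y0 + py / scale * height
        points.append((x, y, PATCH_TO_PART[int(patch)]))
    return points
```

For example, a point at `dp_x = 128`, `dp_y = 64` in a box `[100, 50, 128, 256]` lands at pixel `(164.0, 114.0)` under this assumption.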


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,71 @@ | ||

| # DensePose Evaluation | ||

|

|

||

| This page describes the DensePose evaluation metrics used by COCO. The | ||

| evaluation code provided here can be used to obtain results on the publicly | ||

| available COCO DensePose validation set. It computes multiple metrics | ||

| described below. To obtain results on the COCO DensePose test set, for which | ||

| ground-truth annotations are hidden, generated results must be uploaded to | ||

| the evaluation server. The exact same evaluation code, described below, is | ||

| used to evaluate results on the test set. | ||

|

|

||

| ## Evaluation Overview | ||

|

|

||

| The multi-person DensePose task involves simultaneous person detection and | ||

| estimation of correspondences between image pixels that belong to a human body | ||

| and a template 3D model. DensePose evaluation mimics the evaluation metrics | ||

| used for [object detection](http://cocodataset.org/#detection-eval) and | ||

| [keypoint estimation](http://cocodataset.org/#keypoints-eval) in the COCO | ||

| challenge, namely average precision (AP) and average recall (AR) and their | ||

| variants. | ||

|

|

||

| At the heart of these metrics is a similarity measure between ground truth | ||

| objects and predicted objects. In the case of object detection, | ||

| *Intersection over Union* (IoU) serves as this similarity measure (for both | ||

| boxes and segments). Thresholding the IoU defines matches between the ground | ||

| truth and predicted objects and allows computing precision-recall curves. | ||

| In the case of keypoint detection *Object Keypoint Similarity* (OKS) is used. | ||

|

|

||

| To adapt AP/AR for dense correspondence, we define an analogous similarity | ||

| measure called *Geodesic Point Similarity* (GPS) which plays the same role | ||

| as IoU for object detection and OKS for keypoint estimation. | ||

|

|

||

| ## Geodesic Point Similarity | ||

|

|

||

| The geodesic point similarity (GPS) is based on geodesic distances on the template mesh between the collected groundtruth points and estimated surface coordinates for the same image points as follows: | ||

|

|

||

| <a href="https://www.codecogs.com/eqnedit.php?latex=\text{GPS}&space;=&space;\frac{1}{|P|}\sum_{p_i&space;\in&space;P}\exp\left&space;(\frac{-{d(\hat{p}_i,p_i)}^2}{2\kappa(p_i)^2}\right)," target="_blank"> | ||

| <img src="https://latex.codecogs.com/gif.latex?\text{GPS}&space;=&space;\frac{1}{|P|}\sum_{p_i&space;\in&space;P}\exp\left&space;(\frac{-{d(\hat{p}_i,p_i)}^2}{2\kappa(p_i)^2}\right)," | ||

| title="https://www.codecogs.com/eqnedit.php?latex=\text{GPS} = \frac{1}{|P|}\sum_{p_i \in P}\exp\left(\frac{-{d(\hat{p}_i,p_i)}^2}{2\kappa(p_i)^2}\right)," /></a> | ||

|

|

||

| where <a href="https://www.codecogs.com/eqnedit.php?latex=&space;d(\hat{p}_i,p_i)&space;" target="_blank"><img src="https://latex.codecogs.com/gif.latex?&space;d(\hat{p}_i,p_i)&space;" title="https://www.codecogs.com/eqnedit.php?latex=d(\hat{p}_i,p_i)" /></a> is the geodesic distance between estimated | ||

| (<a href="https://www.codecogs.com/eqnedit.php?latex=\hat{p}_i" target="_blank"> <img src="https://latex.codecogs.com/gif.latex?\hat{p}_i" title="https://www.codecogs.com/eqnedit.php?latex=\hat{p}_i" /></a>) and groundtruth | ||

| (<a href="https://www.codecogs.com/eqnedit.php?latex=p_i" target="_blank"><img src="https://latex.codecogs.com/gif.latex?p_i" title="https://www.codecogs.com/eqnedit.php?latex=p_i" /></a>) | ||

| human body surface points and | ||

| <a href="https://www.codecogs.com/eqnedit.php?latex=\kappa(p_i)" target="_blank"><img src="https://latex.codecogs.com/gif.latex?\kappa(p_i)" title="https://www.codecogs.com/eqnedit.php?latex=\kappa(p_i)" /></a> | ||

| is a per-part normalization factor, defined as the mean geodesic distance between points on the part. Note that, because of this per-part normalization, the AP numbers do not match those reported in the paper, which were obtained with a fixed κ = 0.255. | ||

|

|

||
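The GPS formula above translates almost directly into code. The sketch below is only an illustration of the formula, not the evaluation implementation: the function name `geodesic_point_similarity` is hypothetical, the geodesic distances are assumed to be given, and returning `0.0` for an empty point set is our own convention.

```python
import math


def geodesic_point_similarity(distances, kappas):
    """Mean over points of exp(-d^2 / (2 * kappa^2)).

    distances: geodesic distance d(p_hat_i, p_i) for each annotated point.
    kappas:    per-part normalization factor kappa(p_i) for each point.
    """
    if len(distances) != len(kappas):
        raise ValueError("distances and kappas must have the same length")
    if not distances:
        return 0.0  # assumption: no annotated points means no similarity
    total = sum(
        math.exp(-(d * d) / (2.0 * k * k)) for d, k in zip(distances, kappas)
    )
    return total / len(distances)
```

A perfect prediction (all distances zero) gives a GPS of 1, and a point whose error equals its normalization factor contributes `exp(-0.5)`, roughly 0.61.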

| ## Metrics | ||

|

|

||

| The following metrics are used to characterize the performance of a dense pose | ||

| estimation algorithm on COCO: | ||

|

|

||

| *Average Precision* | ||

| ``` | ||

| AP % AP averaged over GPS values 0.5 : 0.05 : 0.95 (primary challenge metric) | ||

| AP-50 % AP at GPS=0.5 (loose metric) | ||

| AP-75 % AP at GPS=0.75 (strict metric) | ||

| AP-m % AP for medium detections: 32² < area < 96² | ||

| AP-l % AP for large detections: area > 96² | ||

| ``` | ||

|

|

||
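To make the table concrete, the following sketch enumerates the GPS thresholds behind the primary metric and assigns a detection to a size bucket. All names are hypothetical, and the handling of an area of exactly 96² (counted as medium here) is an assumption, since the table leaves that boundary open.

```python
def gps_thresholds():
    """GPS thresholds 0.5 : 0.05 : 0.95 used by the primary AP metric."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def mean_ap(ap_at_threshold):
    """Average per-threshold AP values, given as {threshold: AP}."""
    thresholds = gps_thresholds()
    return sum(ap_at_threshold[t] for t in thresholds) / len(thresholds)


def size_bucket(area):
    """Classify a detection by area in pixels.

    'small' is returned for completeness only; the table above reports
    no metric for detections of area 32^2 or less.
    """
    if area > 96 ** 2:
        return "large"
    if area > 32 ** 2:
        return "medium"  # assumption: area == 96^2 is counted as medium
    return "small"
```

With this layout, AP-50 and AP-75 are simply the entries of `ap_at_threshold` at `0.5` and `0.75`.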

| ## Evaluation Code | ||

|

|

||

| Evaluation code is available on the | ||

| [DensePose](https://github.com/facebookresearch/DensePose/) GitHub repository; | ||

| see [densepose_cocoeval.py](https://github.com/facebookresearch/DensePose/blob/master/detectron/datasets/densepose_cocoeval.py). | ||

| Before running the evaluation code, please prepare your results in the format | ||

| described on the [results](results_format.md) format page. | ||

| The geodesic distances are pre-computed on a subsampled version of the SMPL | ||

| model to allow faster evaluation. Geodesic distances are computed after | ||

| finding the closest vertices to the estimated UV values in the subsampled mesh. | ||

|

|
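The lookup described in the last paragraph can be pictured with a toy example. This is a sketch under stated assumptions, not the code in `densepose_cocoeval.py`: the three-vertex "mesh", the distance matrix and both function names are invented for illustration, and both the estimated and the ground-truth point are snapped to their nearest vertex on the same part using plain Euclidean distance in UV space.

```python
def closest_vertex(part, u, v, vertices):
    """Index of the vertex on `part` whose (u, v) is nearest to the query.

    vertices: list of (part_index, u, v) tuples for the subsampled mesh.
    """
    best, best_d2 = None, float("inf")
    for idx, (v_part, v_u, v_v) in enumerate(vertices):
        if v_part != part:
            continue
        d2 = (v_u - u) ** 2 + (v_v - v) ** 2
        if d2 < best_d2:
            best, best_d2 = idx, d2
    if best is None:
        raise ValueError("no vertex on part {}".format(part))
    return best


def geodesic_distance(est, gt, vertices, dist):
    """Look up the pre-computed distance between the snapped vertices.

    est, gt: (part_index, u, v) for the estimated and ground-truth point.
    dist:    symmetric matrix of pre-computed geodesic distances.
    """
    i = closest_vertex(est[0], est[1], est[2], vertices)
    j = closest_vertex(gt[0], gt[1], gt[2], vertices)
    return dist[i][j]


# Hypothetical toy mesh: two vertices on part 1, one on part 2.
TOY_VERTICES = [(1, 0.1, 0.1), (1, 0.9, 0.9), (2, 0.5, 0.5)]
TOY_DIST = [[0.0, 0.3, 0.7], [0.3, 0.0, 0.5], [0.7, 0.5, 0.0]]
```

Because the distances are looked up rather than computed, the cost per point is dominated by the nearest-vertex search, which is what makes subsampling the mesh worthwhile.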


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,90 @@ | ||

| # COCO 2019 DensePose Task | ||

|

|

||

|  | ||

|

|

||

| ## Overview | ||

|

|

||

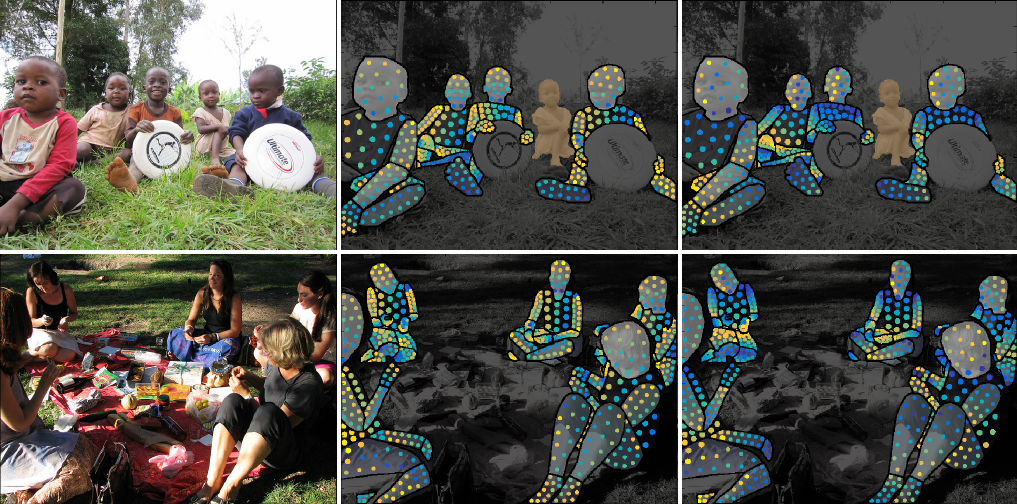

| The COCO DensePose Task requires dense estimation of human pose in challenging, | ||

| uncontrolled conditions. The DensePose task involves simultaneously detecting | ||

| people, segmenting their bodies and mapping all image pixels that belong to a | ||

| human body to the 3D surface of the body. For full details of this task please | ||

| see the [DensePose evaluation](evaluation.md) page. | ||

|

|

||

| This task is part of the | ||

| [Joint COCO and Mapillary Recognition Challenge Workshop](http://cocodataset.org/workshop/coco-mapillary-iccv-2019.html) | ||

| at ICCV 2019. For further details about the joint workshop please | ||

| visit the workshop page. Please also see the related COCO | ||

| [detection](http://cocodataset.org/workshop/coco-mapillary-iccv-2019.html#coco-detection), | ||

| [panoptic](http://cocodataset.org/workshop/coco-mapillary-iccv-2019.html#coco-panoptic) | ||

| and | ||

| [keypoints](http://cocodataset.org/workshop/coco-mapillary-iccv-2019.html#coco-keypoints) | ||

| tasks. | ||

|

|

||

| The COCO train, validation, and test sets, containing more than 39,000 images | ||

| and 56,000 person instances labeled with DensePose annotations are available | ||

| for [download](http://cocodataset.org/#download). | ||

| Annotations on train ( | ||

| [train 1](https://dl.fbaipublicfiles.com/densepose/densepose_coco_2014_train.json), | ||

| [train 2](https://dl.fbaipublicfiles.com/densepose/densepose_coco_2014_valminusminival.json) | ||

| ) and [val](https://dl.fbaipublicfiles.com/densepose/densepose_coco_2014_minival.json) | ||

| with over 48,000 people are publicly available. | ||

| [Test set](https://dl.fbaipublicfiles.com/densepose/densepose_coco_2014_test.json) | ||

| with the list of images is also available for download. | ||

|

|

||

| The evaluation server for the 2019 task is | ||

| [open](https://competitions.codalab.org/competitions/20660). | ||

|

|

||

| ## Dates | ||

|

|

||

| []() | []() | ||

| ---- | ----- | ||

| **October 4, 2019** | Submission deadline (23:59 PST) | ||

| October 11, 2019 | Technical report submission deadline | ||

| October 18, 2019 | Challenge winners notified | ||

| October 27, 2019 | Winners present at ICCV 2019 Workshop | ||

|

|

||

| ## Organizers | ||

|

|

||

| Riza Alp Güler (INRIA, CentraleSupélec) | ||

|

|

||

| Natalia Neverova (Facebook AI Research) | ||

|

|

||

| Vasil Khalidov (Facebook AI Research) | ||

|

|

||

| Iasonas Kokkinos (Facebook AI Research) | ||

|

|

||

| ## Task Guidelines | ||

|

|

||

| Participants are encouraged, but not required, to train | ||

| their algorithms on the COCO DensePose train and val sets. | ||

| The [download](http://cocodataset.org/#download) page has | ||

| links to all COCO data. When participating in this task, | ||

| please specify any and all external data used for training | ||

| in the "method description" when uploading results to the | ||

| evaluation server. A more thorough explanation of all these | ||

| details is available on the | ||

| [guidelines](http://cocodataset.org/#guidelines) page; | ||

| please be sure to review it carefully before participating. | ||

| Results in the [correct format](results_format.md) must be | ||

| [uploaded](upload.md) to the | ||

| [evaluation server](https://competitions.codalab.org/competitions/20660). | ||

| The [evaluation](evaluation.md) page lists detailed information | ||

| regarding how results will be evaluated. Challenge participants | ||

| with the most successful and innovative methods will be invited | ||

| to present at the workshop. | ||

|

|

||

| ## Tools and Instructions | ||

|

|

||

| We provide extensive API support for the COCO images, | ||

| annotations, and evaluation code. To download the COCO DensePose API, | ||

| please visit our | ||

| [GitHub repository](https://github.com/facebookresearch/DensePose/). | ||

| Due to the large size of COCO and the complexity of this task, | ||

| the process of participating may not seem simple. To help, we provide | ||

| explanations and instructions for each step of the process: | ||

| [download](http://cocodataset.org/#download), | ||

| [data format](data_format.md), | ||

| [results format](results_format.md), | ||

| [upload](upload.md) and [evaluation](evaluation.md) pages. | ||

| For additional questions, please contact [email protected]. | ||

|

|


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,42 @@ | ||

| # Results Format | ||

|

|

||

| This page describes the results format used by the COCO DensePose evaluation | ||

| procedure. The results format mimics the annotation format detailed on | ||

| the [data format](data_format.md) page. Please review the annotation | ||

| format before proceeding. | ||

|

|

||

| Each algorithmically generated result is stored separately in its own | ||

| result struct. This singleton result struct must contain the id of the | ||

| image from which the result was generated (a single image will typically | ||

| have multiple associated results). Results for the whole dataset are | ||

| aggregated in a single array. Finally, this entire result struct array | ||

| is stored to disk as a single JSON file (saved via | ||

| [gason](https://github.com/cocodataset/cocoapi/blob/master/MatlabAPI/gason.m) | ||

| in Matlab or [json.dump](https://docs.python.org/2/library/json.html) in Python). | ||

|

|

||

| Example result JSON files are available in | ||

| [example results](example_results.json). | ||

|

|

||

| The data struct for each of the result types is described below. The format | ||

| of the individual fields below (`category_id`, `bbox`, etc.) is the same as | ||

| for the annotation (for details see the [data format](data_format.md) page). | ||

| Bounding box coordinates `bbox` are floats measured from the top left image | ||

| corner (and are 0-indexed). We recommend rounding coordinates to the nearest | ||

| tenth of a pixel to reduce the resulting JSON file size. The dense estimates | ||

| of patch indices and coordinates in the UV space for the specified bounding | ||

| box are stored in `uv_shape` and `uv_data` fields. | ||

| `uv_shape` contains the shape of the `uv_data` array; it should be of size | ||

| `(3, height, width)`, where `height` and `width` should match the bounding box | ||

| size. `uv_data` should contain PNG-compressed patch indices and U and V | ||

| coordinates scaled to the range `0-255`. | ||

|

|

||
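A single result struct could be assembled roughly as follows. This is a minimal sketch, not the provided conversion script: the PNG is written by hand with the standard library so the example stays self-contained, and several details are assumptions that should be checked against the example results file, namely the Base64 text encoding of the PNG bytes, the plane order (patch index, U, V), the `score` field, and `category_id = 1` for the person category. The names `encode_png_rgb` and `make_result` are hypothetical.

```python
import base64
import json
import struct
import zlib


def _chunk(tag, data):
    """Serialize one PNG chunk: length, tag, payload, CRC-32."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def encode_png_rgb(planes):
    """Encode three equally sized uint8 planes as an 8-bit RGB PNG."""
    height, width = len(planes[0]), len(planes[0][0])
    raw = bytearray()
    for y in range(height):
        raw.append(0)  # filter type 0 (none) starts every scanline
        for x in range(width):
            raw.extend(plane[y][x] for plane in planes)
    # IHDR: width, height, bit depth 8, color type 2 (RGB), no interlace.
    header = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    return (b"\x89PNG\r\n\x1a\n" + _chunk(b"IHDR", header)
            + _chunk(b"IDAT", zlib.compress(bytes(raw), 9))
            + _chunk(b"IEND", b""))


def make_result(image_id, bbox, patch_idx, u, v, score=1.0):
    """Build one result struct; u and v hold floats in [0, 1]."""
    def to_byte(rows):
        return [[int(round(val * 255)) for val in row] for row in rows]

    planes = [patch_idx, to_byte(u), to_byte(v)]  # assumed plane order
    height, width = len(patch_idx), len(patch_idx[0])
    return {
        "image_id": image_id,
        "category_id": 1,                      # assumption: person category
        "bbox": [round(c, 1) for c in bbox],   # nearest tenth of a pixel
        "score": score,                        # assumption: detection score
        "uv_shape": [3, height, width],
        "uv_data": base64.b64encode(encode_png_rgb(planes)).decode("ascii"),
    }
```

Results for the whole dataset would then be collected in one list and written with `json.dump(results, f)`.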

| An example of code that generates results in the form of a `pkl` file can | ||

| be found in | ||

| [json_dataset_evaluator.py](https://github.com/facebookresearch/DensePose/blob/master/detectron/datasets/json_dataset_evaluator.py). | ||

| We also provide an [example script](../encode_results_for_competition.py) to convert | ||

| dense pose estimation results stored in a `pkl` file into a PNG-compressed | ||

| JSON file. | ||

|

|

||

|

|

||

|

|

||

|

|


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,64 @@ | ||

| # Upload Results to Evaluation Server | ||

|

|

||

| This page describes the upload instructions for submitting results to the | ||

| evaluation servers for the COCO DensePose challenge. Submitting results allows | ||

| you to participate in the challenges and compare results to the | ||

| state-of-the-art on the public leaderboards. Note that you can obtain results | ||

| on val by running the | ||

| [evaluation code](https://github.com/facebookresearch/DensePose/blob/master/detectron/datasets/densepose_cocoeval.py) | ||

| locally. One can also take advantage of the | ||

| [vkhalidov/densepose-codalab](https://hub.docker.com/r/vkhalidov/densepose-codalab/) | ||

| docker image which was tailored specifically for evaluation. | ||

| Submitting to the evaluation server provides results on the val and | ||

| test sets. We now give detailed instructions for submitting to the evaluation | ||

| server: | ||

|

|

||

| 1. Create an account on CodaLab. This will allow you to participate in all COCO challenges. | ||

|

|

||

| 2. Carefully review the [guidelines](http://cocodataset.org/#guidelines) for | ||

| entering the COCO challenges and using the test sets. | ||

|

|

||

| 3. Prepare a JSON file containing your results in the correct | ||

| [results format](results_format.md) for the challenge you wish to enter. | ||

|

|

||

| 4. File naming: the JSON file should be named `densepose_[subset]_[alg]_results.json`. | ||

| Replace `[subset]` with the subset you are using (`val` or `test`), | ||

| and `[alg]` with your algorithm name. Finally, place the JSON | ||

| file into a zip file named `densepose_[subset]_[alg]_results.zip`. | ||

|

|

||

| 5. To submit your zipped result file to the COCO DensePose Challenge, click on | ||

| the “Participate” tab on the | ||

| [CodaLab evaluation server](https://competitions.codalab.org/competitions/20660) page. | ||

| When you select “Submit / View Results” on the left panel, you will be able to choose | ||

| the subset. Please fill in the required fields and click “Submit”. A pop-up will | ||

| prompt you to select the results zip file for upload. After the file is uploaded | ||

| the evaluation server will begin processing. To view the status of your submission | ||

| please select “Refresh Status”. Please be patient: the evaluation may take quite | ||

| some time to complete (from ~20m to a few hours). If the status of your submission | ||

| is “Failed”, please check that your file is named correctly and has the right format. | ||

|

|

||

| 6. Please enter submission information into Codalab. The most important fields | ||

| are "Team name", "Method description", and "Publication URL", which are used | ||

| to populate the COCO leaderboard. Additionally, under "user setting" in the | ||

| upper right, please add "Team members". There have been issues with the | ||

| "Method description" field, so we may collect these via email if necessary. These | ||

| settings are not intuitive, but we have no control over the CodaLab website. | ||

| For the "Method description", especially for COCO DensePose challenge entries, | ||

| we encourage participants to give detailed method information that will help | ||

| the award committee invite participants with the most innovative methods. | ||

| Listing external data used is mandatory. You may also consider giving some | ||

| basic performance breakdowns on the test subset (e.g., single model versus | ||

| ensemble results), runtime, or any other information you think may be pertinent | ||

| to highlight the novelty or efficacy of your method. | ||

|

|

||

| 7. After you submit your results to the test-dev eval server, you can control | ||

| whether your results are publicly posted to the CodaLab leaderboard. To toggle | ||

| the public visibility of your results please select either “post to leaderboard” | ||

| or “remove from leaderboard”. Only one result can be published to the leaderboard | ||

| at any time. | ||

|

|

||

| 8. After evaluation is complete and the server shows a status of “Finished”, | ||

| you will have the option to download your evaluation results by selecting | ||

| “Download evaluation output from scoring step.” The zip file will contain the | ||

| score file `scores.txt`. | ||

|

|
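The file-naming rule from step 4 is easy to get wrong by hand, so it may help to script it. The sketch below writes the results list to `densepose_[subset]_[alg]_results.json` and wraps it in a zip archive of the matching name; the function name `package_results` and its arguments are hypothetical.

```python
import json
import os
import zipfile


def package_results(results, subset, alg, out_dir):
    """Write the results JSON and zip it using the required file names.

    results: list of result structs (already JSON-serializable).
    subset:  "val" or "test".
    alg:     algorithm name chosen by the participant.
    Returns the path of the zip file to upload.
    """
    if subset not in ("val", "test"):
        raise ValueError("subset must be 'val' or 'test'")
    stem = "densepose_{}_{}_results".format(subset, alg)
    json_path = os.path.join(out_dir, stem + ".json")
    zip_path = os.path.join(out_dir, stem + ".zip")
    with open(json_path, "w") as f:
        json.dump(results, f)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        # Store the JSON at the top level of the archive, without folders.
        archive.write(json_path, arcname=stem + ".json")
    return zip_path
```

For example, `package_results(results, "test", "myalg", ".")` produces `densepose_test_myalg_results.zip` in the current directory.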