VITS2: Improving Quality and Efficiency of Single-Stage Text-to-Speech with Adversarial Learning and Architecture Design

Unofficial implementation of the VITS2 paper, the sequel to the VITS paper. (Thanks to the authors for their work!)

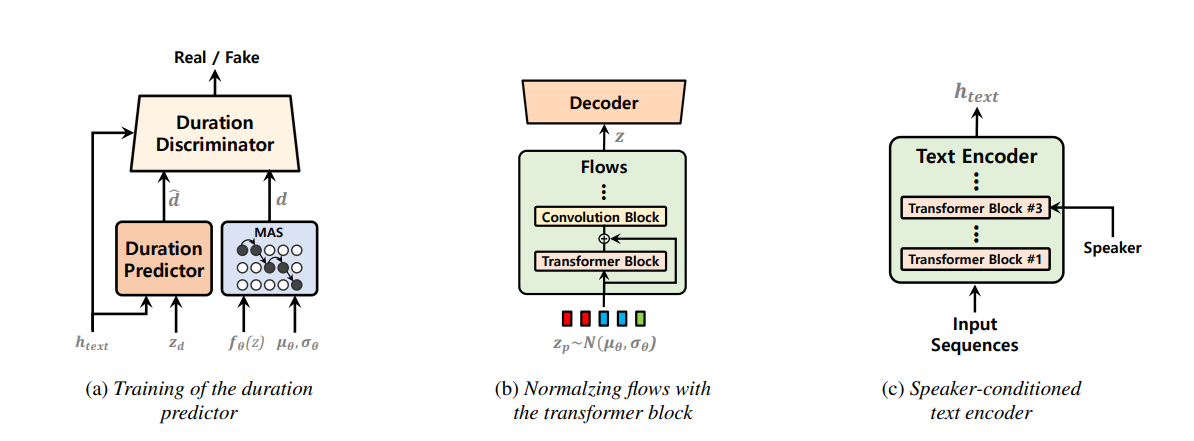

Single-stage text-to-speech models have been actively studied recently, and their results have outperformed two-stage pipeline systems. Although the previous single-stage model has made great progress, there is room for improvement in terms of its intermittent unnaturalness, computational efficiency, and strong dependence on phoneme conversion. In this work, we introduce VITS2, a single-stage text-to-speech model that efficiently synthesizes a more natural speech by improving several aspects of the previous work. We propose improved structures and training mechanisms and present that the proposed methods are effective in improving naturalness, similarity of speech characteristics in a multi-speaker model, and efficiency of training and inference. Furthermore, we demonstrate that the strong dependence on phoneme conversion in previous works can be significantly reduced with our method, which allows a fully end-to-end single-stage approach.

- We will build this repo based on the VITS repo. The goal is to make it easy to transfer-learn from a pretrained VITS model!

- (08-17-2023) The authors were very kind to guide me through the paper and answer my questions. I am open to discussing any changes or answering questions about the implementation. Please feel free to open an issue or contact me directly.

- LJSpeech-no-sdp (refer to config.yaml in this checkpoint folder) | 64k steps | proof that training works! I would recommend that experts rename the checkpoints to *_0.pth and start training with transfer learning. (I will add a notebook soon to help beginners.)

- Check the 'Discussion' page for training logs, tensorboard links and other community contributions.

- Samples from a model trained on Russian (#32). Thanks to @shigabeev for sharing the samples.

- Some samples from a non-native English dataset are on the discussion page. Thanks to @athenasaurav for using his private GPU resources and dataset!

- Added sample audio at 104k steps (ljspeech-nosdp; tensorboard).

- Vietnamese samples. Thanks to @ductho9799 for sharing!
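The transfer-learning tip above amounts to seeding a fresh model directory with step-0 checkpoints. In the original VITS code, training resumes from the `G_*.pth`/`D_*.pth` file whose name carries the largest step number, so a lone `*_0.pth` in an otherwise empty model directory is what gets loaded. Below is a minimal sketch, assuming this repo keeps the `<prefix>_<step>.pth` naming (the `DUR` prefix for the duration discriminator is an assumption). It copies rather than renames so the original checkpoints survive; `seed_checkpoints` is a hypothetical helper, not part of the repo.

```python
import re
import shutil
from pathlib import Path


def seed_checkpoints(src_dir, dst_dir, prefixes=("G", "D", "DUR")):
    """Copy the newest <prefix>_<step>.pth from src_dir to dst_dir/<prefix>_0.pth.

    The prefixes (generator, discriminator, duration discriminator) are an
    assumption; a prefix with no matching checkpoint is simply skipped.
    Returns a dict mapping each copied prefix to its new *_0.pth path.
    """
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)
    dst_dir.mkdir(parents=True, exist_ok=True)
    copied = {}
    for prefix in prefixes:
        # Exact match so that "D" does not pick up "DUR_*.pth" files.
        pattern = re.compile(rf"^{re.escape(prefix)}_(\d+)\.pth$")
        steps = []
        for path in src_dir.iterdir():
            match = pattern.match(path.name)
            if match:
                steps.append((int(match.group(1)), path))
        if not steps:
            continue
        _, newest = max(steps)  # highest step number wins
        target = dst_dir / f"{prefix}_0.pth"
        shutil.copyfile(newest, target)
        copied[prefix] = target
    return copied
```

For example, `seed_checkpoints("logs/ljs_base", "logs/ljs_finetune")` followed by `python train.py -c <your config> -m ljs_finetune`, assuming model directories live under `./logs/<model name>` as in the original VITS.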

- Python >= 3.8

- Tested on PyTorch version 1.13.1 with Google Colab and LambdaLabs cloud.

- Clone this repository

- Install python requirements. Please refer to requirements.txt.

You may need to install espeak first: `apt-get install espeak`

- I used DeepPhonemizer to convert text to IPA; please download the DeepPhonemizer model here and place it in the Vits_Pytorch_2 root directory.

- Download datasets

- Download and extract the LJ Speech dataset, then rename or create a link to the dataset folder:

`ln -s /path/to/LJSpeech-1.1/wavs DUMMY1`

- For the multi-speaker setting, download and extract the VCTK dataset, and downsample the wav files to 22050 Hz. Then rename or create a link to the dataset folder:

`ln -s /path/to/VCTK-Corpus/downsampled_wavs DUMMY2`


- Build Monotonic Alignment Search and run preprocessing if you use your own datasets.
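For the preprocessing step in the last item, `--text_index` selects which `|`-separated column of a filelist holds the transcript: column 1 for LJ Speech (`path|text`) and column 2 for VCTK (`path|speaker_id|text`). The sketch below shows that per-line rewrite, loosely modelled on the original VITS `preprocess.py`, which writes the cleaned lines to a new `<filelist>.cleaned` file. `clean_filelist` and whatever cleaner you pass in are hypothetical stand-ins for the repo's g2p/cleaner functions.

```python
from typing import Callable, List


def clean_filelist(lines: List[str], text_index: int,
                   cleaner: Callable[[str], str]) -> List[str]:
    """Return filelist lines with column `text_index` replaced by cleaner(text).

    Every other column (wav path, speaker id) is passed through untouched.
    """
    out = []
    for line in lines:
        fields = line.rstrip("\n").split("|")
        fields[text_index] = cleaner(fields[text_index])
        out.append("|".join(fields))
    return out
```

Passing the wrong index would run g2p over the wav path or the speaker id instead of the text, which is why the LJ Speech and VCTK commands differ only in `--text_index`.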

```sh
# Cython-version Monotonic Alignment Search
cd monotonic_align
python setup.py build_ext --inplace
cd ..

# Preprocessing (g2p) for your own datasets. Preprocessed phonemes for LJ Speech and VCTK have already been provided.
# python preprocess.py --text_index 1 --filelists filelists/ljs_audio_text_train_filelist.txt filelists/ljs_audio_text_val_filelist.txt filelists/ljs_audio_text_test_filelist.txt
# python preprocess.py --text_index 2 --filelists filelists/vctk_audio_sid_text_train_filelist.txt filelists/vctk_audio_sid_text_val_filelist.txt filelists/vctk_audio_sid_text_test_filelist.txt
```

- model forward pass (dry-run)

```python
import torch

from models import SynthesizerTrn

net_g = SynthesizerTrn(
    n_vocab=256,
    spec_channels=80,  # <--- vits2 parameter (changed from 513 to 80)
    segment_size=8192 // 256,  # in frames: segment size in samples // hop_length, as VITS's train.py passes it
    inter_channels=192,
    hidden_channels=192,
    filter_channels=768,
    n_heads=2,
    n_layers=6,
    kernel_size=3,
    p_dropout=0.1,
    resblock="1",
    resblock_kernel_sizes=[3, 7, 11],
    resblock_dilation_sizes=[[1, 3, 5], [1, 3, 5], [1, 3, 5]],
    upsample_rates=[8, 8, 2, 2],
    upsample_initial_channel=512,
    upsample_kernel_sizes=[16, 16, 4, 4],
    n_speakers=0,
    gin_channels=0,
    use_sdp=True,
    use_transformer_flows=True,  # <--- vits2 parameter
    # (choose from "pre_conv", "fft", "mono_layer_inter_residual", "mono_layer_post_residual")
    transformer_flow_type="fft",  # <--- vits2 parameter
    use_spk_conditioned_encoder=True,  # <--- vits2 parameter
    use_noise_scaled_mas=True,  # <--- vits2 parameter
    use_duration_discriminator=True,  # <--- vits2 parameter
)

x = torch.LongTensor([[1, 2, 3], [4, 5, 6]])  # token ids (batch of 2)
x_lengths = torch.LongTensor([3, 2])  # token lengths
y = torch.randn(2, 80, 100)  # mel spectrograms: (batch, mel channels, frames)
y_lengths = torch.LongTensor([100, 80])  # mel spectrogram lengths (integers, like x_lengths)

outputs = net_g(
    x=x,
    x_lengths=x_lengths,
    y=y,
    y_lengths=y_lengths,
)

# calculate loss and backpropagate
```

```sh
# LJ Speech
python train.py -c configs/vits2_ljs_nosdp.json -m ljs_base  # no-sdp; (recommended)
python train.py -c configs/vits2_ljs_base.json -m ljs_base  # with sdp;

# VCTK
python train_ms.py -c configs/vits2_vctk_base.json -m vctk_base

# for onnx export of trained models
python export_onnx.py --model-path="G_64000.pth" --config-path="config.json" --output="vits2.onnx"
python infer_onnx.py --model="vits2.onnx" --config-path="config.json" --output-wav-path="output.wav" --text="hello world, how are you?"
```

- Added an LSTM discriminator to the duration predictor.

- Added adversarial loss to the duration predictor ("use_duration_discriminator" flag in the config file; default is "True").

- Added Gaussian noise to Monotonic Alignment Search (Section 2.2); this might need expert verification.

- Added the "use_noise_scaled_mas" flag to the config file (True or False). The noise scale is updated during training based on the number of steps and never goes below 0.0.

- Updated models.py, train.py and train_ms.py.

- Updated config files (vits2_vctk_base.json; vits2_ljs_base.json).

- Updated losses in train.py and train_ms.py.
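Section 2.2 of the paper adds Gaussian noise to the alignment scores before Monotonic Alignment Search, with a noise scale that shrinks as training progresses. Below is a minimal NumPy sketch of that idea, not the repo's Cython `monotonic_align` code. `maximum_path` is a plain dynamic-programming MAS over a `(T_text, T_mel)` score matrix. The schedule in `mas_noise_scale` (start at 0.01, subtract 2e-6 per step, clamp at 0.0) and the "standard Gaussian × std of the scores × scale" form of the noise are assumptions about the defaults, to be checked against `train.py` and `models.py`.

```python
import numpy as np


def mas_noise_scale(step, initial=0.01, delta=2e-6):
    """Linearly decaying noise scale, clamped at 0.0 (assumed schedule)."""
    return max(initial - delta * step, 0.0)


def maximum_path(value):
    """Monotonic alignment search on a (T_text, T_mel) score matrix.

    Returns a 0/1 matrix of the same shape in which every mel frame is
    assigned to exactly one token, tokens are visited in order, and each
    token receives at least one frame. Requires T_mel >= T_text.
    """
    t_x, t_y = value.shape
    neg_inf = -np.inf
    q = np.full((t_x, t_y), neg_inf)  # best cumulative score ending at (i, j)
    q[0, 0] = value[0, 0]
    for j in range(1, t_y):
        for i in range(min(j + 1, t_x)):
            stay = q[i, j - 1]
            move = q[i - 1, j - 1] if i > 0 else neg_inf
            q[i, j] = value[i, j] + max(stay, move)
    # Backtrack from the last token at the last frame.
    path = np.zeros_like(value, dtype=np.int64)
    i = t_x - 1
    for j in range(t_y - 1, -1, -1):
        path[i, j] = 1
        if j > 0 and i > 0 and q[i - 1, j - 1] >= q[i, j - 1]:
            i -= 1
    return path


def noisy_maximum_path(value, step, rng):
    """MAS on scores perturbed by Gaussian noise scaled by their std."""
    noise = value.std() * rng.standard_normal(value.shape) * mas_noise_scale(step)
    return maximum_path(value + noise)
```

Early in training the noise lets MAS explore alignments other than the current best one; once the scale reaches 0.0, `noisy_maximum_path` reduces to plain MAS.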

- Added a transformer block to the normalizing flow. There are three types of transformer blocks: pre-convolution (my implementation), FFT (from the so-vits-svc repo) and mono-layer (with inter-residual and post-residual variants).

- Added the "transformer_flow_type" flag to the config file. Choose from "pre_conv", "fft", "mono_layer_inter_residual", "mono_layer_post_residual".

- Added layers and blocks in models.py (ResidualCouplingTransformersLayer, ResidualCouplingTransformersBlock, FFTransformerCouplingLayer, MonoTransformerFlowLayer).

- Added to the config file (vits2_ljs_base.json; can be turned on using the "use_transformer_flows" flag).
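The point of putting a transformer block inside a coupling layer is that the network computing the transform can attend across the whole sequence, while the layer stays invertible. The toy NumPy sketch below illustrates this with a mean-only coupling (the kind VITS's flow uses) whose shift network starts with single-head self-attention. `TransformerCoupling` and its random weights are hypothetical. The layer works on `(T, C)` arrays rather than VITS's `(B, C, T)` tensors and is not any of the repo's four flow types.

```python
import numpy as np


def self_attention(h, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over time; h is (T, C)."""
    q, k, v = h @ w_q, h @ w_k, h @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


class TransformerCoupling:
    """Mean-only affine coupling whose shift network starts with self-attention.

    x is (T, C) with even C. The first half x0 passes through unchanged and
    conditions a shift that is added to the second half x1.
    """

    def __init__(self, channels, rng):
        half = channels // 2
        self.w_q, self.w_k, self.w_v = (
            rng.standard_normal((half, half)) * 0.1 for _ in range(3)
        )
        self.w_out = rng.standard_normal((half, half)) * 0.1

    def _shift(self, x0):
        h = x0 + self_attention(x0, self.w_q, self.w_k, self.w_v)  # residual attention
        return h @ self.w_out

    def forward(self, x):
        x0, x1 = np.split(x, 2, axis=-1)
        return np.concatenate([x0, x1 + self._shift(x0)], axis=-1)

    def reverse(self, y):
        y0, y1 = np.split(y, 2, axis=-1)
        return np.concatenate([y0, y1 - self._shift(y0)], axis=-1)
```

Because the attention only reads `x0`, which `forward` leaves untouched, `reverse` can recompute the exact same shift however expressive the attention is. The flip layers between couplings in VITS's flow make sure every channel eventually gets transformed.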

- Added speaker embedding to the text encoder in models.py (TextEncoder; backward compatible with VITS)

- Added to the config file (vits2_ljs_base.json; can be turned on using the "use_spk_conditioned_encoder" flag).
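Conditioning the text encoder on the speaker means projecting the speaker embedding to the encoder's hidden size and adding it, broadcast over every token position, partway through the layer stack. Below is a minimal NumPy sketch of that pattern. `encode_text` is hypothetical, and using `cond_layer_idx=2` (the third layer) reflects a reading of the paper that should be checked against `TextEncoder` in `models.py`.

```python
import numpy as np


def encode_text(h, layers, spk_vec=None, w_spk=None, cond_layer_idx=2):
    """Run a stack of encoder layers, adding a projected speaker vector once.

    h: (T, C) token states; layers: list of callables mapping (T, C) -> (T, C);
    spk_vec: (G,) speaker embedding; w_spk: (G, C) projection matrix.
    Without a speaker vector this is the plain, unconditioned VITS path.
    """
    for i, layer in enumerate(layers):
        if i == cond_layer_idx and spk_vec is not None:
            h = h + spk_vec @ w_spk  # (C,) broadcast over all T positions
        h = layer(h)
    return h
```

Leaving `spk_vec` as `None` skips the addition entirely, which mirrors the backward compatibility with VITS mentioned above.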

- Added mel spectrogram posterior encoder in train.py

- Added a new config file (vits2_ljs_base.json; can be turned on using the "use_mel_posterior_encoder" flag).

- Updated 'data_utils.py' to use the "use_mel_posterior_encoder" flag for vits2
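The "changed from 513 to 80" comment in the dry-run follows from the STFT size. With a 1024-point FFT, the linear spectrogram that the VITS posterior encoder normally reads has 1024 // 2 + 1 = 513 bins, while the mel posterior encoder reads 80 mel channels computed from that same STFT. The NumPy sketch below builds both inputs, assuming LJ Speech-style settings (22050 Hz audio, `n_fft=1024`, `hop=256`). Its HTK-style triangular filterbank is a stand-in and will not reproduce the librosa filterbank that VITS builds, so treat the values as illustrative; only the shapes carry over.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(sr=22050, n_fft=1024, n_mels=80, fmin=0.0, fmax=None):
    """Triangular HTK-style mel filters, shape (n_mels, n_fft // 2 + 1)."""
    fmax = sr / 2 if fmax is None else fmax
    fft_freqs = np.linspace(0.0, sr / 2, n_fft // 2 + 1)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fb = np.zeros((n_mels, fft_freqs.size))
    for m in range(n_mels):
        lo, center, hi = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - lo) / (center - lo)
        falling = (hi - fft_freqs) / (hi - center)
        fb[m] = np.clip(np.minimum(rising, falling), 0.0, None)
    return fb


def linear_spectrogram(wav, n_fft=1024, hop=256):
    """Magnitude STFT with a Hann window, shape (n_fft // 2 + 1, frames)."""
    window = np.hanning(n_fft)
    frames = [wav[s:s + n_fft] * window
              for s in range(0, len(wav) - n_fft + 1, hop)]
    return np.abs(np.fft.rfft(np.stack(frames), axis=-1)).T
```

For example, `mel_filterbank() @ linear_spectrogram(wav)` turns a `(513, frames)` linear spectrogram into an `(80, frames)` mel spectrogram, which is why `spec_channels` drops from 513 to 80 when the mel posterior encoder is enabled.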

- Added vits2 flags to train.py (single-speaker model)

- Added vits2 flags to train_ms.py (multi-speaker model)
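The flags listed above are read from the JSON config. The sketch below collects them in one place, assuming they sit in the `model` section. The README does not say where they live, and `use_mel_posterior_encoder` is also consumed by `data_utils.py`, so it may appear under `data` as well. The fallback values (`False` for the booleans, `"pre_conv"` for the flow type) are hypothetical choices for this sketch, not the repo's defaults, and `read_vits2_flags` is not a repo function.

```python
import json

# Flag names come from this README; the "model" section and these fallback
# values are assumptions made for illustration only.
VITS2_FLAGS = {
    "use_mel_posterior_encoder": False,
    "use_transformer_flows": False,
    "transformer_flow_type": "pre_conv",
    "use_spk_conditioned_encoder": False,
    "use_noise_scaled_mas": False,
    "use_duration_discriminator": False,
}
FLOW_TYPES = {"pre_conv", "fft", "mono_layer_inter_residual", "mono_layer_post_residual"}


def read_vits2_flags(config_text):
    """Parse a JSON config string and return the VITS2 flags with fallbacks filled in."""
    model_cfg = json.loads(config_text).get("model", {})
    flags = {name: model_cfg.get(name, default) for name, default in VITS2_FLAGS.items()}
    # Reject a flow type outside the four choices listed above.
    if flags["use_transformer_flows"] and flags["transformer_flow_type"] not in FLOW_TYPES:
        raise ValueError(f"unknown transformer_flow_type: {flags['transformer_flow_type']!r}")
    return flags
```

Falling back to `False` when a flag is missing keeps an old VITS config on plain VITS behaviour, which fits the goal of transferring from VITS checkpoints.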

- Added ONNX export support.

- Added Gradio demo support.

- @erogol for quick feedback and guidance. (Please check out his awesome CoquiTTS repo.)

- @lexkoro for discussions and help with the prototype training.

- @manmay-nakhashi for discussions and help with the code.

- @athenasaurav for offering GPU support for training.

- @w11wo for ONNX support.

- @Subarasheese for Gradio UI.