- 🌟 We will release the GeoGround demo, code and datasets as soon as possible. 🌟

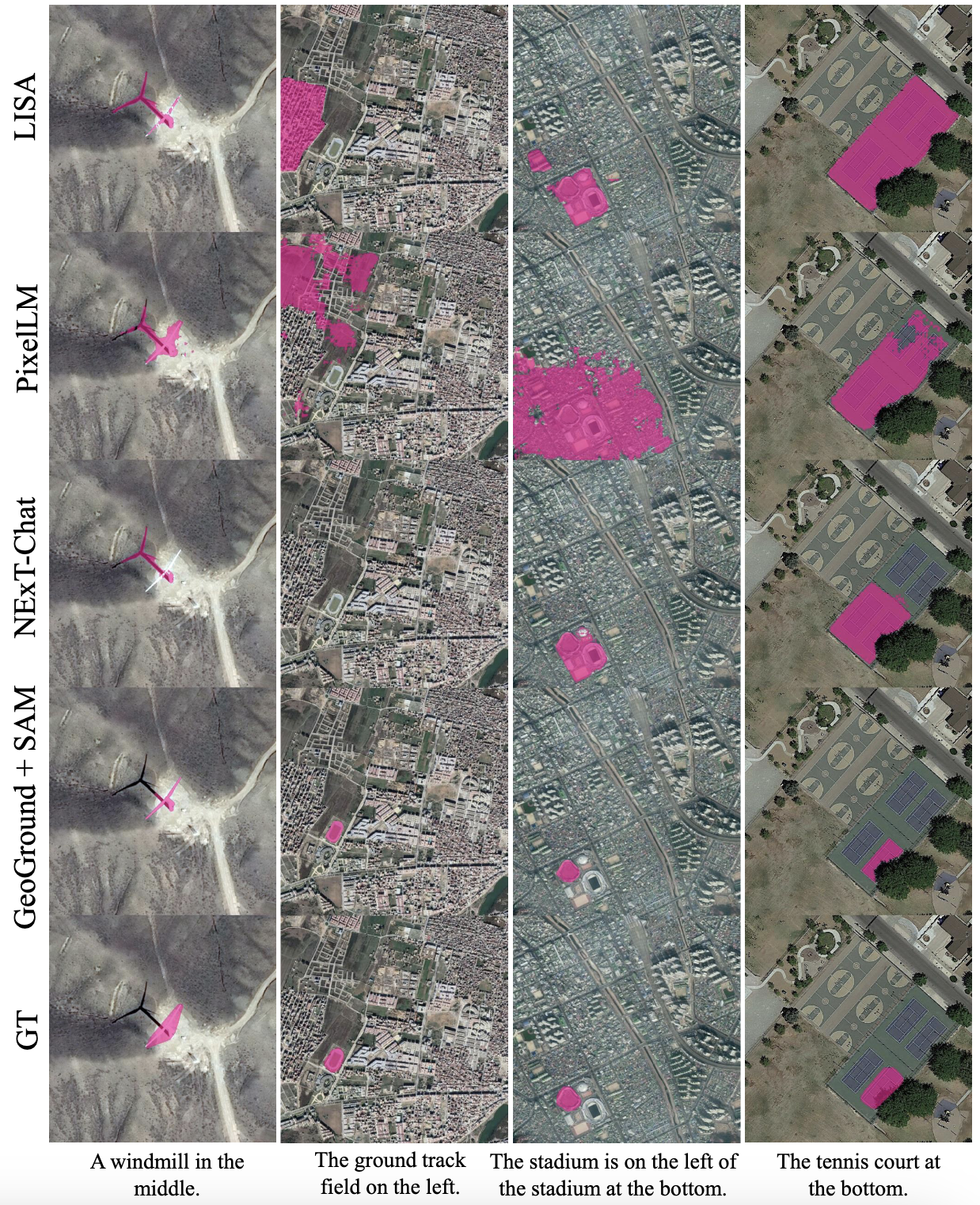

Remote sensing (RS) visual grounding uses natural language expressions to locate specific objects in RS images, as bounding boxes or segmentation masks, enhancing human interaction with intelligent RS interpretation systems. Early research in this area was primarily based on horizontal bounding boxes (HBBs), but as more diverse RS datasets have become available, tasks involving oriented bounding boxes (OBBs) and segmentation masks have emerged. In practical applications, different targets require different grounding types: an HBB localizes an object's position, an OBB adds its orientation, and a mask depicts its shape. However, existing specialized methods are typically tailored to a single type of RS visual grounding task and are hard to generalize across tasks. In contrast, large vision-language models (VLMs) exhibit powerful multi-task learning capabilities but struggle with dense prediction tasks such as segmentation. This paper proposes GeoGround, a novel framework that unifies support for HBB, OBB, and mask RS visual grounding tasks, allowing flexible output selection. Rather than customizing the VLM architecture, our work supports pixel-level visual grounding output through the Text-Mask technique. We define prompt-assisted and geometry-guided learning to enhance consistency across different signals. To support model training, we present refGeo, a large-scale RS visual instruction-following dataset containing 161k image-text pairs. Experimental results show that GeoGround performs strongly across four RS visual grounding tasks, matching or surpassing specialized methods on multiple benchmarks.

- Framework. We propose GeoGround, a novel VLM framework that unifies box-level and pixel-level RS visual grounding tasks while maintaining its inherent dialogue and image understanding capabilities.
- Dataset. We introduce refGeo, the largest RS visual grounding instruction-following dataset, consisting of 161k image-text pairs and 80k RS images, including a new 3D-aware aerial vehicle visual grounding dataset.
- Benchmark. We conduct extensive experiments on various RS visual grounding tasks, providing valuable insights for future RS VLM research and opening new avenues for research in RS visual grounding.

We propose the Text-Mask paradigm, which distills and compresses the information embedded in the mask into a compact text sequence that can be efficiently learned by VLMs. Additionally, we introduce hybrid supervision, which incorporates prompt-assisted learning (PAL) and geometry-guided learning (GGL) to fine-tune the model using three types of signals, ensuring output consistency and enhancing the model’s understanding of the relationships between different grounding types.
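To make the idea of turning a mask into text more concrete, here is a minimal sketch. It is not the Text-Mask encoding itself, whose exact format is not given here. As an assumption for illustration only, it downsamples a binary mask to a coarse grid and run-length encodes each row; the function names, the grid size, and the `value*length` text format are all hypothetical.

```python
def rle_row(cells):
    """Run-length encode one row of 0/1 cells as 'value*length' runs."""
    runs = []
    run_val, run_len = cells[0], 0
    for c in cells:
        if c == run_val:
            run_len += 1
        else:
            runs.append(f"{run_val}*{run_len}")
            run_val, run_len = c, 1
    runs.append(f"{run_val}*{run_len}")
    return ",".join(runs)


def mask_to_text(mask, grid=8):
    """Downsample a binary mask (list of rows) to grid x grid, then encode as text.

    Hypothetical format: rows separated by ';', runs separated by ','.
    """
    h, w = len(mask), len(mask[0])
    rows = []
    for gy in range(grid):
        y0, y1 = gy * h // grid, (gy + 1) * h // grid
        cells = []
        for gx in range(grid):
            x0, x1 = gx * w // grid, (gx + 1) * w // grid
            block = [mask[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            # A cell counts as foreground if at least half of its pixels are set.
            cells.append(1 if block and 2 * sum(block) >= len(block) else 0)
        rows.append(cells)
    return ";".join(rle_row(r) for r in rows)


def text_to_grid(text):
    """Decode the text sequence back into the coarse 0/1 grid."""
    grid = []
    for row in text.split(";"):
        cells = []
        for run in row.split(","):
            value, length = run.split("*")
            cells.extend([int(value)] * int(length))
        grid.append(cells)
    return grid
```

The decoded grid is only a coarse approximation of the original mask; the trade-off between grid size and sequence length is the design choice such an encoding has to make.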

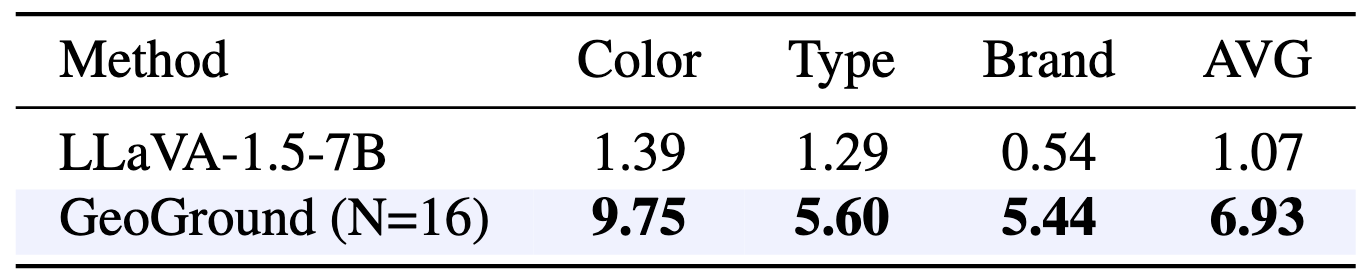

We introduce refGeo, a large-scale RS visual grounding instruction-following dataset. It consolidates four existing RS visual grounding datasets and introduces a new aerial vehicle visual grounding dataset (AVVG). AVVG extends traditional 2D visual grounding to a 3D context, enabling VLMs to perceive 3D space from 2D aerial imagery. For each referred object, we provide an HBB, an OBB, and a mask, with the mask automatically generated by SAM.
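The three annotation types are geometrically related: the tightest HBB around an object can be derived from its OBB or from its mask. The sketch below illustrates that relation only. It is not the dataset's annotation pipeline or the geometry-guided learning procedure, and the OBB parameterization `(cx, cy, w, h, angle_deg)` and all function names are assumptions made for this example.

```python
import math


def obb_to_corners(cx, cy, w, h, angle_deg):
    """Return the four corners of an oriented box given center, size, and rotation.

    The (cx, cy, w, h, angle_deg) format is an assumed convention.
    """
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    corners = []
    for dx, dy in ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)):
        # Rotate the offset around the center, then translate.
        corners.append((cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a))
    return corners


def corners_to_hbb(corners):
    """Tightest axis-aligned box (x_min, y_min, x_max, y_max) around the corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (min(xs), min(ys), max(xs), max(ys))


def mask_to_hbb(mask):
    """Tightest axis-aligned box around the foreground pixels of a binary mask.

    Returns None for an empty mask. The max edges are exclusive (pixel index + 1).
    """
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        return None
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)
```

A consistency check between outputs could compare the HBB predicted directly with the one derived from the predicted OBB or mask.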

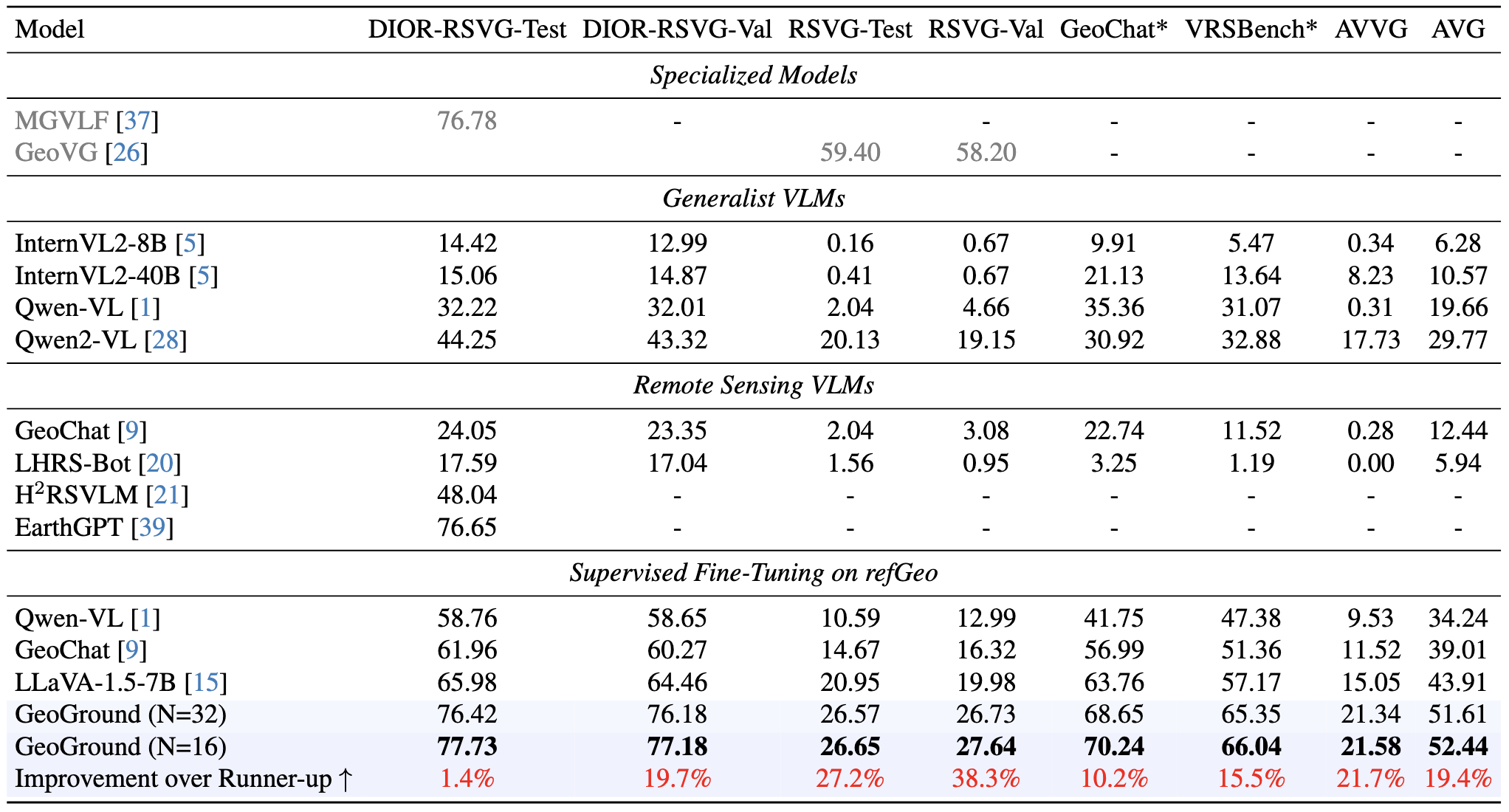

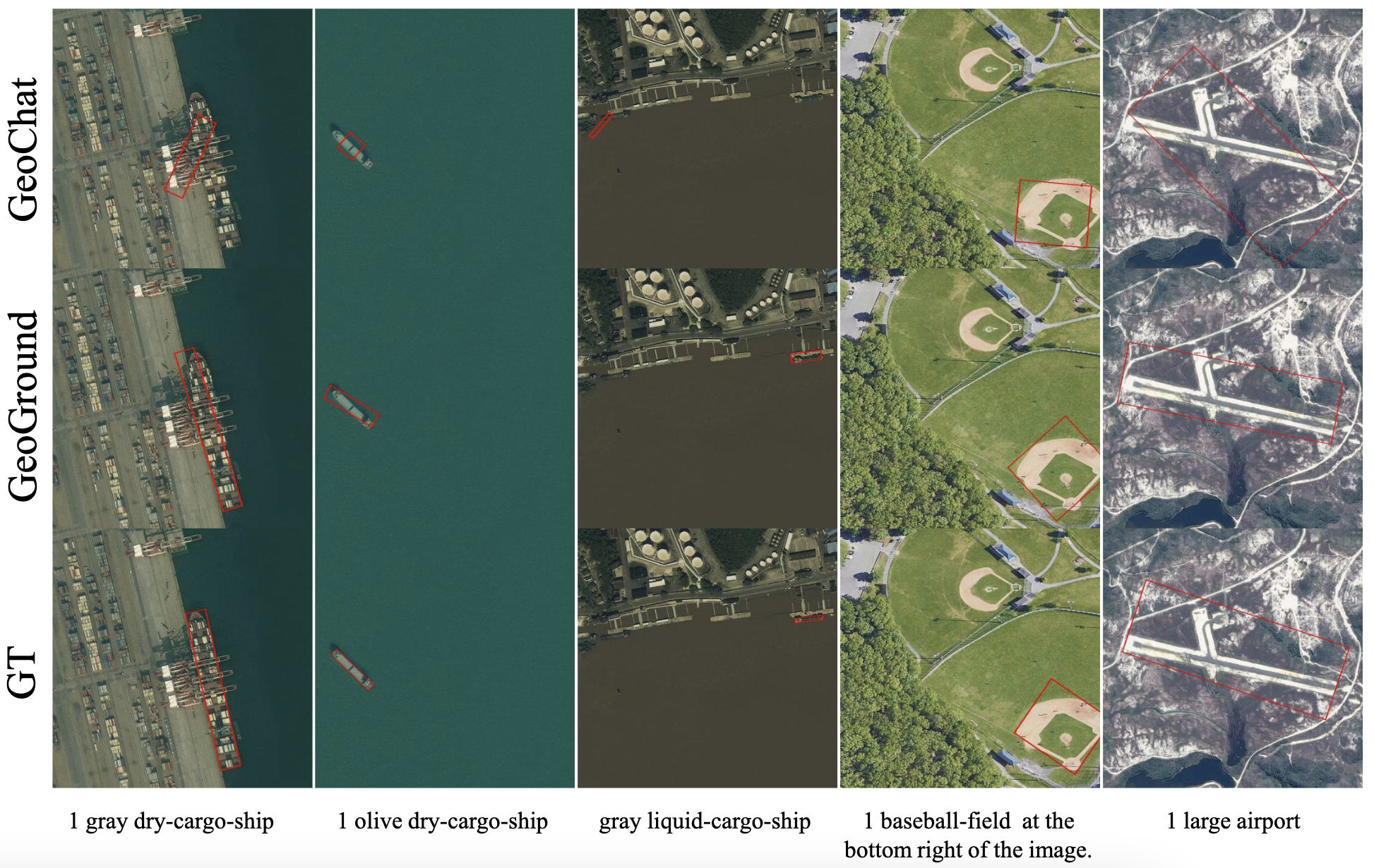

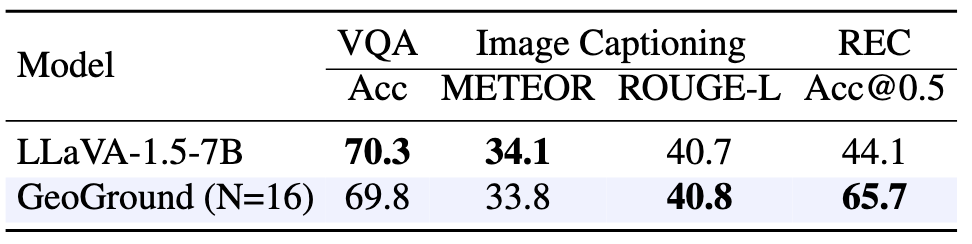

GeoGround achieves the best performance across all REC benchmarks, surpassing the specialized model on the DIOR-RSVG test set. Benefiting from the wide range of image resolutions and GSD in refGeo, the fine-tuned model shows significant performance improvements on datasets with a high proportion of small objects, such as RSVG and AVVG.

The results demonstrate GeoGround's dominance in RS visual grounding tasks based on OBB, further validating the effectiveness of our hybrid supervision approach.

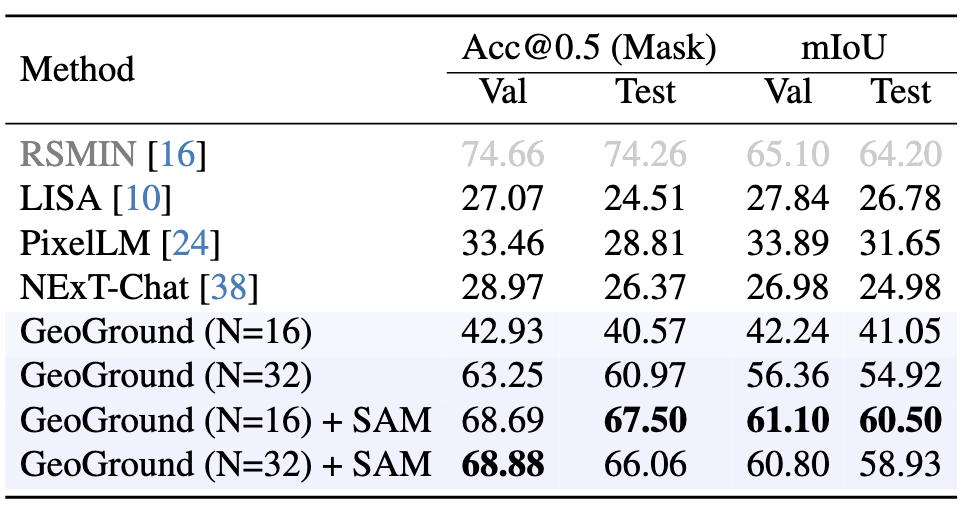

Unlike other VLMs, GeoGround does not require an additional mask decoder, as it inherently possesses segmentation capabilities. Moreover, we use SAM to refine the coarse masks generated by GeoGround, which allows GeoGround to match the performance of the best RS referring segmentation model.

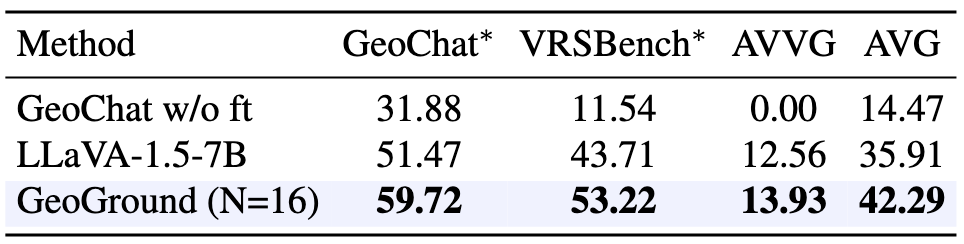

We present an RS Generalized REC benchmark based on AVVG, which differs from standard REC in that one referring expression may correspond to multiple objects.

Our approach enhances object-level understanding without compromising the holistic image comprehension capabilities of VLMs.

@misc{zhou2024geoground,
  title={GeoGround: A Unified Large Vision-Language Model for Remote Sensing Visual Grounding},
  author={Yue Zhou and Mengcheng Lan and Xiang Li and Yiping Ke and Xue Jiang and Litong Feng and Wayne Zhang},
  year={2024},
  eprint={2411.11904},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2411.11904},
}